I’ve had lurking in my ‘to do’ list a comment about doing a post on when to gamify. In general, of course, I avoid it, but I have to acknowledge there are times when it makes sense. And someone challenged me to think about what those circumstances are. So here I’m taking a principled shot at it, but I also welcome your thoughts.

To be clear, let me first define what gamification is to me. I'm a big fan of serious games, that is, games where you wrap meaningful decisions into contexts that are intrinsically meaningful. And I can be convinced that there are times when tarting up memory practice with quiz-show window-dressing makes sense, e.g. when it has to be 'in the head'. What I typically refer to as gamification, however, is where you use external resources, such as scores, leaderboards, badges, and rewards, to support behavior you want to happen.

I happened to hear a gamification expert talk, and he pointed out some rules about what he termed ‘goal science’. He had five pillars:

- that clear goals make people feel connected and align the organization

- that working on goals together (in a competitive sense ;) makes them feel supported

- that feedback helps people progress in systematic ways

- that the tight loop of feedback is more personalized

- that choosing challenging goals engages people

Implicit in this is that you do good goal setting and rewards. You have to have some good alignment to get these points across. He made the point that doing it badly could be worse than not doing it at all!

With these ground rules, we can think about when it might make sense. I'll argue that one obvious, and probably sad, case would be when you don't have a coherent organization, and people aren't aware of their role in the organization. Still, making up for a lack of effective communication isn't necessarily a good thing, in my mind.

I think it also might make sense as a fun diversion to achieve a short-term goal. This might be particularly useful for an organizational change, when extra motivation could be of assistance in supporting new behaviors. (Say, for moving to a coherent organization. ;) Or for some periodic event, say one supporting a philanthropic commitment related to the organization.

And it can be a reward for a desired behavior, as with my frequent flier points. I collect them, hoping to spend them. I resent it a bit, though, because the payoff is never as good as promised, which is a worry: it means it's not being done well.

On the other hand, I can’t see using it on an ongoing basis, as it seems it would undermine the intrinsic motivation of doing meaningful work. Making up for a lack of meaningful work would be a bad thing, too.

So, I recall talking to a guy many moons ago who was an expert in motivation for the workplace. And I had the opportunity to see the staggering amount of stuff available to orgs to reward behavior (largely sales) at an exhibit happening next to our event. It’s clear I’m not an expert, but while I’ll stick to my guns about preferring intrinsic motivation, I’m quite willing to believe that there are times it works, including on me.

Ok, those are my thoughts, what’ve I missed?

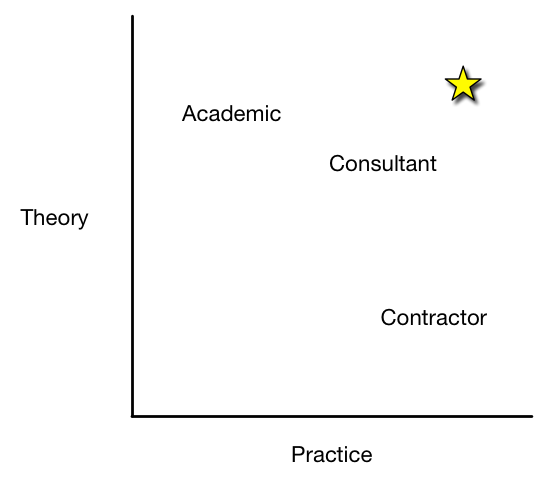

I was reminded of it, and realize I see it slightly differently. So I'd put someone high on the theory/information side as an academic or researcher, whether they're in an institution or not. They know the theories behind the outcomes, and may study them, but don't apply them. And I'd put someone who can execute against a particular model as a contractor. You know what you want done, and you hire someone to do it.