If you follow this blog (and you should :), it was pretty obvious that I was at the FocusOn Learning conference in San Diego last week (the previous two posts were mindmaps of the keynotes). And it was fun as always. Here are some further reflections on what happened, as an exercise in meta-learning.

There were three themes to the conference: mobile, games, and video. I'm pretty active in the first two (two books on mobile, one on games), and the last is related to things I care about and talk about. The focus led to some interesting outcomes: some folks were very interested in just one of the topics, while others were looking a bit more broadly. Whether that's good or not depends on your perspective, I guess.

Mobile was present, happily, and continues to evolve. People are still talking about courses on a phone, but more folks were talking about extending the learning. Some of it was pretty dumb – just content or flash cards as learning augmentation – but there were interesting applications. Importantly, there was a growing awareness about performance support as a sensible approach. It’s nice to see the field mature.

For games, there were positive and negative signs. The good news is that games are being more fully understood in terms of their role in learning, e.g. deep practice. The bad news is that there's still a lot of interest in gamification without a concomitant awareness of the important distinctions. Tarting up drill-and-kill with PBL (points, badges, and leaderboards; the new acronym, apparently) isn't worth significant interest! We know how to drill the things that must be drilled, but our focus should be on intrinsic interest.

As a side note, the demise of Flash has left us without a good game development environment. Flash is both a development environment and a delivery platform. As a development environment Flash had a low learning threshold, and yet could be used to build complex games. As a delivery platform, however, it’s woefully insecure (so much so that it’s been proscribed in most browsers). The fact that Adobe couldn’t be bothered to generate acceptable HTML5 out of the development environment, and let it languish, leaves the market open for another accessible tool. And Unity or Unreal provide good support (as I understand it), but still require coding. So we’re not at an easily accessible place. Oh, for HyperCard!

Much of the video interest was in technical issues (how to get quality, and/or how to do it cheaply), but a lot of interest was also in interactive video. I think branching video is a really powerful learning environment for contextualized decision making. As a consequence, the advent of tools that make it easier is to be lauded. An interesting session with the wise Joe Ganci (@elearningjoe) and a GoAnimate guy talked about when to use video versus animation, which largely seemed to reflect my view (confirmation bias ;) that it's about whether you want more context (video) or concept (animation). Of course, it was also about the cost of production and the need for fidelity (video more than animation in both cases).

There was a lot of interest in VR, which crossed over between video and games. That's interesting, because it's not inherently tied to games or video! In short, it's a delivery technology. You can do branching scenarios, full game engine delivery, or just video in VR. The visuals can be generated as video or from digital models. There was some awareness of this; e.g., fun was made of the idea of presenting PowerPoint in VR (just like in Second Life ;).

I did an ecosystem presentation that contextualized all three (video, games, mobile) in the bigger picture, and also drew upon their cognitive and then L&D roles. I also deconstructed the game Fluxx (a really fun game with an interesting ‘twist’). Overall, it was a good conference (and nice to be in San Diego, one of my ‘homes’).

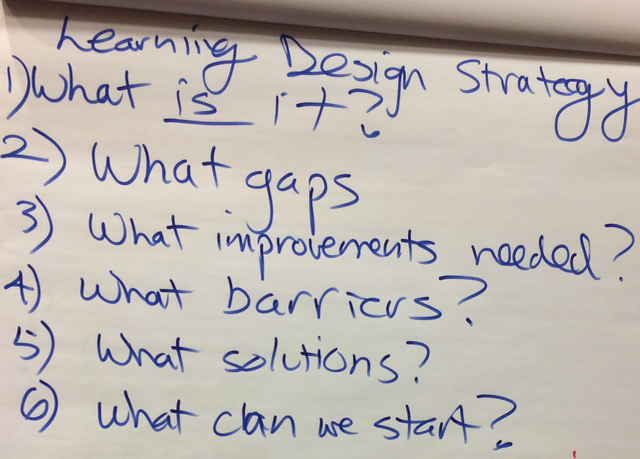

The other thing that I was involved in at Online Educa in Berlin was a session on The Flexible Worker. Three of us presented, each addressing one particular topic. One presentation was on collaborating to produce an elearning course on sleeping better, with the presenter’s firm demonstrating expertise in elearning, while the other firm had the subject matter expertise on sleep health. A second presentation was on providing tools to trainers to devolve content development locally, addressing a problem with centrally-developed content. My presentation was on the gaps between what L&D does and how our brains work, and the implications. And, per our design, issues emerged.