The last of the thoughts still percolating in my brain from #mlearncon finally emerged when I sat down to create a diagram to capture my thinking (one way I try to understand things is to write about them, but I also frequently diagram them to help me map the emerging conceptual relationships into spatial relationships).

What I was thinking about was how to distinguish between emergent opportunities for driving learning experiences, and semantic ones. When we built the Intellectricity© system, we had a batch of rules that guided how we were sequencing the content, based upon research on learning (rather than hardwiring paths, which is what we mostly do now). We didn’t prescribe, we recommended, so learners could choose something else, e.g. the next best, or browse to what they wanted. As a consequence, we could also have a machine learning component that would troll through the outcomes and improve the system over time.

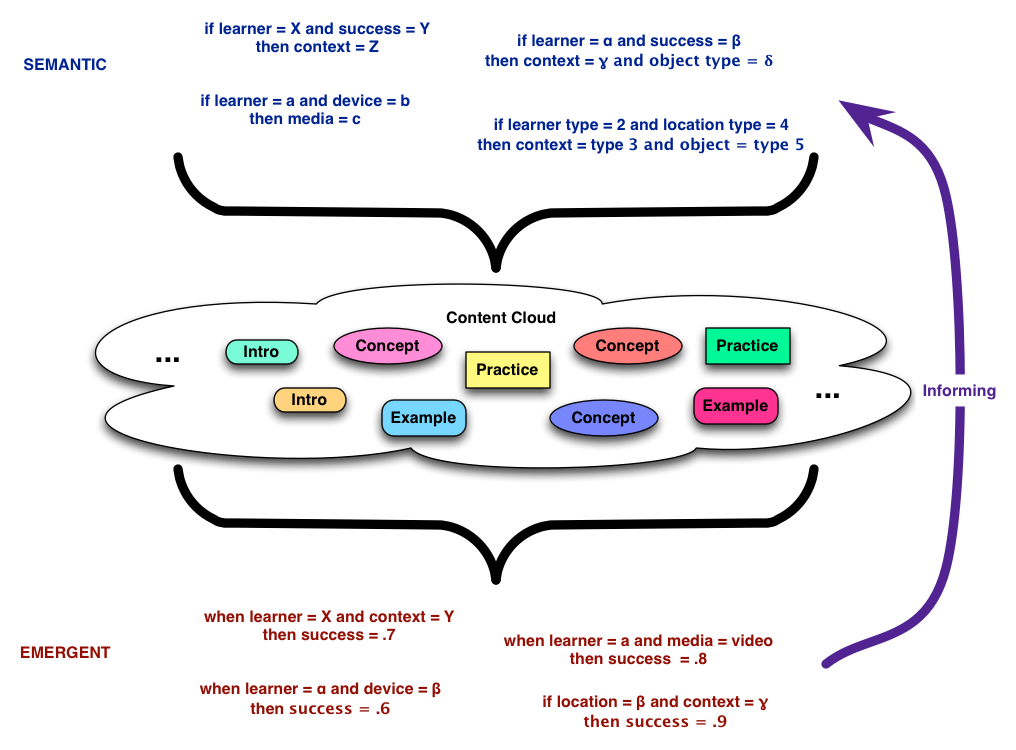

And that’s the principle here, now that mainstream systems are capable of doing similar things. What you see here are semantic rules (made-up ones) that explicitly make recommendations, ideally grounded in what’s empirically demonstrated in research. In places where research doesn’t stipulate, you could also make principled recommendations based upon the best theory. These rules would recommend objects to be pulled from a pool or cloud of available content.
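To make the "recommend, don't prescribe" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `ContentObject` and `Rule` types, the two rules, and the weights are made up, and this is not how Intellectricity was implemented. The point is only that rules score the pool and return a ranking, so the learner can still pick the second item or browse the rest.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ContentObject:
    name: str
    kind: str  # hypothetical categories: "concept", "example", "practice"


@dataclass
class Rule:
    description: str
    applies: Callable[[Dict], bool]  # does the rule fire for this learner state?
    prefers: str                     # kind of object the rule recommends
    weight: float                    # made-up strength of the recommendation


def recommend(state: Dict, pool: List[ContentObject], rules: List[Rule]) -> List[ContentObject]:
    """Rank the whole pool; nothing is hidden, so the learner can choose freely."""
    scores = {obj.name: 0.0 for obj in pool}
    for rule in rules:
        if rule.applies(state):
            for obj in pool:
                if obj.kind == rule.prefers:
                    scores[obj.name] += rule.weight
    # sorted() is stable, so ties keep their original pool order.
    return sorted(pool, key=lambda obj: scores[obj.name], reverse=True)


pool = [
    ContentObject("intro", "concept"),
    ContentObject("worked-example", "example"),
    ContentObject("quiz-1", "practice"),
]
rules = [
    Rule("after a failed practice, offer an example",
         lambda s: s["last_practice_failed"], "example", 2.0),
    Rule("after seeing the concept, offer practice",
         lambda s: s["seen_concept"], "practice", 1.0),
]
state = {"last_practice_failed": True, "seen_concept": True}

ranked = recommend(state, pool, rules)
print([obj.name for obj in ranked])  # ['worked-example', 'quiz-1', 'intro']
```

The first item is the recommendation, the second is the "next best", and the full list is what the learner could browse.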

However, as you track outcomes, e.g. success on practice, and start looking at the results by doing data analytics, you can start trolling for emergent patterns (again, made up). Here we might find confirmation (or the converse!) of the empirical rules, as well as potentially new patterns that we may be able to label semantically, and perhaps even some that would be entirely new. Which helps explain the growing interest in analytics. And, if you’re doing this across massive populations of learners, as is possible across institutions, or with really big organizations, you’re talking the ‘big data’ phenomenon that will provide the necessary quantities to start generating lots of these outcomes.
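As a rough sketch of what looking for patterns in tracked outcomes might involve, the Python below tallies practice success by the sequence of content that preceded it. The log format, the `success_rates` function, and the `min_attempts` threshold are all assumptions for illustration, and the data is invented. Real analytics would need far more data and proper statistics before calling anything a pattern.

```python
from collections import defaultdict


def success_rates(log, min_attempts=1):
    """Return the success rate per content sequence.

    log: iterable of (sequence, succeeded) pairs, where sequence is a tuple
    such as ("example", "practice"). Sequences with fewer than min_attempts
    observations are dropped as too thin to say anything about.
    """
    totals = defaultdict(lambda: [0, 0])  # sequence -> [successes, attempts]
    for sequence, succeeded in log:
        totals[sequence][0] += int(succeeded)
        totals[sequence][1] += 1
    return {
        seq: successes / attempts
        for seq, (successes, attempts) in totals.items()
        if attempts >= min_attempts
    }


# Invented outcome log: which sequence the learner saw, and whether practice succeeded.
log = [
    (("example", "practice"), True),
    (("example", "practice"), True),
    (("concept", "practice"), False),
    (("concept", "practice"), True),
]

rates = success_rates(log)
print(rates[("example", "practice")])  # 1.0
print(rates[("concept", "practice")])  # 0.5
```

A result like this could confirm a rule such as "show an example before practice", contradict it, or point at a sequence no rule anticipated.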

Another possibility is to specifically set up situations where you randomly trial a couple of alternatives that address known research questions, and use this data opportunity to conduct your experiments. This way we can advance our learning more quickly using our own hypotheses, while we look for emergent information as well.
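One simple way to trial alternatives is to assign each learner to a variant and then compare outcomes per variant. The sketch below is an assumption on my part, not a description of any particular system: it swaps true randomness for a hash of the learner and experiment identifiers, which spreads learners across variants while guaranteeing the same learner always sees the same alternative. The function name, identifiers, and variant labels are all hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(learner_id: str, experiment: str, variants: Sequence[str]) -> str:
    """Deterministically map a learner to one of the variants.

    Hashing the experiment name together with the learner id means a learner
    can land in different arms of different experiments, but always lands in
    the same arm of any one experiment.
    """
    key = f"{experiment}:{learner_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return variants[int(digest, 16) % len(variants)]


variants = ["example-first", "practice-first"]  # hypothetical alternatives
arm = assign_variant("learner-42", "sequencing-trial", variants)
print(arm in variants)  # True
```

Outcomes can then be logged alongside the assigned variant and compared once enough learners have passed through each one.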

Until the new patterns emerge, I recommend adapting on the basis of what we know, while simultaneously trolling for opportunities to answer questions that emerge as you design, and looking for emergent patterns as well. We have the capability (ok, so we had it over a decade ago, but now the capability is on tap in mainstream solutions, not just bespoke systems), so now we need the will. This is the benefit of thinking about content as systems – models and architectures – not just as unitary files. Are you ready?

I love the idea and thinking here. The one thing that is truly the secret sauce is “recommend, not prescribe”. We’ve all seen the poorly recommended Amazon book. Too often in L&D, we try to guide too strongly (prescribe). No equation will be perfect, and the engine and artificial intelligence will soon show its artificial stupidity. I think there is high value in using data mining and algorithms to drive recommendations that a learner may not be aware of, or may be biased against recognizing. However, I think the human element (good old common sense) and choice are the key to really making the framework a success.

One thing that I see in your framework design is a “solve for X” mentality. The variable may be user, success criteria, or location; each of the components may be the variable in the context that changes the system response. That’s the flexibility I see as critical to making an effective framework.