I have a statement that I actively recite to people: If I promise to do something, and it doesn’t get into a device, we never had the conversation. I’m not trying to be coy or problematic; there are sound reasons for this. It’s part of distributed cognition, and augmenting ourselves. It’s also part of a bigger picture, but here I am in praise of reminders.

Scheduling by the clock is relatively new from a historical perspective. We used to use the sun, and that was enough. As we engaged in more abstract and group activities, we needed better coordination. We invented clocks and time as a way to accomplish this. Train schedules, for instance, depend on it.

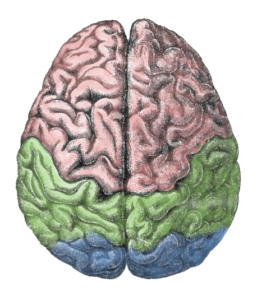

It’s an artifact of our creation, thus biologically secondary. We have to teach kids to tell time! Yet, we’re now beholden to it (even if we muck about with it, e.g. changing time twice a year, in conflict with research on the best outcomes for us). We created an external system to help us work better. However, it’s not well-aligned with our cognitive architecture, as we don’t naturally have instincts to recognize time.

We work better with external reminders. So, we have bells ringing to signal it’s time to go to another course, or to attend worship. These are similar to, but different from, other auditory signals (that don’t depend on our spatial attention) such as horns, buzzers, sirens, and the like. They can draw our attention to something that we should attend to. Which is a good thing!

I, for one, became a big fan of the Palm Pilot (I could only justify a III when I left academia, for complicated reasons). Having a personal device on which I could add and edit things like reminders in a date/time calendar fundamentally altered my effectiveness. Before, I could miss things if I disappeared into a creative streak on a presentation, paper, diagram, etc. With this, I could be interrupted and alerted that I had an appointment for something: call, meeting, etc. I automatically attach alerts to all my calendar entries.

Granted, I pushed myself to see just how effective I could make myself. Thus, I actively cultivated my address book, notes, and reminders as well as my calendar (and still do). But this is one area that’s really continued to support my ability to meet commitments, something I immodestly pride myself on delivering on. I hate to have to apologize for missing a commitment! (I’ll add multiple reminders to critical things!) Which doesn’t mean you shouldn’t actively avoid all the unnecessary events people would like to add to your calendar, but that’s just self-preservation!

Again, reminders are just one aspect of augmenting ourselves. There are many tools we can use – creating representations, externalizing knowledge, … – but this one in particular has been a big key to improving my ability to deliver. So I am in praise of reminders, as one of the tools we can, and should, use. What helps you?

(And now I’ll tick the box on my weekly reminder to write a blog post!)