The API formerly known as Tin Can (now the Experience API, or xAPI) provides a consistent way to report individual activity. With the simple syntax of <who> <did> <this> (e.g. <Clark Quinn> <wrote> <a blog post>), systems can generate records across a wide variety of activity, creating a rich base of data to mine for contingencies that lead to success. While machine learning and analytics are one opportunity, there’s another: having people look at the data. And one person in particular.
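To make the <who> <did> <this> structure concrete, here is a minimal sketch of building one such record as a plain Python dict. The field names (`actor`, `verb`, `object`, `timestamp`) follow the xAPI statement structure. The e-mail address and the verb and activity IRIs are hypothetical placeholders, not official xAPI vocabulary. A Learning Record Store (LRS) would typically receive a record like this as JSON.

```python
import json
from datetime import datetime, timezone


def make_statement(name, email, verb_id, verb_label, object_id, object_label):
    """Build a minimal xAPI-style statement as a plain dict.

    Field names follow the xAPI statement structure (actor, verb, object);
    the IRIs and e-mail passed in below are hypothetical placeholders.
    """
    return {
        # <who>: an Agent identified by a mailto: IRI
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        # <did>: a verb IRI plus a human-readable display label
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        # <this>: the Activity that was acted upon
        "object": {
            "objectType": "Activity",
            "id": object_id,
            "definition": {"name": {"en-US": object_label}},
        },
        # When it happened, as an ISO 8601 timestamp in UTC
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


stmt = make_statement(
    "Clark Quinn",
    "clark@example.com",                        # hypothetical address
    "http://example.com/xapi/verbs/wrote",      # hypothetical verb IRI
    "wrote",
    "http://example.com/activities/blog-post",  # hypothetical activity IRI
    "a blog post",
)
print(json.dumps(stmt, indent=2))
```

Each part of the sentence maps to one field, which is what makes records from very different systems comparable.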

As background, I was fortunate back in 1989 to get a post-doctoral fellowship to study at the Learning Research & Development Center at the University of Pittsburgh. One of the projects there was a series of intelligent tutoring systems (ITS) that shared an unusual characteristic. Unlike most ITS, which tutor on the domain, these three systems spanned different domains (geometric optics, microeconomics, and electrical circuits, if memory serves), but the tutoring was about systematicity in exploration. That is, the system tracked, and intervened on, whether you were varying one variable at a time, sampling across a broad enough range of data points, and so on. This reflected earlier work by Valerie Shute and Jeffrey Bonar on learning strategies.

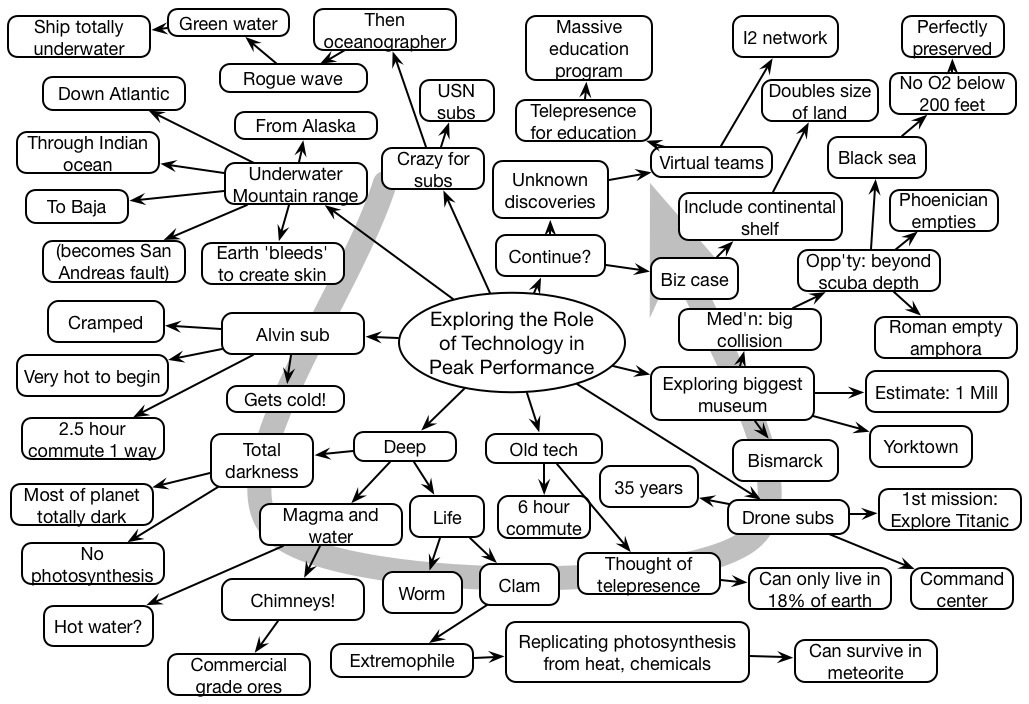

I had the further benefit of working under the guidance of Leona Schauble, a very insightful researcher. One of her projects, with Kalyani Raghavan, aimed to make learners’ paths through these systems visible and comprehensible to the learners themselves; they created the Dynamic And Reflective Notation (DARN, heh!) to capture and represent those paths.

Fast forward to today, and one of the big opportunities I see is for performers to reflect on their own paths of action. The granularity at which Tin Can captures data (and at which systems might be instrumented to generate it) could be too fine to be useful, so some way of aggregating activity to a meaningful level would be necessary. Still, looking at one’s own paths, and perhaps those of others, would be a useful way to reflect on process and look for opportunities to improve.
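One very simple way to aggregate fine-grained activity is to collapse runs of identical consecutive actions into single steps, so a noisy trail becomes a path a person can scan. The sketch below is only an illustration of that idea, not a method from the DARN work or the xAPI specification; the `(verb, object)` event format and the function names are hypothetical.

```python
from itertools import groupby


def summarize_path(events):
    """Collapse runs of identical consecutive (verb, object) events.

    `events` is a time-ordered list of (verb, object) pairs, a simplified,
    hypothetical stand-in for the verb and object of recorded statements.
    Returns a list of (verb, object, count) steps.
    """
    return [(verb, obj, len(list(run))) for (verb, obj), run in groupby(events)]


def render(steps):
    """Format the summarized path as a one-line, human-readable trail."""
    return " -> ".join(
        f"{verb} {obj}" + (f" (x{n})" if n > 1 else "") for verb, obj, n in steps
    )


events = [
    ("opened", "spreadsheet"),
    ("edited", "cell"),
    ("edited", "cell"),
    ("edited", "cell"),
    ("searched", "help"),
    ("edited", "cell"),
    ("saved", "spreadsheet"),
]
steps = summarize_path(events)
print(render(steps))
# opened spreadsheet -> edited cell (x3) -> searched help -> edited cell -> saved spreadsheet
```

Real aggregation would likely need richer rules, such as time windows or grouping several verbs into one task, but even this run-length collapsing turns seven raw events into five readable steps.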

Reflection on action is a powerful learning and improvement process, but recollection isn’t as good as actual recording. The power of working out loud is really seen when those traces are left for examination. The API can support more than system mining (“oh look, everyone with this responsibility who touches this resource does way better than those who don’t”). Not that there’s anything wrong with that, but having performers do it too is a great opportunity not to be missed. As the work on protein folding has found, some patterns are better suited to computer solution, and others to human. We’d be remiss if we didn’t explore the opportunities to be found.