So I presented on innovation to the local ATD chapter a few weeks ago, and they did an interesting and nice thing: they got the attendees to document their takeaways. And I promised to write a blog post about it, and I’ve finally received the list of thoughts, so here are my reflections. As an aside, I’ve written separate articles on L&D innovation recently for both CLO magazine and the Litmos blog so you can check those out, too.

I started talking about why innovation was needed, and then what it was. They recalled that I pointed out that by definition an innovation is not only a new idea, but one that is implemented and leads to better results. I made the point that when you’re innovating, designing, researching, trouble-shooting, etc., you don’t know the answer when you start, so they’re learning situations, though informal, not formal. And they heard me note that agility and adaptation are premised on informal learning of this sort, and that the opportunity is for L&D to take up the mantle to meet the increasing need.

There was interest but some lack of clarity around meta-learning. I emphasized that learning to learn may be your best investment, but given that you’re devolving responsibility, you shouldn’t assume that individuals automatically possess optimal learning skills. The focus then becomes developing learning-to-learn skills, which necessarily happens in the context of some other topic. And, of course, it requires the right culture.

There were some terms they heard that they weren’t necessarily clear on, so per the request, here are the terms (from them) and my definition:

- Innovation by Design: here I mean deliberately creating an environment where innovation can flourish. You can’t plan for innovation, it’s ephemeral, but you can certainly create a felicitous environment.

- Adjacent Possible: this is a term Steven Johnson used in his book Where Good Ideas Come From, and my take is that it means that lateral inspiration (e.g. ideas from nearby: related fields or technologies) is where innovation happens, but it takes exposure to those ideas.

- Positive Deviance: the idea (which I heard of from Jane Bozarth) is that the best way to find good ideas is to find people who are excelling and figure out what they’re doing differently.

- Hierarchy and Equality: I’m not quite sure what they were referring to here (I think more along the lines of Husband’s Wirearchy versus hierarchy) but the point is to reduce the levels and start tapping into the contributions possible from all.

- Assigned roles and vulnerability: I’m even less certain what’s being referred to here (I can’t be responsible for everything people take away ;), but I could interpret this to mean that it’s hard to feel safe contributing if you’re in a hierarchy and are commenting on someone above you. Which again is an issue of safety (which is why I advocate that leaders ‘work out loud’, and it’s a core element of Edmondson’s Teaming; see below).

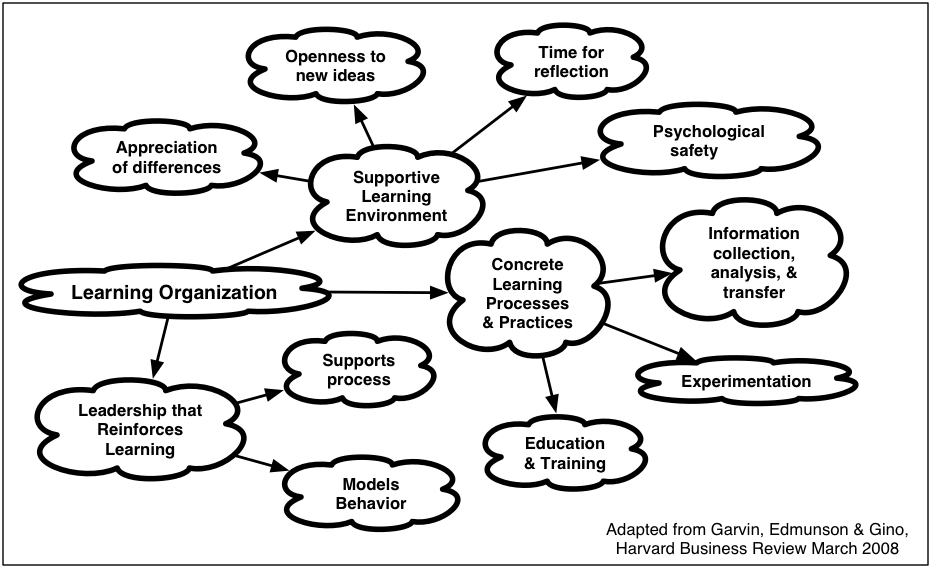

I used the Learning Organization Dimensions diagram (Garvin, Edmondson & Gino) to illustrate the components of a successful innovation environment, and these were reflected in their comments. A number mentioned psychological safety in particular as well as the other elements of the learning environment. They also picked up on the importance of leadership.

Some other notes that they picked up on included:

- best principles instead of best practices

- change is facilitated when the affected individuals choose to change

- brainstorming needs individual work before collective work

- that trust is required to devolve responsibility

- the importance of coping with ambiguity

One that was provided that I know I didn’t say because I don’t believe it, but is interesting as a comment:

“Belonging trumps diversity, and security trumps grit”

This is an interesting belief, and I think that’s likely the case if it’s not safe to experiment and make mistakes.

They recalled some of the books I mentioned, so here’s the list:

- The Invisible Computer by Don Norman

- The Design of Everyday Things by Don Norman

- My Revolutionize Learning and Development (of course ;)

- XLR8 by John Kotter (with the ‘dual operating system’ hypothesis)

- Teaming to Innovate by Amy Edmondson (I reviewed it)

- Working Out Loud by John Stepper

- Scaling Up Excellence by Robert I. Sutton and Huggy Rao (blogged)

- Organize for Complexity by Niels Pflaeging (though they heard this as a concept, not a title)

It was a great evening, and really rewarding to see that many of the messages stuck. So, what are your thoughts around innovation?

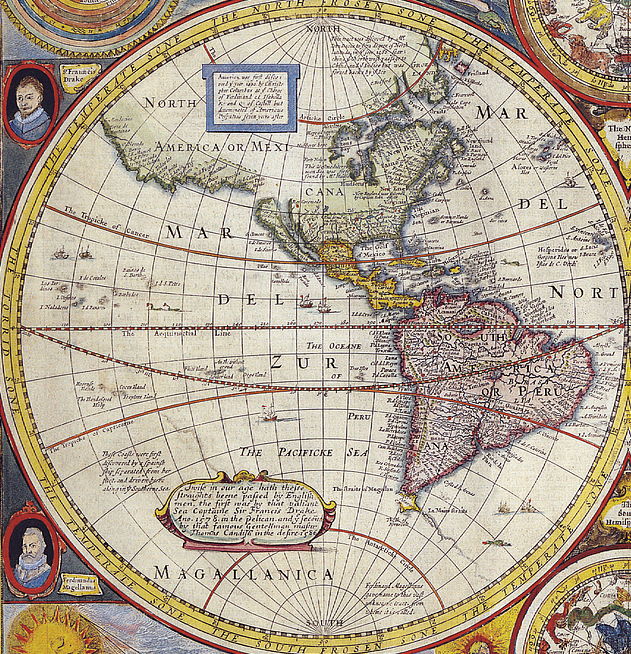

So, one of the first requirements was to have the necessary tools to explore. In the old days that could include means to navigate (chronograph, compass), ways to represent learnings/discoveries (map, journal), and resources (food, shelter, transport). It was necessary to get to the edge of the map, move forward, document the outcomes, and successfully return. This hasn’t changed in concept.
