At the recent DevLearn, Donald Clark talked about AI in learning, and while I largely agreed with what he said, I had some thoughts and some quibbles. I discussed them with him, but I thought I’d record them here, not least as a basis for a further discussion.

Donald’s an interesting guy, very sharp and a voracious learner, and his posts are both insightful and inciteful (he doesn’t mince words ;). Having built and sold an elearning company, he’s now free to pursue what he believes in, which currently is the power of technology to teach us.

As background, I was an AI groupie out of college, and have stayed current with most of what’s happened. And you should know a bit of the history of the rise of Intelligent Tutoring Systems, the problems with developing expert models, and current approaches like Knewton and Smart Sparrow. I haven’t been free to follow the latest developments as much as I’d like, but Donald gave a great overview.

He pointed to systems being on the verge of auto-parsing content and developing learning around it. He showed an example in which a system generated questions from a page about Las Vegas that had been dropped in. He also showed how systems can adapt individually to the learner, and discussed how they could provide individual tutoring without many of the limitations of teachers (cognitive bias, fatigue), and could not only personalize but also self-improve and scale!

One of my short-term problems was that the auto-generated questions were about knowledge, not skills. While I do agree that knowledge is needed (à la Van Merriënboer’s 4C/ID) as well as applying it, I think focusing on the latter first is the way to go.

This goes along with what Donald has rightly criticized as problems with multiple-choice questions. He points out how they’re largely used as knowledge tests, and I agree that’s wrong, but while there are better practice situations (read: simulations/scenarios/serious games), you can write multiple-choice questions as mini-scenarios and get good practice. However, to me it’s as yet an interesting research problem to get good scenario questions out of auto-parsing content.

I naturally argued for a hybrid system, where we divvy up roles between computer and human based upon what we each do well, and he said that is what he is seeing in the companies he tracks (and funds, at least in some cases). A great principle.

The last bit that interested me was whether and how such systems could develop not only learning skills, but meta-learning or learning-to-learn skills. Real teachers can develop this and modify it (though admittedly that’s rare), and yet it’s likely to be the best investment. In my activity-based learning, I suggested that gradually learners should take over choosing their activities, to develop their ability to become self-learners. I’ve also suggested how it could be layered on top of regular learning experiences. I think this will be an interesting area for developing learning experiences that are scalable but truly develop learners for the coming times.

There’s more: pedagogical rules, content models, learner models, etc., but we’re finally getting close to being able to build these sorts of systems, and we should be aware of what the possibilities are, understand what’s required, and be on the lookout for both the good and bad on tap. So, what say you?

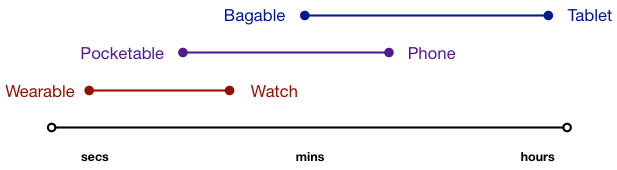
