I was thinking (one morning at 4AM, when I was wishing I was asleep) about designing assignment structures that matched my activity-based learning model. And a model emerged that I managed to recall when I finally did get up. I’ve been workshopping it a bit since, tuning some details. No claim that it’s there yet, by the way.

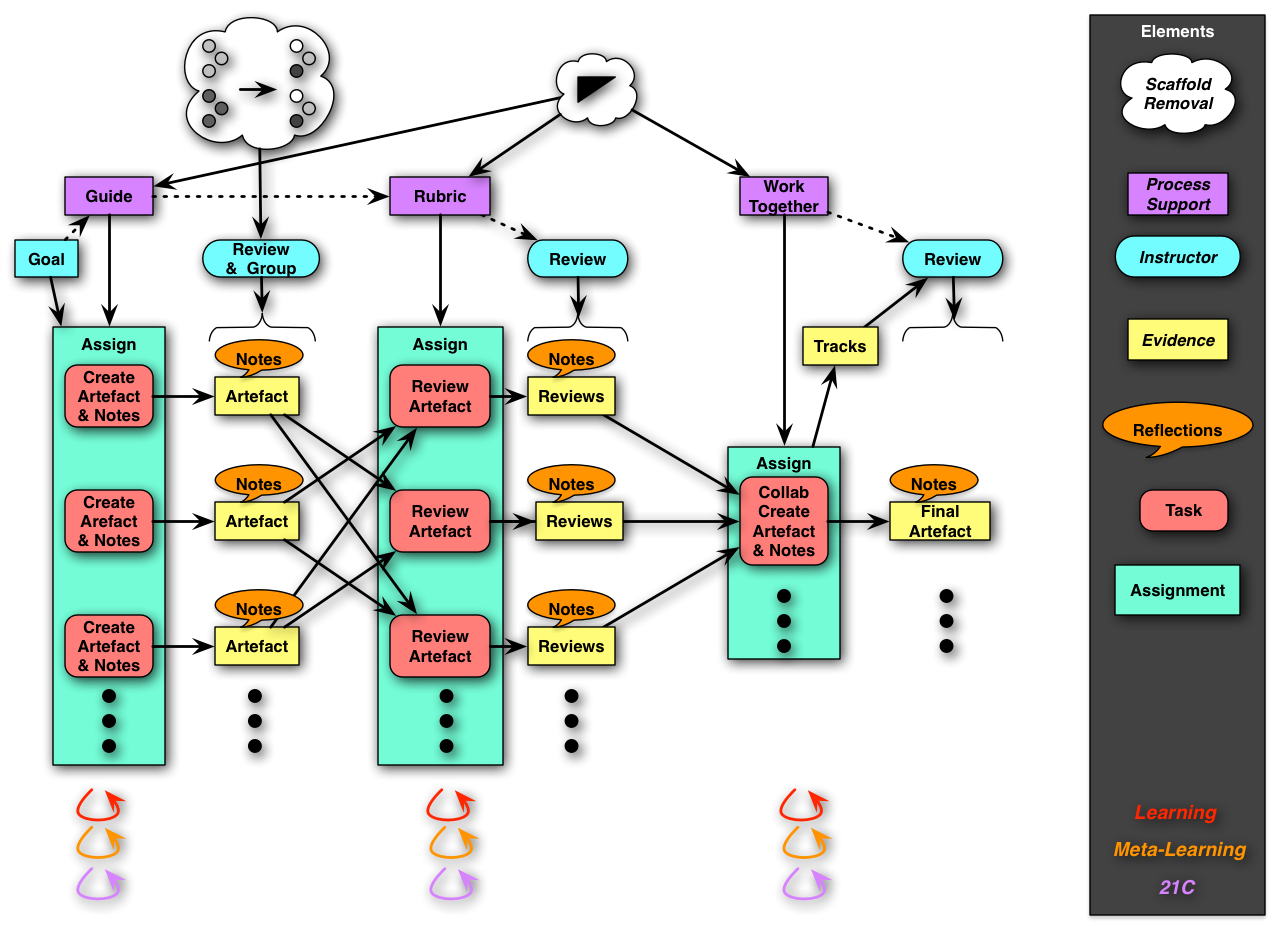

And I’ll be the first to acknowledge that it’s complex, as the diagram represents, but let me tease it apart for you and see if it makes sense. I’m trying to integrate meaningful tasks, meta-learning, and collaboration. And there are remaining issues, but let’s get to the model first.

So, it starts by assigning the learners a task to create an artefact. (Spelling intended to convey that it’s not a typical artifact, but instead a created object for learning purposes.) It could be a presentation, a video, a document, or what have you. The learner is also supposed to annotate their rationale for the resulting design. And, at least initially, there’s a guide to principles for creating an artefact of this type. There could even be a model presentation.

The instructor then reviews these outputs, and assigns each learner several others’ artefacts to review. Here it’s represented as 2 others, but it could be 4. The point is that the group size is the constraining factor.

And, again at least initially, there’s a rubric for evaluating the artefacts to support the learner. There could even be a video of a model evaluation. The learner writes reviews of the two artefacts, and annotates the underlying thinking that accompanies and emerges. And the instructor reviews the reviews, and provides feedback.
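The review assignment step could be sketched in code. This is only a minimal illustration, assuming a simple rotation where each learner reviews the next few learners in a circular list; the function name `assign_reviews`, the parameter `k`, and the sample names are all hypothetical, and the model itself doesn’t prescribe how the instructor picks who reviews whom.

```python
def assign_reviews(learners, k=2):
    """Give each learner the artefacts of the next k learners in a circular order.

    Hypothetical helper: the rotation scheme is an assumption for illustration,
    not part of the model. Learner names are assumed to be unique.
    """
    n = len(learners)
    # The group size is the constraining factor: nobody can review
    # more artefacts than there are other learners in the group.
    if not 1 <= k < n:
        raise ValueError("k must be at least 1 and smaller than the group size")
    return {
        learner: [learners[(i + offset) % n] for offset in range(1, k + 1)]
        for i, learner in enumerate(learners)
    }


assignments = assign_reviews(["Ana", "Ben", "Chen", "Dee", "Eli"], k=2)
print(assignments["Eli"])  # ['Ana', 'Ben']
```

With a rotation like this, nobody reviews their own artefact, and every artefact gets the same number of reviews. Shuffling the list first would vary the pairings from task to task.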

Then, the learner joins with other learners to create a joint output, intended to be better than each individual submission. Initially, at least, the learners will likely be grouped with others whose artefacts are similar. This step might seem counterintuitive, but while ultimately learners will be grouped with those whose artefacts differ widely, initially the assignment is lighter to allow time to come to grips with the actual process of collaborating (again with a guide, at least initially). Finally, these joint artefacts are evaluated, perhaps even shared with all.

Several points to make about this. As indicated, the support is gradually faded. While another task might use another artefact, so the guides and rubrics will change, the working-together guide can first move to higher and higher levels (e.g. starting with “everyone contributes to the plan”, and ultimately getting to “look to ensure that all are being heard”) and then gradually be removed. And the assignment to different groups goes from alike to as widely disparate as possible. And the tasks should eventually get back to the same type of artefact, developing those 21st Century skills about different representations and ways of working. The model is designed more for a long-term learning experience than a one-off event model (which we should be avoiding anyway).

The artefacts and the notes give the instructor evidence of not only the learner’s domain knowledge (and gaps), but also their understanding of the 21st Century Skills (e.g. the artefact-creation process, and working and researching and…), and their learning-to-learn skills. Moreover, if collaborative tools are used for the co-generation of the final artefact, there are traces of the contribution of each learner to serve as further evidence.

Of course, this could continue. If it’s a complex artefact (such as a product design, not just a presentation), there could be several revisions. This is just a core structure. And note that this is not for every assignment. This is a major project, around or in conjunction with which other, smaller things, like formative assessment of component skills and presentation of models, may occur.

What emerges is that the learners are learning about the meta-cognitive aspects of artefact design, through the guides. They are also meta-learning in their reflections (which may also be scaffolded). And, of course, the overall approach is designed to get the valuable cognitive processing necessary to learning.

There are some unresolved issues here. For one, it could appear to be a heavy load on the instructor. It’s essentially impossible to auto-mark the artefacts, though the peer review could remove some of the load, requiring only oversight. For another, it’s hard to fit into a particular time-frame. So, for instance, this could take more than a week if you give a few days for each section. Finally, there’s the issue of assessing individual understanding.

I think this represents an integration of a wide spread of desirable features in a learning experience. It’s a model to shoot for, though it’s likely that not all elements will initially be integrated. And, as yet, there’s no LMS that’s going to track the artefact creation across courses and support all aspects of this. It’s a first draft, and I welcome feedback!
