So, a few weeks ago I ran a survey asking about elearning processes*, and now that I've closed it, it's time to look at the results. eLearning process is something I'm suggesting is ripe for change, and I thought it appropriate to see what people thought. Some caveats: it's self-selected, it's limited (23 respondents), and the respondents are likely readers of this blog or of the other folks who pointed to it, so it's a select group. With those caveats, what did we see?

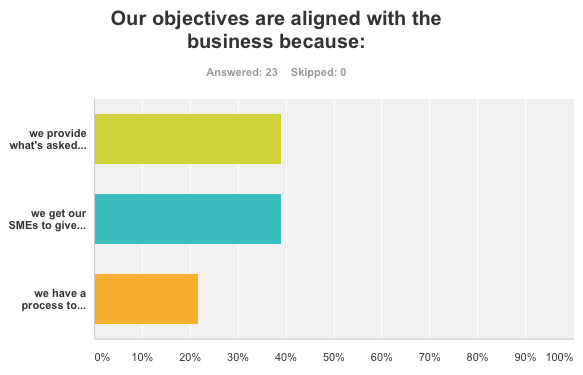

The first question looked at how we align our efforts with business needs. The alternatives were 'providing what's asked for' (i.e. taking orders), 'getting from SMEs', and 'using a process'. These are clearly in ascending order of appropriateness. Order taking doesn't allow for checking whether a course is needed, and SMEs can't tell you what they actually do. Creating a process to ensure a course is the best solution (as opposed to a job aid or going to the network), and then getting the real performance needs (by triangulating), is optimal. What we see, however, is that only a bit more than 20% are getting this right from the get-go, and almost 80% are failing at one of the two points along the way.

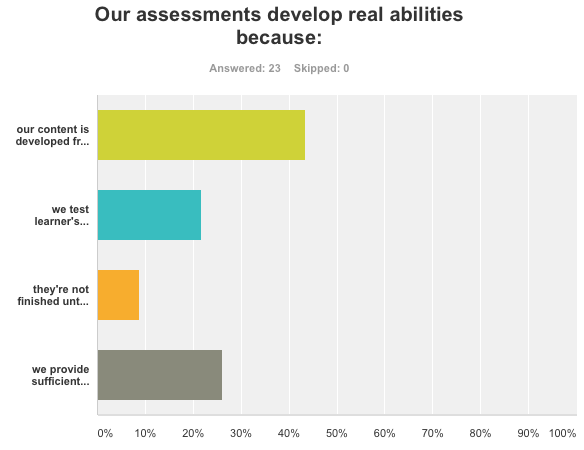

The second question asked how the assessments were aligned with the need. The options ranged from 'developing from good sources', through 'we test knowledge' and 'they have to get it right', to 'sufficient spaced contextualized practice', i.e. 'til they can't get it wrong. The clear need, if we're bothering to develop learning, is to ensure that they can do it at the end. Practicing only 'until they get it right' isn't sufficient to develop a new ability to do. And we see more than 40% are focusing on using the existing content! Now, the alternatives were not totally orthogonal (e.g. you could have the first response and any of the others), so interpreting this is somewhat problematic. I assumed people would know to choose the lowest applicable option in the list, but I can't be sure they did (a flaw in the survey design). Still, it's pleasing to see that almost 30% are doing sufficient practice, but that's only a wee bit ahead of those who say they're just testing knowledge! So it's still a concern.

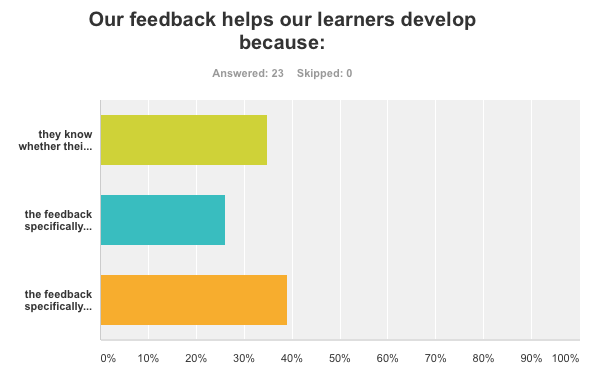

The third question looked at the feedback provided. The options included 'right or wrong', 'provides the right answer', and 'indication for each wrong answer'. I've been railing against using one piece of feedback for all the wrong answers for years now, and it's important. The alternatives to the right answer shouldn't be random, but instead should represent the ways learners typically get it wrong (based upon misconceptions). It's nice (and I admit somewhat surprising) that almost 40% are actually providing feedback that addresses each wrong answer. That's a very positive outcome. However, that it's still not even half is concerning.

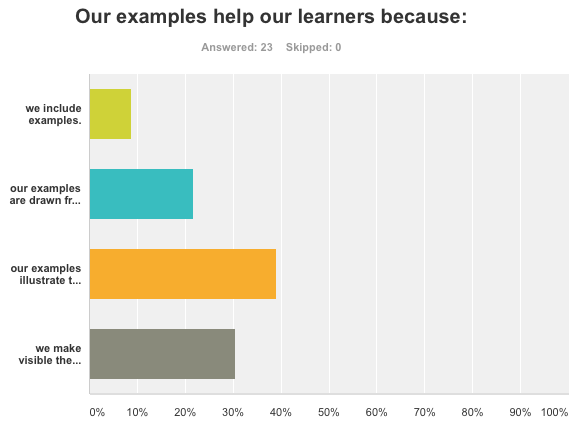

The fourth question digs into the issue of examples. There are many nuances to examples, and here I was probing a few of them. The options ranged from 'having', through 'coming from SMEs' and 'illustrate the concept and context', to 'showing the underlying thinking'. Again, obviously the latter is the best. It turns out that experts don't typically show the underlying cognition, and yet it's really valuable for learning. We see that we are getting the link of concept to context clear, and together with showing the thinking we're nabbing roughly 70% of the examples, so that's a positive sign.

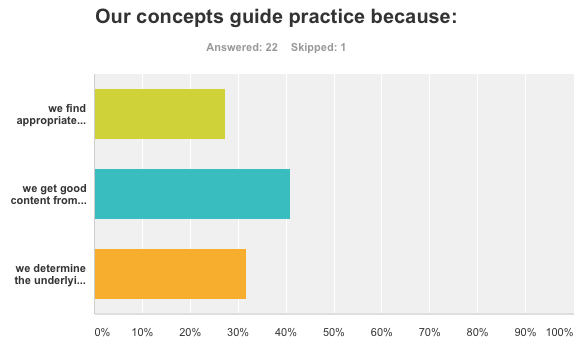

The fifth question asks about concepts. Concepts are (or should be) the models that guide performance in the contexts seen across examples and practice (and the basis for the aforementioned feedback). The alternatives ranged from 'using good content' and 'working with SMEs' to 'determining the underlying model'. It's the latter that serves as the basis for making better decisions going forward. (I suggest that what will help orgs is not the ability to receive knowledge, but the ability to make better decisions.) And we see over 30% going to those models, but still a high percentage taking the presentations from the SMEs. That isn't totally inappropriate, as SMEs do have access to what they learned. I'm somewhat concerned overall that much of ID seems to talk about practice and 'content', lumping intros, concepts, examples, and closing all together into the latter (without suitable differentiation), so this was better than expected.

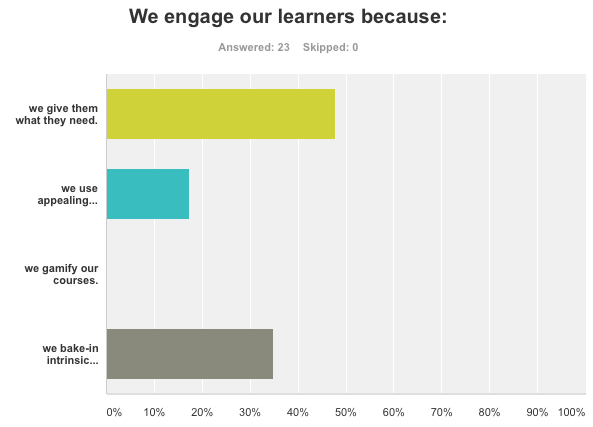

The sixth question tapped into the emotional side of learning: engagement. The options were 'giving learners what they need', 'a good look', 'gamification', and 'tapping into intrinsic motivation'. I've been a big proponent of intrinsic motivation (heck, I effectively wrote a book on it ;), and not of gamification. I think an appealing visual design matters, but just 'giving them what they need' isn't sufficient for novices: they need the emotional component too. For practitioners, of course, not so much. I'm pleased that no one chose gamification (though the success of companies that sell 'tart up' templates suggests that this isn't the norm). Still, more than a third are going to intrinsic motivation, which is heartening. There's a ways to go, but some folks are hearing the message.

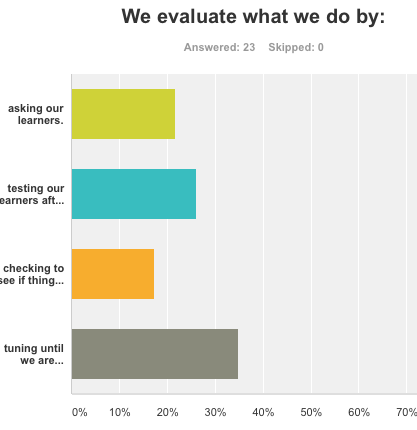

The last question gets into measurement. We should be evaluating what we do. Ideally, we start from a business metric we need to address and work backward. That's typically not seen. The options basically covered the Kirkpatrick model, working from 'smile sheets', through 'testing after the learning experience' and 'checking changes in workplace behavior', to 'tuning until impacting org metrics'. I was pleasantly surprised to see over a third doing the latter, though my results don't parallel what I've seen elsewhere. I'm dismayed, of course, that over 20% are still just asking learners, which we know in general isn't of particular use.

This was a set of questions deliberately digging into areas where I think elearning falls down, and (at least with this group of respondents) it's not as good as I'd hoped, but not as bad as I feared. Still, I'd suggest there's room for improvement, particularly given the caveats above about who the likely respondents are. I suspect it's not a representative sample.

Clearly, there are ways to do well, but it's not trivial. I'm arguing that we can do good elearning without breaking the bank, but it requires an understanding of the inflection points in the design process where small changes can yield important results. And it requires an understanding of the deeper elements to develop the necessary tools and support. I have been working with several organizations to make these improvements, but it's well past time to get serious about learning and start having a real impact.

So over to you: do you see this as a realistic assessment of where we are? And do you take the overall results as indicating a healthy industry, or an industry that needs to go beyond haphazard approaches and start practicing Learning Engineering?

*And, let me say, thanks very much to those respondents who took the time to respond. It was quick, but still, the effort is much appreciated.