Perhaps inspired by Apple’s focus on Augmented Reality (AR), I thought I’d take a stab at conveying the types of things that could be done to support both learning and performance. I took a sample of some of my photos and marked them up. I’m sure there’s lots more that could be done (there were some great games), but I’m focusing on simple information that I would like to see. It’s mocked up (the arrows are hand drawn), so understand I’m talking concept here, not execution!

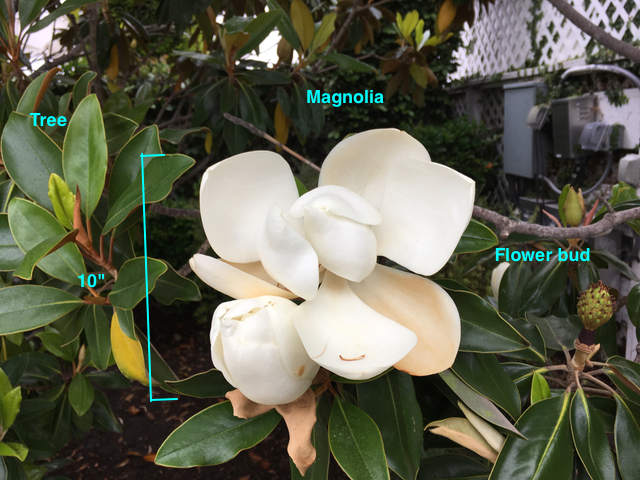

Here, I’m starting small. This is a photo I took of a flower on a walk, marked up with the type of information I might want while viewing the flower through the screen (or glasses). The system could tell me that, technically, it’s a tree, not a bush (thanks to my flora-wise better half). It could also illustrate how large it is. Finally, the view could indicate that what I’m viewing is a magnolia (which I wouldn’t have known), and show me, off to the right, the flower at its bud stage.

The point is that we can get information around the particular thing we’re viewing. I might not actually care about the flower bud, so that might be filtered out, and the overlay might instead talk about any medicinal uses. It could also be dynamic, animating the process of going from bud to flower and falling off. It could talk about the types of animals (bees, hummingbirds, ?) that interact with it, and how. What it shows would depend on what I want to learn, perhaps with some additional incidental information on the periphery of my interests, for serendipity.

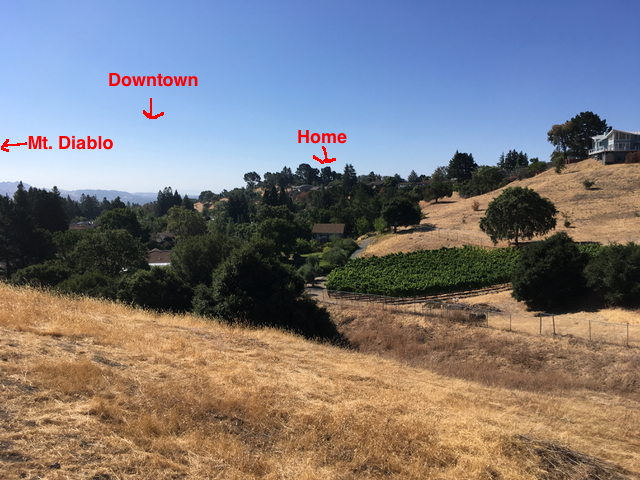

Going wider, here I’m looking out at a landscape, and the overlay is providing directions. Downtown is straight ahead, my house is over that ridge, and infamous Mt. Diablo is off to the left of the picture. It could do more, pointing out that the green ridges are grapes, or providing the name of the neighborhood that’s in the foreground (I call it Stepford Downs, after the movie ;).

Dynamically, of course, if I moved the camera to the left, Mt. Diablo would get identified when it sprang into view. As we moved around, the overlay would point to the neighboring towns in view, and in the direction of further towns blocked by mountain ranges. It could also identify the river flowing past to the north. And we could instead focus on other information: infrastructure (pipes and electricity), government boundaries, whatever’s relevant could be filtered in or out.

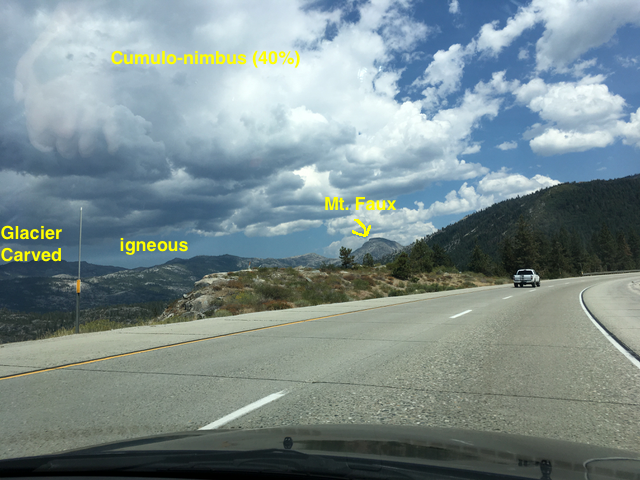

And in this final example, taken from the car on a trip, AR might indicate some natural features. Here I’ve pointed to the clouds (and indicated the likelihood of rain). Similarly, I’ve identified the rock and the mechanism that shaped it. (These are all made up and could be wrong; Mt. Faux definitely is!) We might even be able to touch a label and have it expand.

Similarly, as we moved, information would change as we viewed different areas. We might even animate what the area looked like hundreds of thousands of years ago and how it’s changed. Or we could illustrate coming changes. It could instead show boundaries of counties or parks, types of animals, or other relevant information.

The point here is that annotating the world, a capability AR has, can be an amazing learning tool. If I can specify my interests, the system can capitalize on them to help me develop. And this is as an adult. Think about doing this for kids, layering on information in their Zone of Proximal Development and interests! I know VR’s cool, and has real learning potential, but there you have to create the context. Here we’re taking advantage of the context that’s already there. That may be harder, but it’s going to have some real upsides when it can be done ubiquitously.
