A few years ago, I had a ‘debate’ with Will Thalheimer about the Kirkpatrick model (you can read it here). In short, he didn’t like it, and I did, for different reasons. However, the situation has changed, and it’s worth revisiting the issue of evaluation.

In the debate, I was lauding how Kirkpatrick starts with the biz problem and works backwards. Will argued that the model didn't really evaluate learning. I replied that its role wasn't evaluating the effectiveness of the learning design on the learning outcome; it was assessing the impact of the learning outcome on the organizational outcome.

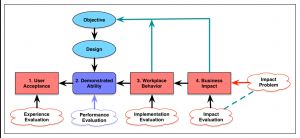

Fortunately, this discussion is now resolved. Will, to his credit, has released his own model (while unearthing the origins of Kirkpatrick's work in Katzell's). His model is more robust, with eight levels. This isn't overwhelming, as you can ignore some. Helpfully, there are indicators as to what's useful and what's not!

It's not perfect. Kirkpatrick (or Katzell? :) can relatively easily be used for other interventions (incentives, job aids, … tho' you might not know it from the promotional material). It's not so obvious how to do so with his new model. However, I reckon it's more robust for evaluating learning interventions. (Caveat: I may be biased, as I provided feedback.) And should he have numbered the levels in reverse, which Kirkpatrick admitted might've been a better idea?

Evaluation is critical. We do some, but not enough. (Smile sheets, level 1, where we ask learners what they think of the experience, have essentially zero correlation with outcomes.) We need to do a better job of evaluating our impact (not just our efficiency). This is a more targeted model, and I commend it to your attention.

I like the set-up of this model. Do you plan on expanding on the different steps/processes to give more insight into adopting something like this?

Jake, I don't understand. I won't go into Will's model; he's done that. I went into my model in the debate. Check either or both out.

Thanks Clark! It’s always wonderful to get your wisdom! Interestingly, I may be on the cusp of flipping the model as you suggest… Others have also mentioned this, quite strongly at the recent ISPI conference… Greatly appreciate our long collaboration!

Don Kirkpatrick’s 4-level model was and is sufficient. Someday there may be profound, game-changing, developments. That day has not yet come.

Interesting that you say that. A number of others disagree, including a "seminal research review from a top-tier scientific journal" that Will cites in the debate linked in the post, Donald Clark here, and the list goes on. Still, assuming you start with level 4, and it works for you, no worries.

There seems to be very little evidence supporting the statement that evaluation is critical, since it is so rarely done well, if at all. Results are almost always anecdotes or unreliable, subjective opinion scales (with the occasional exception of Kirkpatrick level 2 learning measures, and even these are rarely examined for reliability and validity). Transfer and impact evaluations are even more likely to be faith-based: when are countable or measurable results actually collected and analyzed, and a causal, or even correlational, connection to training completion identified? Evaluation can produce customer feedback that can be used to improve the content, effectiveness, or delivery of the training, if it is heard and analyzed, but actually listening to what users say is hard.