At the Learning Guild’s recent Learning & HR Tech Solutions conference, the hot topic was AI. To the point where I was thinking we need a ‘contrarian’ AI event! Not that I’m against AI (I mean, I’ve been an AI groupie for literal decades); I think it’s got well-documented upsides. (And downsides.) Right now, however, I feel that there’s too much hype, and I’m waiting for the trough of disillusionment to hit, à la the Gartner hype cycle. In the meantime, though, I really liked what Markus Bernhardt was saying in his session. It’s about viewing AI as a system, though of course I had a pedantic update to it ;).

So, Markus’ point was that we should separate out data from the processing method. Markus presented a simple model to think about AI that I liked. In it, he proposed three pieces that I paraphrase:

- the information you use as your basis

- the process you use with the information

- and the output you achieve

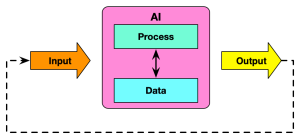

Of course, I had a quibble, and ended up diagramming my own way of thinking about it. Really, it only adds one thing to his model, an input! Why?

So I have the AI system containing the process and data it operates on. I like that separation, because you can use the same process on other data, or vice versa. As the opening keynote speaker, Maurice Conti, pointed out, the AI’s not biased, the data is. Having good data is important. As is having the right process to achieve the results you want (that is, a good match between problem and process; the results are the results ;). Are you generating or discriminating, for instance? Then Markus said you get the output, which could be prose, an image, a decision, …

However, I felt that it’s important to also talk about the input. What you input determines the output. With different queries, for instance, you can get different results. That’s what prompt engineering is all about! Moreover, your output can then be part of the input in an iterative step (particularly if your system retains some history). Thus, thinking about the input separately is, to me, a useful conceptual extension.
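For the code-minded, here is a toy sketch of that picture in Python. To be clear, this is my own illustration and not anything Markus presented: the names `AISystem`, `keyword_lookup`, and the sample data are all hypothetical, and the "process" is a trivial word match standing in for a real model. It just shows the four pieces (input, process, data, output) kept separate, with history retained so an output can feed a later input.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# All names here are hypothetical, made up for illustration only.
# A "process" takes (input, data, history) and returns an output string.
Process = Callable[[str, List[str], List[str]], str]


@dataclass
class AISystem:
    data: List[str]          # the information used as the basis
    process: Process         # what is done with that information
    history: List[str] = field(default_factory=list)

    def run(self, user_input: str) -> str:
        output = self.process(user_input, self.data, self.history)
        # Retain the exchange so later inputs can build on earlier outputs.
        self.history.extend([user_input, output])
        return output


def keyword_lookup(user_input: str, data: List[str], history: List[str]) -> str:
    """Toy process: return the data items that share a word with the input."""
    words = set(user_input.lower().split())
    hits = [item for item in data if words & set(item.lower().split())]
    return "; ".join(hits) if hits else "no match"


system = AISystem(data=["cats purr", "dogs bark"], process=keyword_lookup)
first = system.run("tell me about cats")   # one input
second = system.run("what about dogs")     # a different input, same system
again = system.run(first)                  # an earlier output reused as input

# Same process, different data.
other = AISystem(data=["cats nap"], process=keyword_lookup)
swapped = other.run("tell me about cats")
```

Changing only the input changes the output, and swapping the data while keeping the process does too, which is the separation I’m arguing for.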

It may seem a trivial addition, but I think it helps to think about how to design inputs. Just as we align process and data to the task, we need to make sure that the input matches the process to get the best output. So, maybe I’m overcomplicating thinking about AI as a system. What say you?
