Ok, so I shared that I was trying to visualize the generative process (which, to be fair, is also known as elaboration). And I did take a stab, so I thought I’d share what I came up with for generative visualized. Two reasons: 1) for you to quibble with what I came up with, and 2) learning out loud. I reckon if you can see my process, you may learn, you may improve it, all the good things that happen from sharing. So…

First, I was looking to do a higher build than the neural level. Yes, at the neural level we’re strengthening patterns across neural networks. We’re taking two patterns and putting them into conjunction, basically, activating both. That activating in conjunction strengthens the links between them, associating them more. (I’ve a diagram on that, actually, emphasizing that they need time to recover before the next strengthening. If you’re interested, let me know and I’ll share that, too.) But the way we activate patterns is by using words or images, semantically. So, I was looking for words.

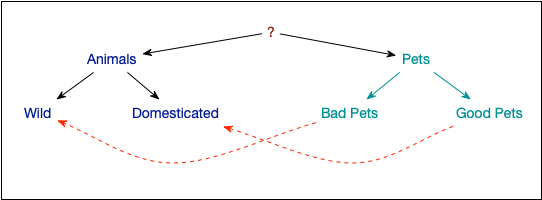

The words also should be ideas that are familiar enough that I’m not requiring knowledge you don’t have. Further, I need to be making associations that you’d get. So, something familiar. In this case, animals and pets. (Perhaps that’s salient to me, for some reason.) And I needed a relationship between them. I chose, rightly or wrongly, the notion that wild animals don’t make good pets. So, what’s this look like?

Now, I’m showing the final build, not the whole process. You start with something you’re talking about, like how there are good and bad pets (“do raccoons make good pets?”). And you ask the learners to find some personal or conceptual basis for that distinction. They can find examples, like that good pets are domesticated animals, and bad pets are wild, drawing on their previous knowledge. Or, they might talk about someone who had a bad pet (“my friend’s parents had this pet monkey, but it was always doing bad things”). Of course, pets are a subset of animals, so this becomes confusing at the top level, but that’s okay, as the structures are similar but not exactly the same.

The point is that they’re generating this information, actively connecting their own pre-existing knowledge to the information you’d like them to acquire. It’s causing them to elaborate, and so strengthening the links in their mind. Which is what we want. So, that’s my attempt to capture generative visualized. Does it work, or do you have ideas to improve or replace?

FWIW, this is part of what I’ll be presenting at the LDA’s Learning Science Conference. It opens Nov 3 for reviewing the presentations and discussing them, with live sessions with the presenters Dec 8-12. Same format as last year (with recognition of that in pricing, for those who attended), but with some new content and presenters. It’s still the things we think you need to be an informed learning designer.
