I was asked, recently, about how to get execs to look more favorably on L&D initiatives. And, I discover, I’ve talked about this before, more than 15 years ago. And we’re still having the conversation. So, it appears we’re still struggling with making the case. Maybe there’s another way?

So, there were two messages. Briefly, I heard this:

the difficulty lies in shifting behaviours and mindsets around how people perceive the L&D role and its function(s); particularly when advocating for a transition towards evidence-based approaches and best practices.

Then this response:

[we] are just considered a bunch of lowly content creators who are given SMEs to create courses with no real access to end learners and with no scope of reaching out to anyone else. We are supposed to create all singing and dancing content which will hopefully change behaviour and make some impact.

Both are absolutely tragic situations! We shouldn’t have to fight to use evidence-informed approaches, and we shouldn’t be expected to create courses without having an opportunity to do research (conversations and more). Why would you want to do things in a vacuum according to outdated beliefs? It’s maniacal.

Now, my earlier screed posited making sure that the executives were aware of the tradeoffs. In general, the model I believe has been validated is one that says people need to see the alternatives before choosing one. In this case, they really aren’t aware of the costs, largely for one reason. Folks don’t measure the impact of learning interventions! That’s not true everywhere, of course, but just asking if people liked it is worthless, and even asking if they thought it had an impact has pretty much zero correlation with actual value. You have to do more. Our colleague Will Thalheimer has been one of the foremost proponents of this. In his recent tome, talking to org execs, he argues why you should. But that’s still the theoretical argument.

Sadly, if you don’t measure, you don’t have evidence. And, I’ll argue, you can’t look elsewhere, because I’ve tried. I have regularly looked for articles that cite research about whether training investments pay off. Beer and colleagues mentioned a meta-analysis that showed only 10% of investment showed a return, but…they didn’t cite the study and haven’t responded to a request for more data. So, I’ve been trying to think of another way.

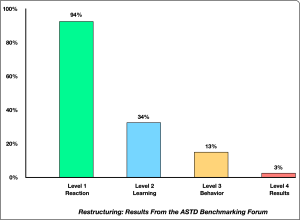

Recently, it occurred to me that the measurement itself might be a mechanism. So, ATD had data on the use of evaluation, and most everybody was saying they did Kirkpatrick Level 1 (did they like it), but as mentioned, that’s not useful. A third did Kirkpatrick Level 2, which checks whether learners can perform after the course. Too often, however, that can be knowledge checks, not actual performance. Only an eighth actually looked for change in workplace behavior (Level 3), and almost no one checked whether there was an org impact (Level 4). One wrinkle is that this totals more than 100%, so clearly some folks were doing more than one level.

However, if we take the final two and add them, 13% and 3%, we get 16%, which means at best, 84% of folks aren’t measuring. Which means they’re likely not getting any results. So, a cynical view would say that 84% of efforts aren’t returning value! Now, to caveats. For one, the data is old; I don’t have an exact date but it precedes my book on strategy, so it’s at least before 2014. And, of course, we could be doing better (though the above quotes might argue otherwise). Also, maybe some of those unmeasured approaches actually are working. Who knows?
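To make that back-of-the-envelope arithmetic explicit, here is a minimal sketch in Python. The function name is hypothetical, and it assumes the 13% and 3% figures are shares of the same pool of respondents, which the data as quoted doesn’t confirm. Since the survey totals exceed 100%, some of the folks checking org impact may also be checking behavior, so the sketch brackets the answer between the no-overlap and full-overlap cases.

```python
def unmeasured_bounds(behavior_pct, impact_pct):
    """Return (low, high) bounds on the percent measuring neither
    workplace behavior nor org impact.

    Assumption: both percentages describe the same set of respondents.
    """
    # No overlap: the two groups are entirely different organizations,
    # so the share measuring something is as large as it can be.
    most_measuring = min(100, behavior_pct + impact_pct)
    # Full overlap: the smaller group sits entirely inside the larger one,
    # so the share measuring something is as small as it can be.
    fewest_measuring = max(behavior_pct, impact_pct)
    return 100 - most_measuring, 100 - fewest_measuring


low, high = unmeasured_bounds(13, 3)
print(f"Not measuring behavior or org impact: {low}% to {high}%")
```

With the figures above, this gives a range of 84% to 87%, so 84% really is the best case.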

Still, I take this as a strong case that we’re still wasting money on L&D. Now, I’ve argued that you should have a collection of arguments: data, theory, examples, your personal experience, their personal experience, and perhaps what the competition is doing. Then, you present what works for them at the moment. Or you can (and should) do stealth evaluation. Find performance data, work with eager adopters, whatever it takes. But worst case, you might use the above argument to show that it’s not being measured, and, as the saying goes, “what’s measured matters”. I’ll suggest that this may be one way of making the case. I welcome hearing others, or better yet, actual real research! But we have to find some traction to get better, for ourselves and our colleagues.
