In preparation for a presentation, I was reviewing my mobile models. You may recall I started with my 4C‘s model (Content, Compute, Communicate, & Capture), and have mapped that further onto Augmenting Formal, Performance Support, Social, & Contextual. I’ve refined it as well, separating out contextual and social as different ways of looking at formal and performance support. And, of course, I’ve elaborated it again, and wonder whether you think this more detailed conceptualization makes sense.

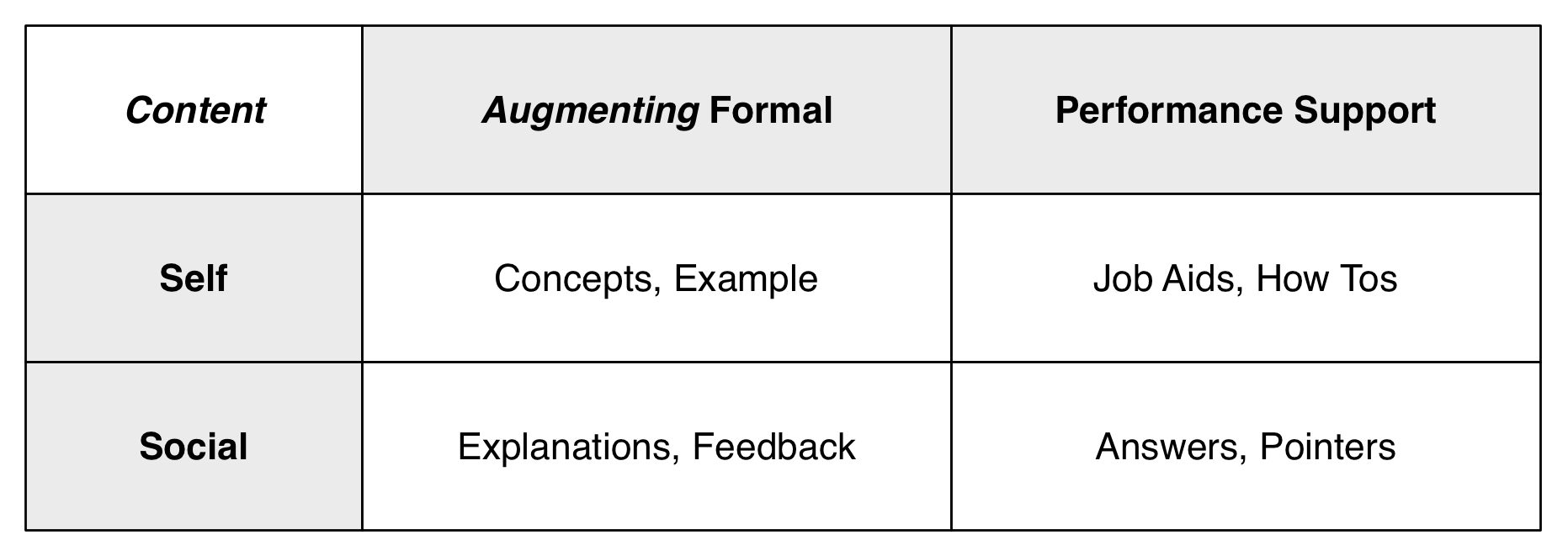

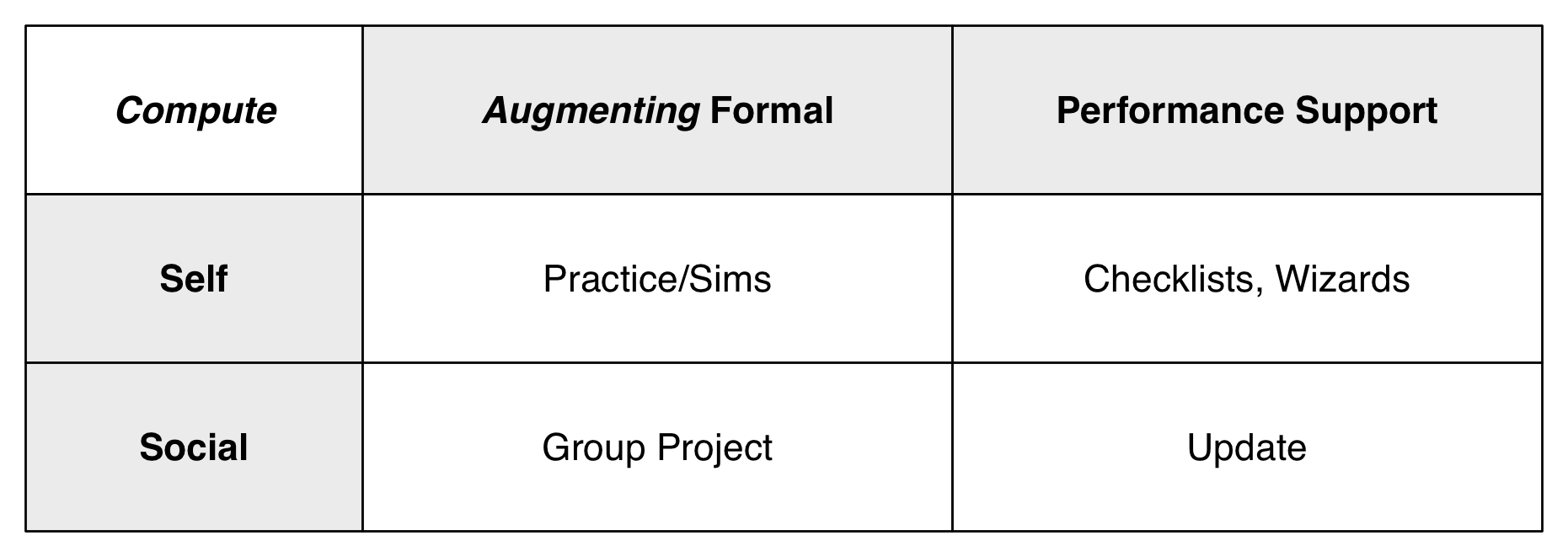

So, my starting point was realizing that it wasn’t just content. That is, there’s a difference between compute and content where the interactivity was an important part of the 4C’s, so that the characteristics in the content box weren’t discriminated enough. So the new two initial sections are mlearning content and mlearning compute, by self or social. So, we can be getting things for an individual, or it can be something that’s socially generated or socially enabled.

So, my starting point was realizing that it wasn’t just content. That is, there’s a difference between compute and content where the interactivity was an important part of the 4C’s, so that the characteristics in the content box weren’t discriminated enough. So the new two initial sections are mlearning content and mlearning compute, by self or social. So, we can be getting things for an individual, or it can be something that’s socially generated or socially enabled.

The point is that content is prepared media, whether text, audio, or video. It can be delivered or accessed as needed. Compute, interactive capability, is harder, but potentially more valuable. Here, an individual might actively practice, have mixed initiative dialogs, or even work with others or tools to develop an outcome or update some existing shared resources.

The point is that content is prepared media, whether text, audio, or video. It can be delivered or accessed as needed. Compute, interactive capability, is harder, but potentially more valuable. Here, an individual might actively practice, have mixed initiative dialogs, or even work with others or tools to develop an outcome or update some existing shared resources.

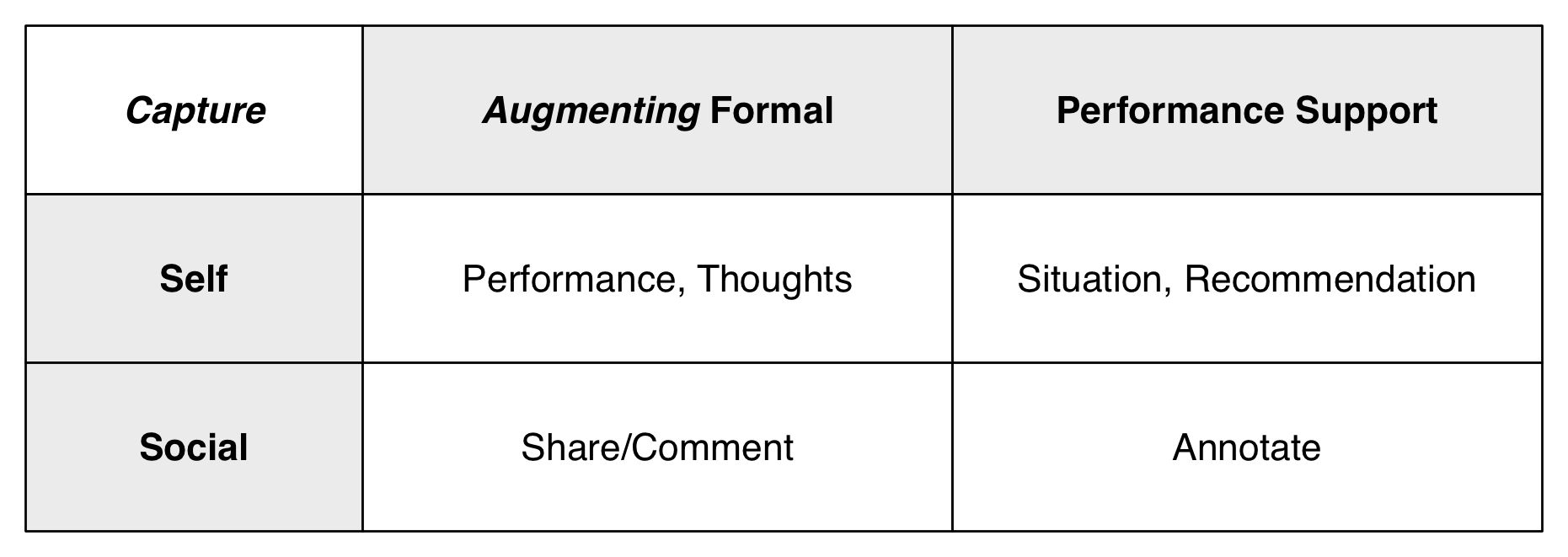

Things get more complex when we go beyond these elements. So I had capture as one thing, and I’m beginning to think it’s two: one is the capture of current context and keeping sharing that for various purposes, and the other is the system using that context to do something unique.

Things get more complex when we go beyond these elements. So I had capture as one thing, and I’m beginning to think it’s two: one is the capture of current context and keeping sharing that for various purposes, and the other is the system using that context to do something unique.

To be clear here, capture is where you use the text insertion, microphone, or camera to catch unique contextual data (or user input). It could also be other such data, such as a location, time, barometric pressure, temperature, or more. This data, then, is available to review, reflect on, or more. It can be combinations, of course, e.g. a picture at this time and this location.

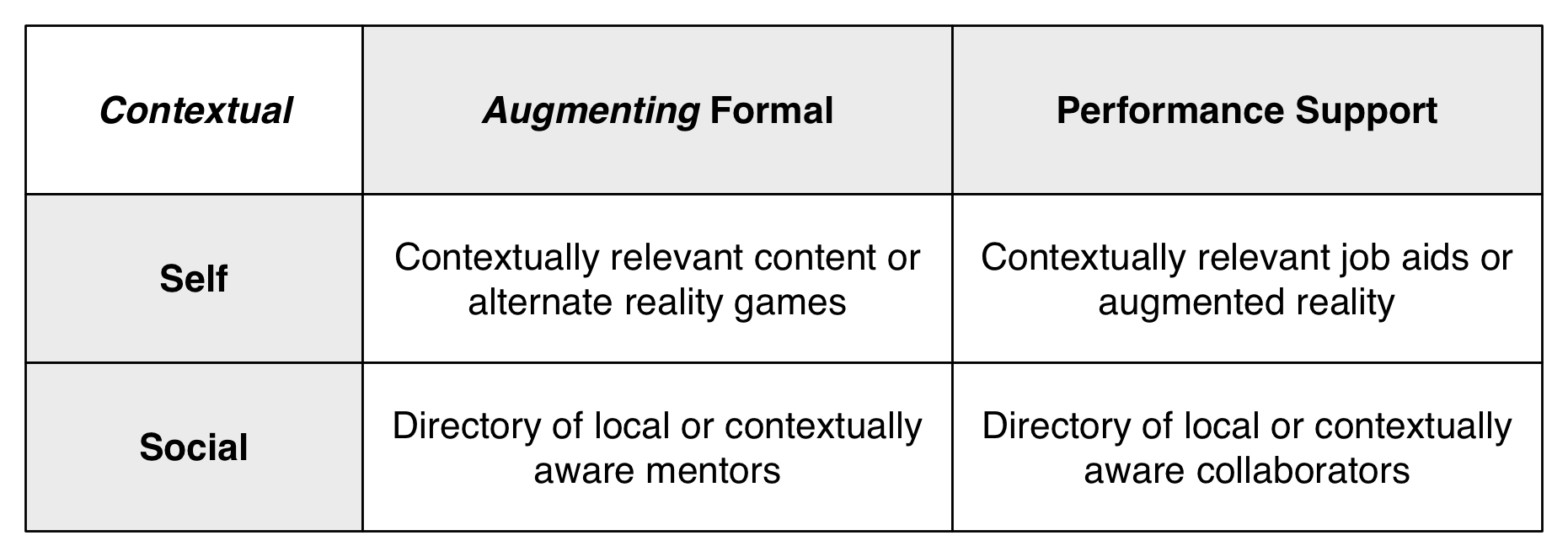

Now, if the system uses this information to do something different than under other circumstances, we’re contextualizing what we do. Whether it’s because of when you are, providing specific information, or where you are, using location characteristics, this is likely to be the most valuable opportunity. Here I’m thinking alternate reality games or augmented reality (whether it’s voiceover, visual overlays, what have you).

Now, if the system uses this information to do something different than under other circumstances, we’re contextualizing what we do. Whether it’s because of when you are, providing specific information, or where you are, using location characteristics, this is likely to be the most valuable opportunity. Here I’m thinking alternate reality games or augmented reality (whether it’s voiceover, visual overlays, what have you).

And I think this is device independent, e.g. it could apply to watches or glasses or..as well as phones and tablets. It means my 4 C’s become: content, compute, capture, and contextualize. To ponder.

So, this is a more nuanced look at the mobile opportunities, and certainly more complex as well. Does the greater detail provide greater benefit?

Leave a Reply