At the Guild’s recent mLearnCon, I was having a conversation in which I was reminded that designing performance interventions is a probabilistic exercise, and it occurred to me that we’re often not up front enough about it. And we need to be.

When we start our design process with a performance vision, we have an idea of what ideal performance would be. However, we make some assumptions about the performer: that they have comprehended our learning interventions and can use any resources we design or that are available in the context. We figure our learning was successful (if we’re doing it right, we’re not letting them out of our mitts until they’ve demonstrated the ability to reliably perform what we need), and we figure our performance solutions are optimal and useful (and, again, we should test until we know). But there are other complicating factors.

In the tragic Tenerife plane crash (and pilots train as much as anybody), miscommunication (and status) got in the way. Other factors, such as distraction, debilitating substances, and sleep deprivation, can also affect performance. Moreover, there’s basically some randomness in our cognitive architecture: we don’t do everything perfectly all the time.

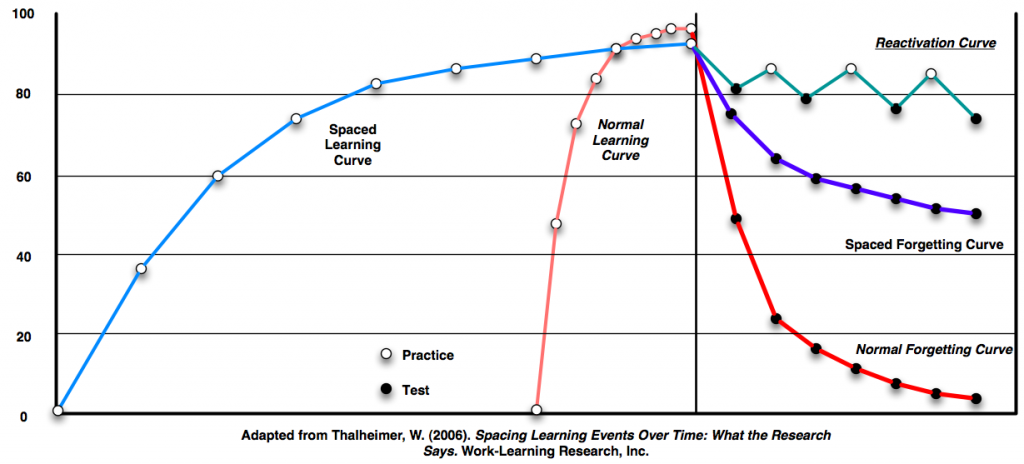

But more importantly, beyond the actual performance, there’s a probability involved in our learning interventions. Most research-based practices raise the likelihood that the intervention will affect the outcome. Starting with meaningful objectives and using model-based concepts, contextualized examples, and meaningful practice all increase the probability.

This holds true for performance support, coaching/mentoring, and more. Look, humans don’t have the predictable properties of concrete or steel. We are much more complex, and consequently more variable. That’s why I went from calling it cognitive engineering to cognitive design. And we need to be up front about it.

The best thing to do is use the very best solutions to hand. Just as we over-engineer bridges to ensure stability (and, as Henry Petroski points out in *To Engineer Is Human*, on subsequent projects we relax constraints until ultimately we get failure), we need to over-design our learning. We’ve gotten slacker and slacker, but if it’s important (and, frankly, why else are we bothering?), we need to do the job right. And tarting up learning with production values isn’t the same thing. It’s easier, since we just do it instead of having to test and refine, but it’s unlikely to lead to any worthwhile outcomes.

As I’ve argued before, better design doesn’t take longer, but there is a learning curve. Get over the curve, and start increasing the likelihood that your learning will have the impact you intend.