As a prelude to a small webinar I’ll be doing next week (which also serves to tee up the free Best of mLearnCon webinar I’ll be doing for the eLearning Guild next week), here are some deliberately provocative thoughts on mobile:

According to Tomi Ahonen, mobile is the fastest-growing industry ever. But if nearly everyone has a mobile device, what does that mean? I think the implications are broad, but here I want to talk specifically about work and learning. I want to suggest that mobile has the opportunity to totally upend the organization. How? By broadening our understanding of how we work and learn.

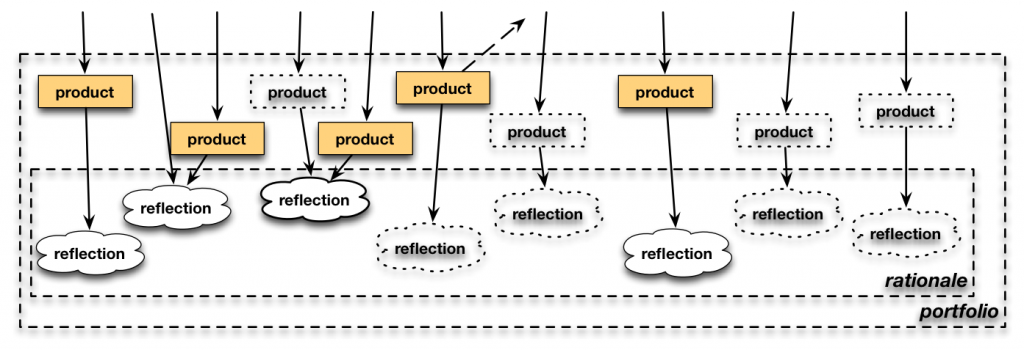

The 70:20:10 framework, while not prescriptive, does capture the reality that most of what we learn at work doesn’t come from courses (the ‘10’). Instead, we learn through coaching and mentoring (the ‘20’) and ‘on the job’ (the ‘70’). Yet, by and large, the learning units in organizations are addressing only the 10 percent. They could, and should, be looking at how to support the other 90 percent; they largely haven’t seen that opportunity yet, and there’s a lot that can be done.

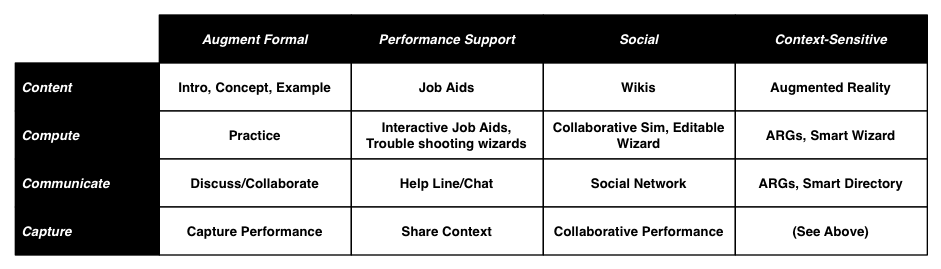

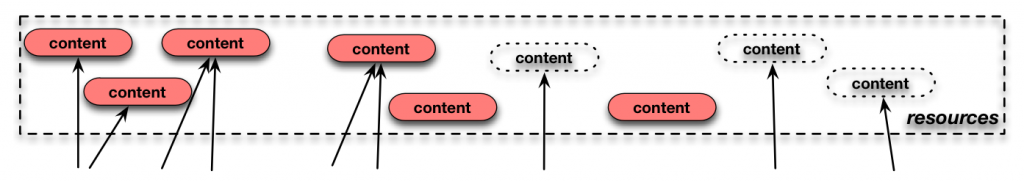

The bigger picture is that digital technology augments our brain. Our brains are really good at pattern-matching and extracting meaning. However, they’re really bad at doing rote things, particularly complex ones. Fortunately, digital technology is exactly the opposite, so combined we’re far more capable. This has been true at the desktop, where we have not only powerful tools but also support wrapped around those tools and tasks. Now it’s also true wherever and whenever we are: we can access and share content, computational capabilities, and communication. And you should be able to see how that benefits the organization.

And there’s more. Mobile adds something the desktop didn’t really have: the ability to capture your current context and leverage it to your benefit. Your device can know when and where you are, and act accordingly.

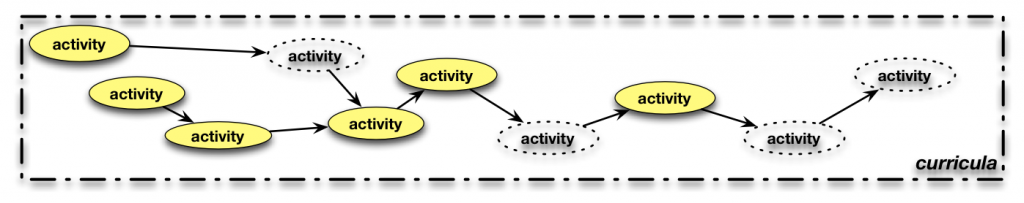

So why is this game-changing? I want to suggest that a digital platform that supports us ubiquitously will be the inroad to recognizing that formal learning is not, and cannot be, separate from the work. If we’re professionals, we’re always working and learning (as my colleague Harold Jarche reminds us). If a new platform comes out that’s ubiquitous yet relatively unsuited to courses, we have a forcing function to start thinking anew about the role of learning and performance professionals. I suggest that there are rich ways we can think about coupling mobile with work.

Why do I suggest that courses on a phone aren’t the ideal solution? You have to make some distinctions about the platform. A tablet is just not the same as a pocketable device. It has been hard to get a handle on exactly how they differ, but I think you do need to recognize that they do. For example, I’ll suggest that you’re not likely to want to take a full course on a pocketable device; on a tablet, however, that’d be quite feasible.

To take full advantage, you have to consider mobile as a platform, not just a device. It’s a channel for capability to reach across the limitations of chronology and geography, and to make us more productive. And more. So get on board, and get going toward more and better performance.

In a recent