Yesterday I attended SDL’s DITAFest. While it’s a vendor-driven show, several presentations offered valuable information that helped clarify how to design content. And we do need to start looking at the possibilities on tap. Beyond deeper instructional design (tapping into both emotion and effective instruction, not the folk tales we tell about what good design is), we need to start looking at content models and content architecture.

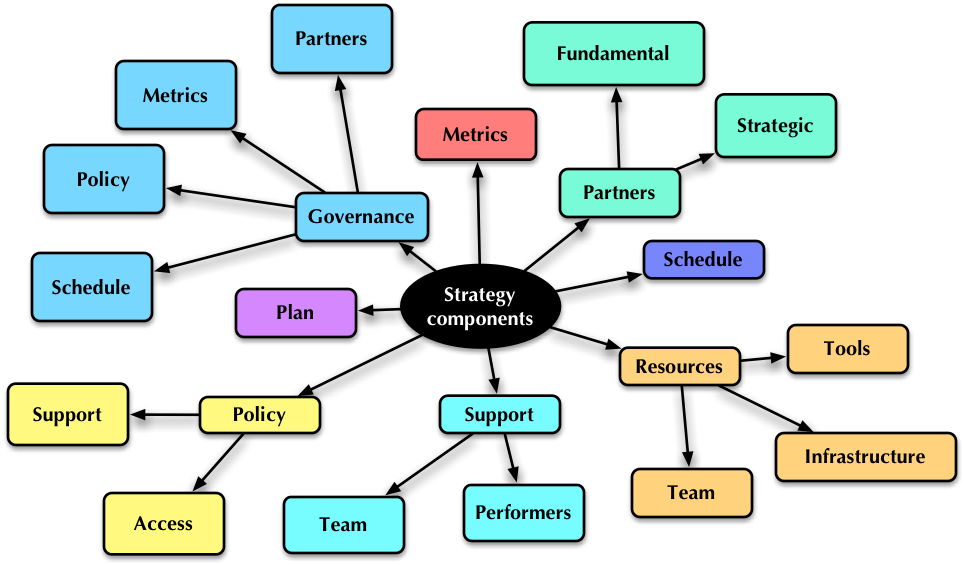

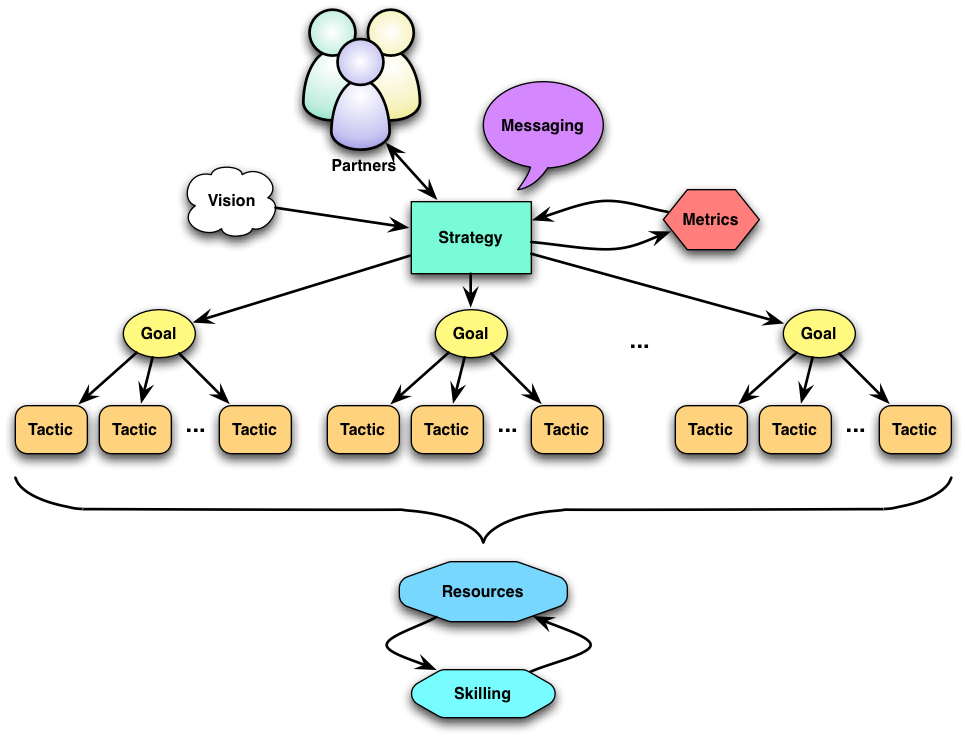

Let me put this a bit in context. When I talk about the Performance Ecosystem, I’m talking about a number of things: improved instructional design, performance support, social learning and mobile. But the “greater integration” step is one that both yields immediate benefits and sets the stage for some future opportunities. Here we’re talking about investing in the underlying infrastructure to leverage the possibilities of analytics, semantics and more, and content architecture is a part of that.

So DITA is the Darwin Information Typing Architecture, and it’s about adding some structure to content. It’s an XML-based approach developed at IBM that not only lets you separate content from how it’s expressed, but also lets you add some semantics on top of it. It has mostly been used for material like product descriptions, the sort of thing technical writers produce, but it can be used for white papers, marketing communications, and any other information. Like eLearning. However, the eLearning use is still idiosyncratic; one of the top DITA strategy consultants told me that the Learning and Training committee’s contribution has not yet been sufficient.
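To make “separating content from how it’s expressed” a bit more concrete, here’s a minimal sketch. It assumes a simplified DITA-style concept topic (the `concept`, `title`, `conbody` and `p` elements follow DITA’s concept structure, but there’s no DOCTYPE and nothing is validated), and the two rendering functions are hypothetical stand-ins for a real publishing pipeline. The point is only that one structured source can be rendered two different ways, and that the topic type (here, `concept`) is itself a piece of semantics a system can act on.

```python
import html
import xml.etree.ElementTree as ET

# A simplified DITA-style concept topic (assumption: no DOCTYPE, not validated).
TOPIC = """<concept id="performance-ecosystem">
  <title>Performance ecosystem</title>
  <conbody>
    <p>Content is authored once, separately from how it is presented.</p>
    <p>The same topic can feed a course, a job aid, or a help page.</p>
  </conbody>
</concept>"""


def paragraphs(root):
    """Collect the text of each <p> inside the topic body."""
    return [p.text for p in root.find("conbody").findall("p")]


def to_html(root):
    """Hypothetical renderer: express the topic as an HTML fragment."""
    title = html.escape(root.findtext("title"))
    body = "".join(f"<p>{html.escape(t)}</p>" for t in paragraphs(root))
    return f"<h1>{title}</h1>{body}"


def to_text(root):
    """Hypothetical renderer: express the same topic as plain text, e.g. for mobile."""
    lines = [root.findtext("title").upper()]
    lines += [f"- {t}" for t in paragraphs(root)]
    return "\n".join(lines)


root = ET.fromstring(TOPIC)
print(root.tag, root.get("id"))  # the topic type and id are machine-readable semantics
print(to_html(root))
print(to_text(root))
```

Neither renderer knows anything about the other; changing how the content looks never touches the content itself.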

The important point, however, is that articulating content has real benefits. A panel of implementers mentioned reduced tool costs, savings from reduced redundancy, and less time to create and maintain information. There were also strategic benefits in breaking down silos and finding common ground with other groups in the organization. Being able to wrap quality standards around the content offers further benefits, and reduced server storage was another. As learning groups start taking responsibility for performance support and other areas, these opportunities will be important.

And the initial investment in treating content more technically is a step along the path from web 2.0 to web 3.0: custom content generation for the learner or performer. A further step is context-sensitive customization. This is really only possible in a scalable way if you get your arms around defining content more rigorously: tagging, mapping, and more.
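Here’s a rough sketch of how tagging enables that kind of customization. DITA supports conditional processing through attributes such as `audience`, usually driven by a separate DITAVAL filter file; this sketch skips DITAVAL entirely and just filters in Python, so treat the `steps_for` function and the audience values as hypothetical illustrations rather than how any DITA toolchain actually works. The task structure (`task`, `taskbody`, `steps`, `step`, `cmd`) mirrors DITA’s task topic, again without a DOCTYPE.

```python
import xml.etree.ElementTree as ET

# A simplified DITA-style task topic; some steps are tagged for one audience.
TASK = """<task id="reset-password">
  <title>Reset a password</title>
  <taskbody>
    <steps>
      <step><cmd>Open the account settings.</cmd></step>
      <step audience="admin"><cmd>Check the audit log first.</cmd></step>
      <step audience="novice"><cmd>Ask a colleague if you are unsure.</cmd></step>
      <step><cmd>Choose a new password.</cmd></step>
    </steps>
  </taskbody>
</task>"""


def steps_for(xml_text, audience):
    """Return the step commands that apply to one audience.

    Untagged steps apply to everyone. Tagged steps are kept only when the
    requested audience appears in their space-separated audience value.
    This is a simplified stand-in for DITAVAL-based filtering (assumption).
    """
    root = ET.fromstring(xml_text)
    selected = []
    for step in root.iter("step"):  # document order
        tag = step.get("audience")
        if tag is None or audience in tag.split():
            selected.append(step.findtext("cmd"))
    return selected


for who in ("novice", "admin"):
    print(who, steps_for(TASK, who))
```

The same source yields a different sequence for each performer, which is only manageable at scale because the variations are tagged rather than hand-maintained in separate copies.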

It may seem scary, but the first steps aren’t that difficult, and it’s an investment in efficiencies initially, and in a whole new realm of capability going forward. It may not be for you tomorrow, but you have to have it on your radar.