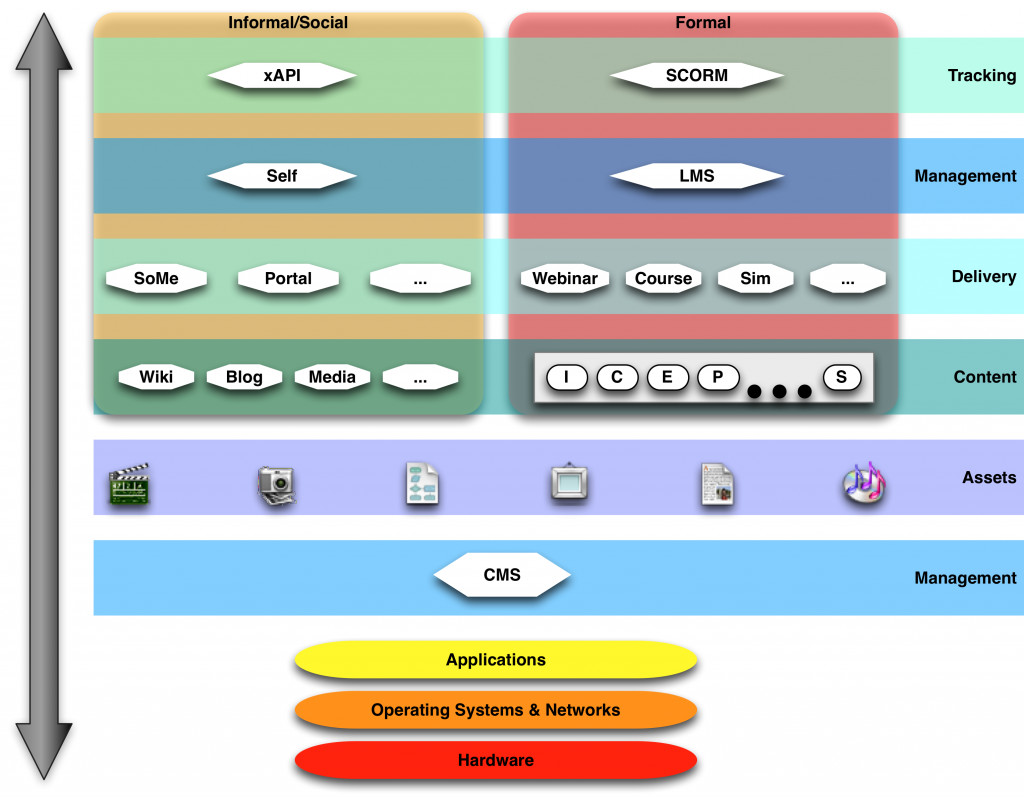

A few years ago, I created a diagram to capture a bit about the technology that supports learning (Big ‘L’ Learning). I was revisiting that diagram for some writing I’m doing, and thought it needed updating. The point is to characterize the relationship between the underpinning infrastructure and the mechanisms that make learning available, both formally and informally.

Here’s the accompanying description: As a reference framework, we can think of a hierarchy of levels of tools. At the bottom is the hardware, running an operating system and connecting to networks. Above that are applications that deliver core services. From the delivery perspective, we start with the content management systems, which maintain media assets. Above that we have the aggregation of those assets into content: either full learning experiences, consisting of introductions, concepts, examples, and practice items all the way through to the summary, or user-generated content created with a variety of tools. This content is served up through delivery channels such as webinars, courses, or simulations, and is managed through a learning management system (LMS) on the formal learning side, or self-managed through social media and portals on the informal learning side. Ultimately, these activities can, or soon will, be tracked through standards such as SCORM for formal learning or the newer Experience API (xAPI) for informal learning.
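To make the tracking layer a bit more concrete, here is a minimal sketch of the kind of record xAPI works with: a statement saying who (actor) did what (verb) to what (object). The learner email, activity URL, and the `make_statement` helper are hypothetical, chosen only for illustration; the verb URI is one of the ADL-published verbs. The sketch just builds and prints the statement; it doesn't send it to a Learning Record Store.

```python
import json
from datetime import datetime, timezone

# Hypothetical identifiers, for illustration only.
LEARNER_EMAIL = "mailto:learner@example.com"
ACTIVITY_ID = "https://example.com/activities/community-discussion"


def make_statement(learner_email, learner_name, verb_id, verb_label,
                   activity_id, activity_name):
    """Build a minimal xAPI-style statement: actor, verb, object, timestamp.

    make_statement is a hypothetical helper, not part of any xAPI library.
    """
    return {
        "actor": {"objectType": "Agent", "name": learner_name, "mbox": learner_email},
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        # ISO 8601 timestamp in UTC
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# An informal-learning event: a learner commenting in a discussion thread.
statement = make_statement(
    LEARNER_EMAIL, "Example Learner",
    "http://adlnet.gov/expapi/verbs/commented", "commented",
    ACTIVITY_ID, "Community discussion thread",
)
print(json.dumps(statement, indent=2))
```

The same actor/verb/object shape could just as easily describe completing a formal course, which is part of why xAPI reaches beyond what SCORM tracks.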

I add, as a caveat: Note that this is merely indicative, and other approaches are possible. For instance, this doesn’t represent authoring tools for aggregating media assets into content. Similarly, individual implementations may make different choices, such as not using an independent content management system to underpin media asset and content development.

So, my question to you is, does this make sense? Does this diagram capture the technology infrastructure for learning you are familiar with?