I was pinged on LinkedIn by someone who used the entrée of hearing me speak at next week’s Learning Solutions conference to begin discussing LMS capabilities. (Hint: they provide one.) And I thought I’d elaborate on my response, as the discussion prompted some reflections. In short, what are the arguments for and against having a single platform to deliver an ecosystem solution?

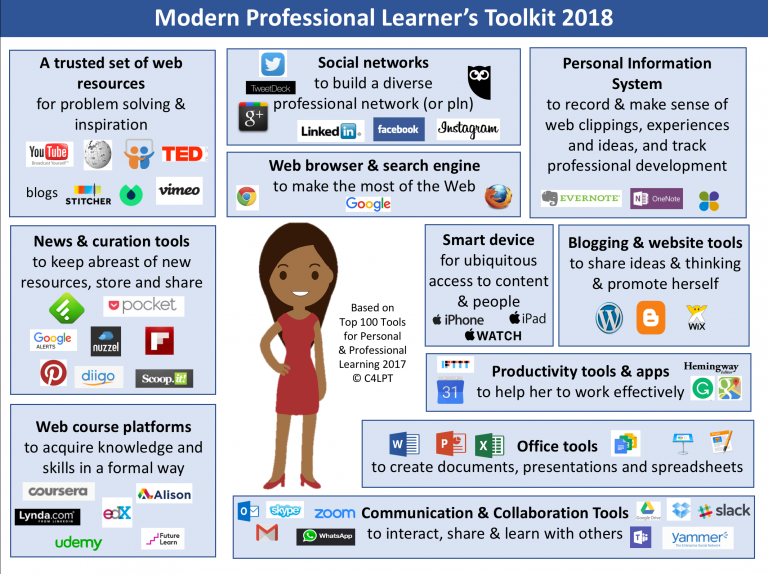

In *Revolutionize Learning & Development*, I argue for a Performance & Development ecosystem. The idea is more than courses: it’s performance support, social, informal, etc. It’s about having a performer-centric support environment that has tools and information to hand, both to help you perform in the moment and to develop you over time. The goal is to support working alone and together to meet both the anticipated and the unanticipated needs of the organization.

On principle, I tend to view an ‘all singing all dancing’ solution as likely to fail on some part of that. It’s implausible that a system would have all the capabilities needed. There are many functionalities: access to formal learning, supporting access to known or found resources, sharing, collaborating, and more. It’s unlikely that all those can be done well in one platform, let alone in ways that match any one organization’s ways of working.

I’m not saying the LMS is a bad tool for what it does. (Note: I am not in the LMS benchmark business; there are other people who do that, and it’s a full-time job.) However, can an LMS be a full solution? Even if there is some capability in all the areas, what’s the likelihood that it’s best-of-breed in all? Ok, in some small orgs where you can’t have an IT group capable of integrating the necessary tools, you might settle for working around the limitations. That’s understandable. But it’s different from choosing to trust one system. It’s just having the people act as the glue instead of the system.

It’s always about tradeoffs, so integrating best-of-breed capabilities around what’s already in place makes more sense to me. For instance, how *could* one system, as a stand-alone platform, provide enterprise-wide federated search? It’s about integrating a suite of capabilities to create a performer-centric environment. That’s pretty much beyond a solo platform, intrinsically. Am I missing something?