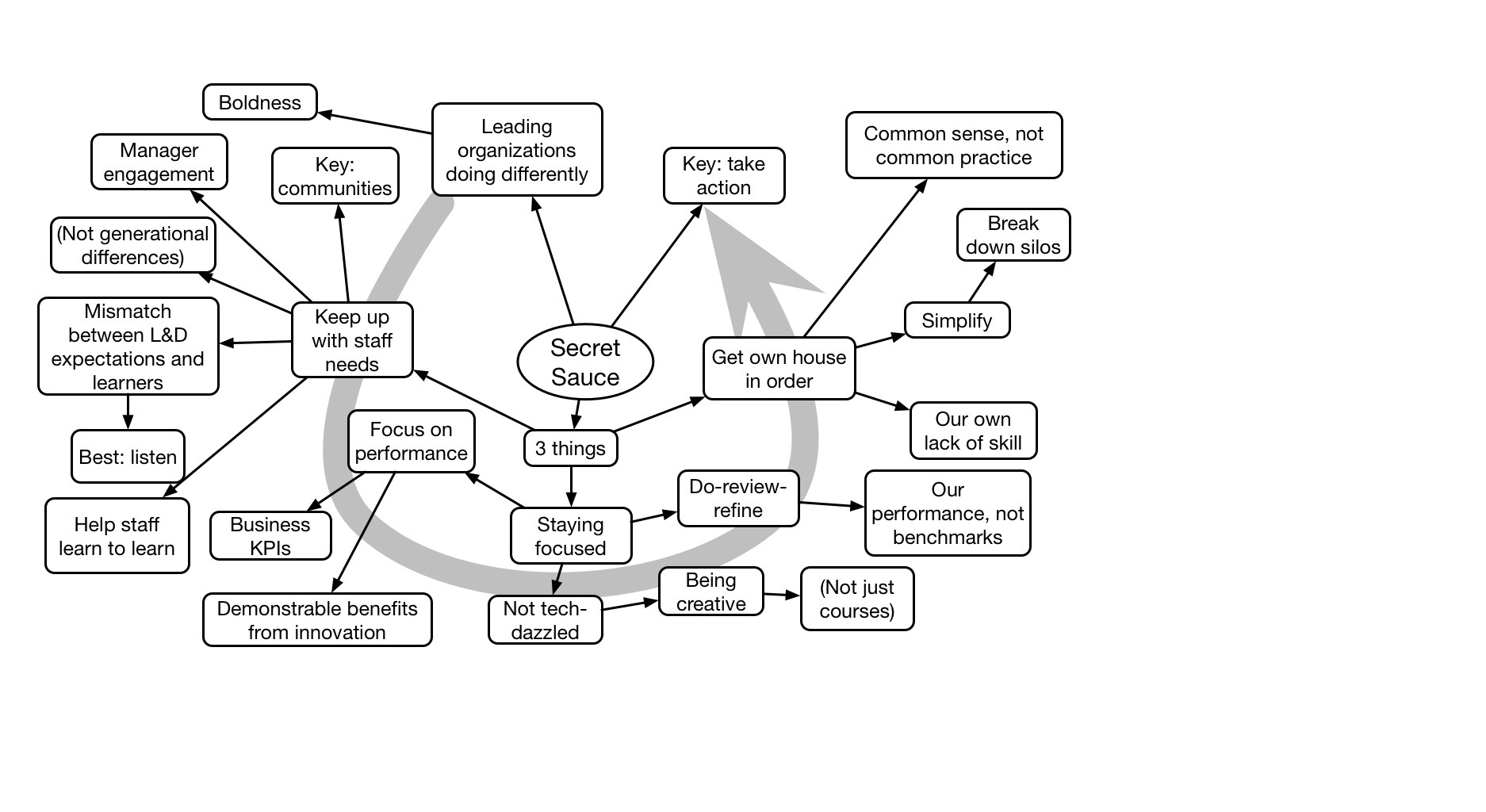

Laura used Towards Maturity data to provide insight into how leading L&D organizations are making their way.

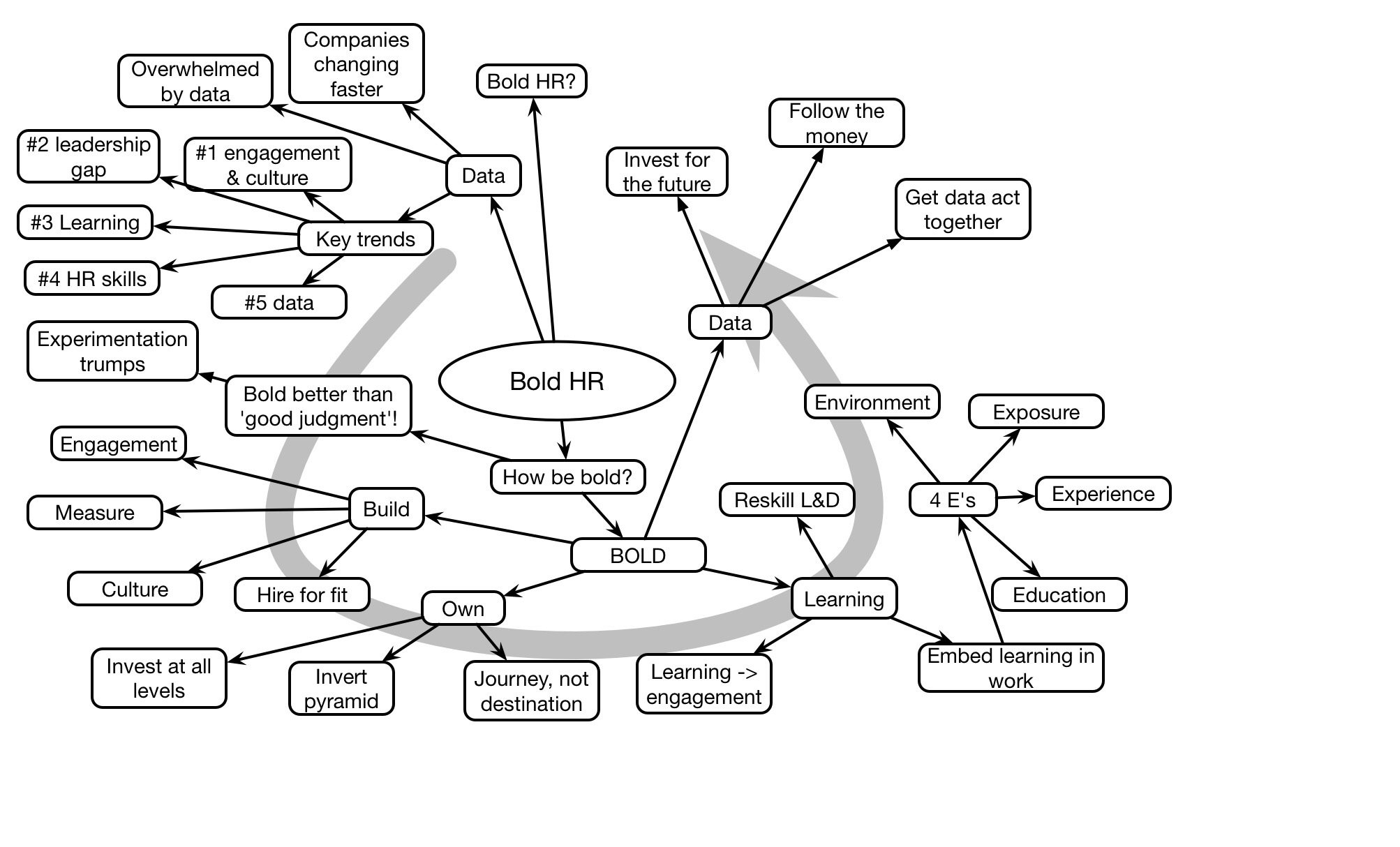

David Mallon #learnatworkau Plenary Mindmap

Learning by experimenting

In some recent work, an organization is looking to find a way to learn fast enough to cope with the increasing changes we’re seeing. Or, better yet, learn ahead of the curve. And this led to some thoughts.

As a starting point, it helps to realize that adapting to change is a form of learning. So, what are the individual equivalents we might use as an analogy? Well, in known areas we take a course. On the other hand, for self-learning, e.g. when there isn’t a source for the answer, we need to try things. That is, we need a cycle of: do – review – refine.

In the model of a learning organization, experimentation is clearly listed as a component of concrete learning processes and practices. And my thought was that it is therefore clear that any business unit or community of practice that wants to be leading the way needs to be trying things out.

I’ve argued before that learning units need to be using new technologies to get their minds around the ‘affordances’ possible to support organizational performance and development. Yet we see that far too few organizations are using social networks for learning (< 30%), for example.

If you’re systematically tracking what’s going on, determining small experiments to trial out the implications, documenting and sharing the results, you’re going to be learning out ahead of the game. This should be the case for all business units, and I think this is yet another area that L&D could and should be facilitating. And by facilitating, I mean: modeling (by doing it internally), evangelizing, supporting in process, publicizing, rewarding, and scaling.

I think the way to keep up with the rate of change is to be driving it. Or, as Alan Kay put it: “the best way to predict the future is to invent it”. Yes, this requires some resources, but it’s ultimately key to organizational success, and L&D can and should be the driver of the process within the organization.

The new shape of organizations?

As I read more about how to create organizations that are resilient and adaptable, there’s an interesting emergent characteristic. What I’m seeing is a particular pattern of structure that has arisen out of totally disparate areas, yet keeps repeating. While I haven’t had a chance to think about it at scale, like how it would manifest in a large organization, it certainly bears some strengths.

Dave Gray, in his recent book The Connected Company that I reviewed, has argued for a ‘podular’ structure, where small groups of people are connected in larger aggregations, but work largely independently. He argues that each pod is a small business within the larger business, which gives flexibility and adaptiveness. Innovation, which tends to get stifled in a hierarchical structure, can flourish in this more flexible structure.

More recently, on Harold Jarche’s recommendation, I read Niels Pflaeging’s Organize for Complexity, a book also on how to create organizations that are high performance. While I think the argument was a bit sketchy (to be fair, it’s deliberately graphic and lean), I was sold on the outcomes, and one of them is ‘cells’ composed of a small group of diverse individuals accomplishing a business outcome. He makes clear that this is not departments in a hierarchy, but flat communication between cross-functional teams.

And, finally, Stan McChrystal has a book out called Team of Teams, that builds upon the concepts he presented as a keynote I mindmapped previously. This emerged from how the military had to learn to cope with rapid changes in tactics. Here again, the same concept of small groups working with a clear mission and freedom to pursue emerges.

This also aligns well with the results implied by Dan Pink’s Drive, where he suggests that the three critical elements for performance are to provide people with important goals, the freedom to pursue them, and support to succeed. Small teams also fit well with what’s known about getting the best ideas and solutions out of people, such as in brainstorming.

These are nuances on top of Jon Husband’s Wirearchy, where we have some proposed structure around the connections. It’s clear that to become adaptive, we need to strengthen connections and decrease structure (interestingly, this also reflects the organizational equivalents of nature’s extremophiles). It’s about trust and purpose and collaboration and more. And, of course, to create a culture where learning is truly welcomed.

Interesting that out of responding to societal changes, organizational work, and military needs, we see a repeated pattern. As such, I think it’s worth taking notice. And there are clear L&D implications, I reckon. What say you?

#itashare

The Polymath Proposition

At the recent DevLearn conference, one of the keynotes was Adam Savage. And he said something that gave me a sense of validation. He was talking about being a polymath, and I think that’s worth understanding.

His point was that his broad knowledge of a lot of things was valuable. While he wasn’t the world’s expert in any particular thing, he knew a lot about a lot of things. Now if you don’t know him, it helps to understand that he’s one of the two hosts of Mythbusters, a show that takes urban myths and puts them to the test. This requires designing experiments that fit within pragmatic constraints of cost and safety, and will answer the question. Good experiment design is an art as well as a science, and given the broad range of what the myths cover, this ends up requiring a large amount of ingenuity.

The reason I like this is that my interests vary broadly (ok, I’m coming to terms with a wee bit of ADD ;). The large picture is how technology can be designed to help us think, work, and learn. This ends up meaning I have to understand things like cognition and learning (my Ph.D. is in cognitive psychology), computers (I’ve programmed and designed architectures at many levels), design (I’ve looked at usability, software engineering, industrial design, architectural design, and more), and organizational issues (social, innovation…). It’s led to explorations covering things like games, mobile, and strategy (e.g. the topics of my books). And more; I’ve led development of adaptive learning systems, content models, learning content, performance support, social environments, and so on. It’s led me further, too, exploring org change and culture, myth and ritual, engagement and fun, aesthetics and media, and other things I can’t even recall right now.

And I draw upon models from as many fields as I can. My Ph.D. research was related to the power of models as a basis for solving new problems in uncertain domains, and so I continue to collect them like others collect autographs or music. I look for commonalities, and try to make my understanding explicit by continuing to diagram and write about my reflections. I immodestly think I draw upon a broad swath of areas. And I particularly push learning to learn and meta-cognition to others because it’s been so core to my own success.

What I thrive on is finding situations where the automatic solutions don’t apply. It’s not just a clear case for ID, or performance support, or… Where technology can be used (or used better) in systemic ways to create new opportunities. Where I really contribute is where it’s clear that change is needed, but what, how, and where to start aren’t obvious. I’ve a reliable track record of finding unique, and yet pragmatic solutions to such situations, including the above named areas I’ve innovated in. And it is a commitment of mine to do so in ways that pass on that knowledge, to work in collaboration to co-develop the approach and share the concepts driving it, to hand off ownership to the client. I’m not looking for a sinecure; I want to help while I’m adding value and move on when I’m not. And many folks have been happy to have my assistance.

It’s hard for me to talk about myself in this way, but I reckon I bring that polymath ability of a broad background to organizations trying to advance. It’s been in assisting their ability to develop design processes that yield better learning outcomes, through mobile strategies and solutions that meet their situation, to overarching organizational strategies that map from concepts to system. There’s a pretty fair track record to back up what I say.

I am deep in a lot of areas, and have the ability to synthesize solutions across these areas in integrated ways. I may not be the deepest in any one, but when you need to look across them and integrate a systemic solution, I like to think and try to ensure that I’m your guy. I help organizations envision a future state, identify the benefits and costs, and prioritize the opportunities to define a strategy. I have operated independently or with partners, but I adamantly retain my freedom to say what I truly think so that you get an unbiased response from the broad suite of principles I have to hand. That’s my commitment to integrity.

I didn’t intend this to be a commercial, but I did like his perspective and it made me reflect on what my own value proposition is. I welcome your thoughts. We now return you to your regularly scheduled blog already in progress…

Buy this…for your boss

So I’ve been pushing an L&D Revolution, and for good reasons. I truly believe that L&D is on a path to extinction because: “it isn’t doing near what it could and should, and what it is doing, it is doing badly, otherwise it’s fine” (as my mantra would have it). So many bad practices – info-dump and knowledge-test classes, no alternative to courses, lack of measuring impact – mean that L&D is out of touch with the information age. And what with everyone being able to access the web, content creation tools, and social media environments, wherever and whenever they are, people can survive and thrive without what L&D does, and are doing so.

What I’ve argued is that we need to align with how we really think, work, and learn, and bring that to the organization. What L&D could be doing – providing a rich performance ecosystem that not only empowers optimal execution, but fosters the necessary continual innovation – is a truly deep contribution to the success of the organization.

I feel so strongly that I wrote a book about it. If you’ve read it, you know it documents the problems, provides framing concepts, is illustrated with examples, and promotes a roadmap forward (if you’ve read and liked it, I’d love an Amazon review!). And while it’s both selling reasonably well (as far as I can tell, the information from my publisher is impenetrable ;) and leading to speaking opportunities, I fear it’s not getting to the right people. Frankly, most of my speaking and writing has been at the practitioner and manager level, and this is really for the director, and up! All the way to the C-suite, potentially. And while I make an effort to get this idea into their vision, there’s a lot of competition, because everyone wants the C-suite’s attention.

The point I want to make is that the real audience for this book is your boss (unless you’re the CEO, of course ;). And I’m not saying this to sell books (I’m unlikely to make more than enough to buy a couple of cups of coffee off the proceeds, given book contracts), but because I think the message is so important!

So, let me implore you to consider somehow getting the revolution in front of your boss, or your grandboss, and up. It doesn’t have to be the book, but the concept really needs to be understood if the organization is going to remain competitive. All evidence points to the fact that organizations have to become more agile, and that’s a role L&D is in a prime position to facilitate. If, however (and that’s a big if), they get the bigger picture. And that’s the message I’m trying to spread in all the ways I can see. I welcome your thoughts, and your assistance even more.

Supporting our Brains

One of the ways I’ve been thinking about the role mobile can play in design is thinking about how our brains work, and don’t. It came out of both mobile and the cognitive science for learning workshop I gave at the recent DevLearn. This applies more broadly to performance support in general, so I thought I’d share where my thinking is going.

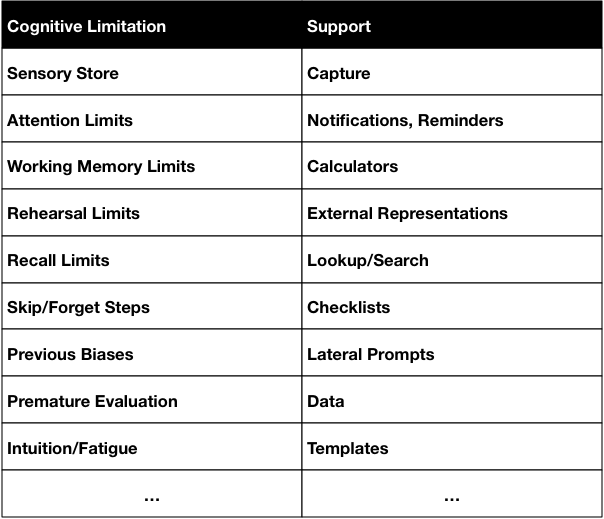

To begin with, our cognitive architecture is demonstrably awesome; just look at your surroundings and recognize your clothing, housing, technology, and more are the product of human ingenuity. We have formidable capabilities to predict, plan, and work together to accomplish significant goals. On the other hand, there’s no one all-singing, all-dancing architecture out there (yet) and every such approach also has weak points. Technology, for instance, is bad at pattern-matching and meaning-making, two things we’re really pretty good at. But we have some flaws too. So what I’ve done here is to outline the flaws, and how we’ve created tools to get around those limitations. And to me, these are principles for design:

So, for instance, our senses capture incoming signals in a sensory store, which has the interesting property of almost unlimited capacity, but only for a very short time. And there is no way all of it can get into our working memory, so what happens is that what we attend to is what we have access to. So we can’t accurately recall everything we perceive. However, technology (camera, microphone, sensors) can recall it all perfectly. So making capture capabilities available is a powerful support.

Similarly, our attention is limited, and so if we’re focused in one place, we may forget or miss something else. However, we can program reminders or notifications that help us recall important events that we don’t want to miss, or draw our attention where needed.

The limits on working memory (you may have heard of the famous 7 ±2, which really is <5) mean we can’t hold too much in our brains at once, such as interim results of complex calculations. However, we can have calculators that can do such processing for us. We also have limited ability to carry information around for the same reasons, but we can create external representations (such as notes or scribbles) that can hold those thoughts for us. Spreadsheets, outlines, and diagramming tools allow us to take our interim thoughts and record them for further processing.

We also have trouble remembering things accurately. Our long term memory tends to remember meaning, not particular details. However, technology can remember arbitrary and abstract information completely. What we need are ways to look up that information, or search for it. Portals and lookup tables trump trying to put that information into our heads.

We also have a tendency to skip steps. We have some randomness in our architecture (a benefit: if we sometimes do it differently, and occasionally that’s better, we have a learning opportunity), but this means that we don’t execute perfectly. However, we can use process supports like checklists. Atul Gawande wrote a fabulous book on the topic that I can recommend.

Other phenomena include that previous experience can bias us in particular directions, but we can put in place supports to provide lateral prompts. We can also prematurely evaluate a solution rather than checking to verify it’s the best. Data can be used to help us be aware. And we can trust our intuition too much and we can wear down, so we don’t always make the best decisions. Templates, for example, are a tool that can help us focus on the important elements.

This is just the result of several iterations, and I think more is needed (e.g. about data to prevent premature convergence), but to me it’s an interesting alternate approach to consider where and how we might support people, particularly in situations that are new and as yet untested. So what do you think?

Learnnovators Deeper eLearning Series

For the past 6 months, Learnnovators has been hosting a series of posts I’ve done on Deeper eLearning Design that goes through the elements beyond traditional ID. That is, reflecting on what’s known about how we learn and what that implies for the elements of learning. Too often, other than saying we need an objective and practice (and getting those wrong), we talk about ‘content’. Basically, we don’t talk enough about the subtleties.

So here I’ve been getting into the nuances of each element, closing with an overview of changes that are implied for processes:

1. Deeper eLearning Design: Part 1 – The Starting Point: Good Objectives

2. Deeper eLearning Design: Part 2 – Practice Makes Perfect

3. Deeper eLearning Design: Part 3 – Concepts

4. Deeper eLearning Design: Part 4 – Examples

5. Deeper eLearning Design: Part 5 – Emotion

6. Deeper eLearning Design: Part 6 – Putting it All Together

I’ve put into these posts my best thinking around learning design. The final one’s been posted, so now I can collect the whole set here for your convenience.

And don’t forget the Serious eLearning Manifesto! I hope you find this useful, and welcome your feedback.

AI and Learning

At the recent DevLearn, Donald Clark talked about AI in learning, and while I largely agreed with what he said, I had some thoughts and some quibbles. I discussed them with him, but I thought I’d record them here, not least as a basis for a further discussion.

Donald’s an interesting guy, very sharp and a voracious learner, and his posts are both insightful and inciteful (he doesn’t mince words ;). Having built and sold an elearning company, he’s now free to pursue what he believes in, which currently is the power of technology to teach us.

As background, I was an AI groupie out of college, and have stayed current with most of what’s happened. And you should know a bit of the history of the rise of Intelligent Tutoring Systems, the problems with developing expert models, and current approaches like Knewton and Smart Sparrow. I haven’t been free to follow the latest developments as much as I’d like, but Donald gave a great overview.

He pointed to systems being on the verge of auto parsing content and developing learning around it. He showed an example, and it created questions from dropping in a page about Las Vegas. He also showed how systems can adapt individually to the learner, and discussed how this would be able to provide individual tutoring without many limitations of teachers (cognitive bias, fatigue), and can not only personalize but self-improve and scale!

One of my short-term problems was that the questions auto-generated were about knowledge, not skills. While I do agree that knowledge is needed (à la van Merriënboer’s 4C/ID) as well as applying it, I think focusing on the latter first is the way to go.

This goes along with what Donald has rightly criticized as problems with multiple-choice questions. He points out how they’re largely used as knowledge tests, and I agree that’s wrong, but while there are better practice situations (read: simulations/scenarios/serious games), you can write multiple choice as mini-scenarios and get good practice. However, it’s as yet an interesting research problem, to me, to try to get good scenario questions out of auto-parsing content.

I naturally argued for a hybrid system, where we divvy up roles between computer and human based upon what we each do well, and he said that is what he is seeing in the companies he tracks (and funds, at least in some cases). A great principle.

The last bit that interested me was whether and how such systems could develop not only learning skills, but meta-learning or learning to learn skills. Real teachers can develop this and modify it (though admittedly it’s rare), and yet it’s likely to be the best investment. In my activity-based learning, I suggested that gradually learners should take over choosing their activities, to develop their ability to become self-learners. I’ve also suggested how it could be layered on top of regular learning experiences. I think this will be an interesting area for developing learning experiences that are scalable but truly develop learners for the coming times.

There’s more: pedagogical rules, content models, learner models, etc, but we’re finally getting close to being able to build these sorts of systems, and we should be aware of what the possibilities are, understand what’s required, and be on the lookout for both the good and bad on tap. So, what say you?

Mobile Time

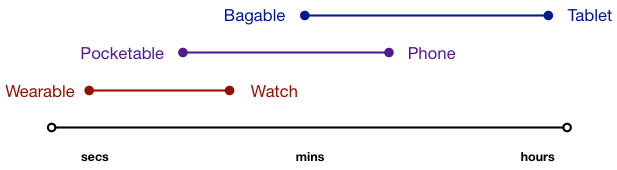

At the recent DevLearn conference, David Kelly spoke about his experiences with the Apple Watch. Because I don’t have one yet, I was interested in his reflections. There were a number of things, but what came through for me (and other reviews I’ve read) is that the time scale is a factor.

Now, first, I don’t have one because, as with technology in general, I don’t typically acquire anything in particular until I know how it’s going to make me more effective. I may have told this story before, but for instance I wasn’t interested in acquiring an iPad when they were first announced (“I’m not a content consumer”). By the time they were available, however, I’d heard enough about how it would make me more productive (as a content creator), that I got one the first day it was available.

So too with the watch. I don’t get a lot of notifications, so that isn’t a real benefit. The ability to be navigated subtly around towns sounds nice, and to check on certain things. Overall, however, I haven’t really found the tipping-point use-case. However, one thing he said triggered a thought.

He was talking about how it had reduced the number of times he accessed his phone, and I’d heard that from others, but here it struck a different chord. It made me realize it’s about time frames. I’m trying to make useful conceptual distinctions between devices to try to help designers figure out the best match of capability to need. So I came up with what seemed an interesting way to look at it.

Similar to the way I’d seen Palm talk about the difference between laptops and mobile, I was thinking about the time you spend using your devices. The watch (a wearable) is accessed quickly for small bits of information. A pocketable (e.g. a phone) is used for a number of seconds up to a few minutes. And a tablet tends to get accessed for longer uses (a laptop doesn’t count). Folks may well have all 3, but they use them for different things.

Sure, there are variations (you can watch a movie on a phone, for instance; phone calls could be considerably longer), but by and large I suspect that the time of access you need will be a determining factor (it’s also tied to both battery life and screen size). Another way to look at it would be the amount of information you need to make a decision about what to do, e.g. for cognitive work.

Not sure this is useful, but it was a reflection and I do like to share those. I welcome your feedback!