I tout the value of learning science and good design. And yet, I also recognize that to do it to the full extent is beyond most people’s abilities. In my own work, I’m not resourced to do it the way I would and should do it. So how can we strike a balance? I believe that we need to use smart heuristics instead of the full process.

I have been talking to a few different people recently who basically are resourced to do it the right way. They talk about getting the right SMEs (e.g. with sufficient depth to develop models), using a cognitive task analysis process to get the objectives, aligning the processing activities to the type of learning objective, developing appropriate materials and rich simulations, testing the learning and using feedback to refine the product, all before final release. That’s great, and I laud them. Unfortunately, the cost of assembling a team capable of doing this, and the schedule needed to do it right, don’t fit the situation I’m usually in (nor that of most of you). To be fair, if it really matters (e.g. lives depend on it or you’re going to sell it), you really do need to do this (as medical, aviation, and military training usually do).

But what if you’ve a team that’s not composed of PhDs in the learning sciences, your development resources are tied to the usual tools, your budgets are far more stringent, and your schedules are likewise constrained? Do you have to abandon hope? My claim is no.

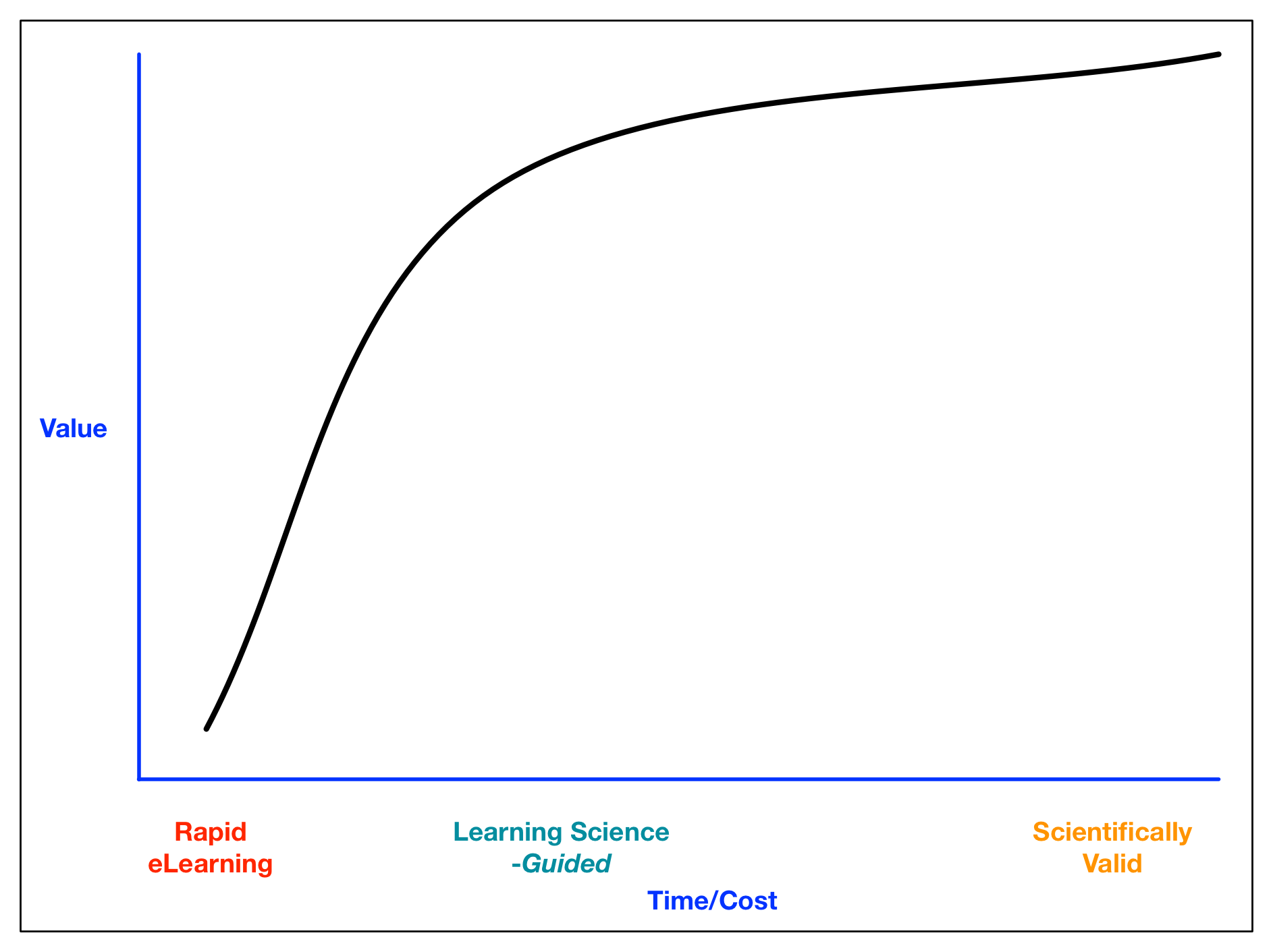

I believe that a smart, heuristic approach is plausible. Using the typical ‘law of diminishing returns’ curve (and the shape of this curve is open to debate), I suggest that there is plausibly a sweet spot of design processes that gives you a high amount of value for a pragmatic investment of time and resources. Conceptually, I believe you can get good outcomes with some steps that tap into the core of learning science without following it to the letter. Learning is a probabilistic game, overall, so we’re accepting a small tradeoff in probability to meet real-world constraints.

What are these steps? Instead of doing a full cognitive task analysis, we’ll make our best guess at meaningful activities before getting feedback from the SME. We’ll shift the emphasis from knowledge tests to mini-scenarios and branching scenarios for practice tasks, or we’ll have learners take information resources and use them to generate work products (charts, tables, analyses) as processing. We’ll try to anticipate the models, and ask for misconceptions and stories to build in. And we’ll align pre-, in-, and post-class activities in a pragmatic way. Finally, we’ll do a learning equivalent of heuristic evaluation: rather than a full, scientifically valid test, we’ll run it by the SMEs and fix their (legitimate) complaints, then run it with some students and fix the observed flaws.

In short, what we’re doing here is approximating the full process, substituting some smart guesses for full validation. We don’t expect the outcome to be as good as we’d like, but it’s going to be a lot better than throwing quizzes on content. And we can do it with a smart team whose members aren’t learning scientists but are informed, on a longer but still reasonable schedule.

I believe we can create transformative learning under real-world constraints. At least, I’ll claim this approach is far more justifiable than the all-too-common approach of info dump and knowledge test. What say you?

In my experience, 50% of the people I’ve worked with don’t even have coherent learning objectives. Of those who do, 80% don’t have any kind of meaningful measurement system to see if their objectives were met. So I think your chart is probably pretty close to reality.

“Some good” is better than “none good”.

If you are stuck in an instructional/push construct, it’s very likely that the cost-benefit ratio over time will look very close to how you’ve asserted it here. However, the results might be better if you simply open your mindset to include self-directed ‘learning’ by learners who ‘pull’ information from multiple sources, including your SMEs, for their own learning process. Where this has been tried in other domains, the results have been strikingly impressive.

Hi Clark,

I’ll touch on two elements – the diagnosis of the problem and the application of evidence-based instructional design methods (both of which I regularly debate with my colleagues, so apologies if the following reads like half a rant…).

First, in my experience a rigorous and deep analysis of the problem takes a lot of time and money. It’s one thing to talk to stakeholders, perhaps complete a fishbone diagram and delve into an issue. It’s another to collect data sets (when they’re available), use complex statistical methods, test findings, understand relationships, etc. (I think the evolution of big data and analytics will improve this). Unless it’s a matter of life and death, I’ll assess the information on hand and facilitate an approximation followed by iterations. This method regularly surpasses the business metrics I’m aiming for. The organisations I work with change rapidly; by the time a ‘clinical’ diagnosis is performed, the situation has changed.

Second, I believe instructional designers need a solid understanding of the science of how people learn. Good books are out there, as are smart, enthusiastic and qualified people who passionately want to change the state of play. Yet I regularly cross paths with experienced instructional designers very much wedded to the dreaded learning styles, content dumps and poor assessments.

So, can we create transformative learning under real world constraints? My strong view is yes, with the right people and right techniques.

Ben