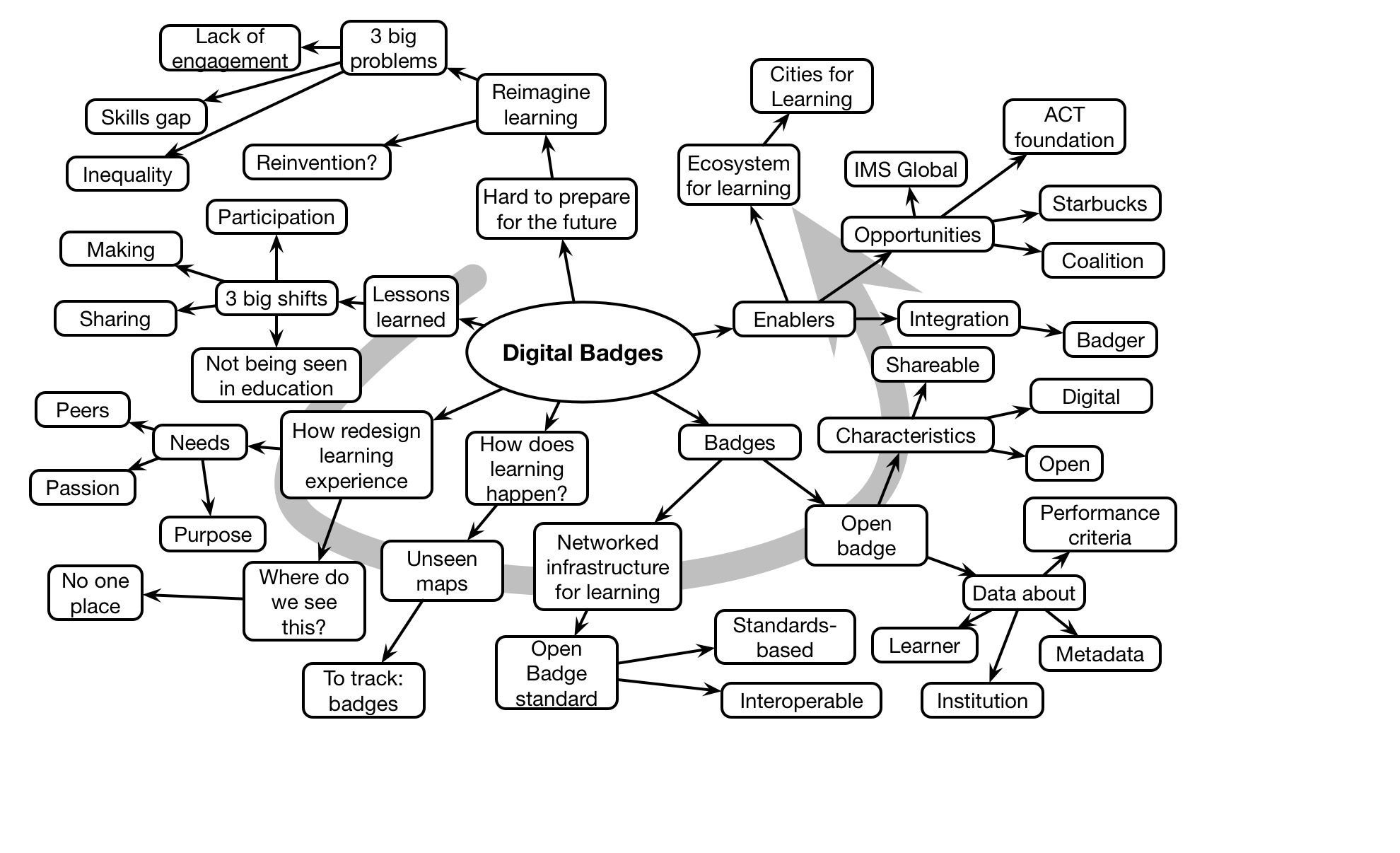

Connie Yowell gave a passionate and informative presentation on the driving forces behind digital badges.

Archives for September 2015

David Pogue #DevLearn Keynote Mindmap

David Pogue addressed the DevLearn audience on Learning Disruption. In a very funny and insightful presentation, he ranged from the Internet of Things through disintermediation to wearables, pointing out disruptive trends. He concluded by talking about the new generation and the need to keep trying new things.

Tech travails

Today I attended David Pogue’s #DevLearn Keynote. And, as a DevLearn ‘official blogger’, I was expected to mindmap it (as I regularly do). So, I turn on my iPad and run into a steady series of problems. The perils of living in a high tech world.

First, when I open my diagramming software, OmniGraffle, it doesn’t work. I find out they’ve stopped supporting this edition! So, $50 later (yes, it’s almost unconscionably dear) and sweating out the download (“will it finish in time?”), I start prepping the mindmap.

Except the way it does things is different. How do I add break points to an arrow?!? Well, I can’t find a setting, but I finally explore other interface icons and find a way. The defaults are different, but I manage to create a fairly typical mindmap. Phew.

So, I export to Photos and open WordPress. After typing in my usual insipid prose, I go to add the image. And it starts, and fails. I try again, and it’s reliably failing. I reexport, and try again. Nope. I get the image over to my iPhone to try it there, to no avail.

I’ve posted the image to the conference app, but it’s not going to appear here until I get back to my room and my laptop. Grr.

Oh well, that’s life in this modern world, eh?

Looking forward on content

At DevLearn next week, I’ll be talking about content systems in session 109. The point is that instead of monolithic content, we want to start getting more granular for more flexible delivery. And while there I’ll be talking about some of the options for how to do that, here I want to make the case for why, in a simplified way.

As an experiment (gotta keep pushing the envelope in a myriad of ways), I’ve created a video, and I want to see if I can embed it. Fingers crossed. Your feedback is welcome, as always.

Revolution Roadmap: Assess

Last week, I wrote about a process to follow in moving forward on the L&D Revolution. The first step is Assess, and I’ve been thinking about what that means. So here, let me lay out some preliminary thoughts.

The first level is the broad categories. As I’m talking about aligning with how we think, work, and learn, those are the three top areas where I feel we fail to recognize what’s known about cognition, individually and together. As I mentioned yesterday, I’m looking at how we use technology to facilitate productivity in ways specifically focused on helping people learn. But let me be clear: here I’m talking about the big picture of learning – problem-solving, design, research, innovation, etc – as they all fall under the category of things we don’t know the answer to when we begin.

I started with how we think. Too often we don’t put information in the world when we can, yet we know that not all our thinking is in our heads. So we can ask:

- Are you using performance consulting?

- Are you taking responsibility for resource development?

- Are you ensuring the information architecture for resources is user-focused?

The next area is working, and here the revelation is that the best outcomes come from people working together. Creative friction, when done in consonance with how we work together best, is where the best solutions and the best new ideas will come from. So you can look at:

- Are people communicating?

- Are people collaborating?

- Do you have in place a learning culture?

Finally, with learning, as the area most familiar to L&D, we need to look at whether we’re applying what’s known about making learning work. We should start with Serious eLearning, but we can go farther. Things to look at include:

- Are you practicing deeper learning design?

- Are you designing engagement into learning?

- Are you developing meta-learning?

In addition to each of these areas, there are cross-category issues. Things to look at for each include:

- Do you have infrastructure?

- What are you measuring?

All of these areas have nuances underneath, but at the top level these strike me as the core categories of questions. This is working down to a finer grain than I looked at in the book (cf. Figure 8.1), though that was a good start at evaluating where one is.

I’m convinced that the first step for change is to understand where you are (before the next step, Learn, about where you could be). I’ve yet to see many organizations that are in full swing here, and I have persistently made the case that the status quo isn’t sufficient. So, are you ready to take the first step to assess where you are?

Biz tech

One of my arguments for the L&D revolution is the role that L&D could be playing. I believe that if L&D were truly enabling optimal execution as well as facilitating continual innovation (read: learning), then they’d be as critical to the organization as IT. And that made me think about how this role would differ.

To be sure, IT is critical. In today’s business, we track our business, do our modeling, run operations, and more with IT. There is plenty of vertical-specific software, from product design to transaction tracking, and of course more general business software such as document generation, financials, etc. So how can L&D be as ubiquitous as other software? In several ways.

First, formal learning software really is enterprise-wide. Whether it’s simulations/scenarios/serious games, spaced learning delivered via mobile, or user-generated content (note: I’m deliberately avoiding the LMS and courses ;), these things should play a role in preparing the audience to execute optimally, and should be accessed by a large proportion of the audience. And that’s not including our tools to develop them.

Similarly, our performance support solutions – portals housing job aids and context-sensitive support – should be broadly distributed. Yes, IT may own the portals, but in most cases they are not to be trusted to do a user- and usage-centered solution. L&D should be involved in ensuring that the solutions both articulate with and reflect the formal learning, and are organized by user need, not business silo.

And of course the social network software – profiles and locators as well as communication and collaboration tools – should be under the purview of L&D. Again, IT may own them or maintain them, but the facilitation of their use, the understanding of the different roles and ensuring they’re being used efficiently, is a role for L&D.

My point here is that there is an enterprise-wide category of software, supporting learning in the big sense (including problem-solving, research, design, innovation), that should be under the oversight of L&D. And this is the way in which L&D becomes more critical to the enterprise. It’s not just about taking people away from work and doing things to them before sending them back, but about facilitating productive engagement and interaction throughout the workflow, at least at the places where people are stepping outside of the known solutions, and that is increasingly going to be the case.

Agile?

Last Friday’s #GuildChat was on Agile Development. The topic is interesting to me because, as with Design Thinking, it seems like well-known practices with new branding. So, as I did then, I’ll lay out what I see and hope others will enlighten me.

As context, during grad school I was in a research group focused on user-centered system design, which included design, processes, and more. I subsequently taught interface design (aka Human Computer Interaction or HCI) for a number of years (while continuing to research learning technology), and made a practice of advocating the best practices from HCI to the ed tech community. What was current at the time were iterative, situated, collaborative, and participatory design processes, so I was pretty familiar with the principles and a fan. That is, really understand the context, design and test frequently, working in teams with your customers.

Fast forward a couple of decades, and the Agile Manifesto puts a stake in the ground for software engineering. And we see a focus on releasable code, but again with principles of iteration and testing, team work, and tight customer involvement. Michael Allen was enthused enough to use it as a spark that led to the Serious eLearning Manifesto.

That inspiration has clearly (and finally) now moved to learning design. Whether it’s Allen’s SAM or Ger Driesen’s Agile Learning Manifesto, we’re seeing a call for rethinking the old waterfall model of design. And this is a good thing (only decades late ;). Certainly we know that working together is better than working alone (if you manage the process right ;), so the collaboration part is a win.

And we certainly need change. The existing approaches we too often see involve a designer being given some documents, access to a SME (if lucky), and told to create a course on X. Sure, there are tools and templates, but they are focused on making particular interactions easier, not on ensuring better learning design. And the person works alone and does the design and development in one pass. There are likely to be review checkpoints, but there’s little testing. There are variations on this, including perhaps an initial collaboration meeting, some SME review, or a storyboard before development commences, but too often it’s largely an independent one-way flow, and this isn’t good.

The underlying issue is that waterfall models, where you specify the requirements in advance and then design, develop, and implement, just don’t work. The problem is that the human brain is pretty much the most complex thing in existence, and when we determine a priori what will work, we don’t take into account the fact that, as with Heisenberg, what we implement will change the system. Iterative development and testing allows the specs to change after initial experience. Several issues arise with this, however.

For one, there’s a question about the right size and scope of a deliverable. Learning experiences, while typically overwritten, do have some stricture that keeps them from having useful intermediate results. I was curious about what made sense. To me it seemed that you could develop your final practice first as a deliverable, and then fill in with the required earlier practice and content resources, and this seemed similar to what was offered up in the chat in response to my question.

The other one is scoping and budgeting the process. I often ask, when talking about game design, how to know when to stop iterating. The usual (and wrong) answer is when you run out of time or money. The right answer would be when you’ve hit your metrics, the ones you should set before you begin that determine the parameters of a solution (and they can be consciously reconsidered as part of the process). The typical answer, particularly for those concerned with controlling costs, is something like a heuristic choice of 3 iterations. Drawing on some other work in software process, I’d recommend creating estimates, but then reviewing them afterward. In the software case, people got much better at estimates, and that could be a valuable extension. But it shouldn’t be any more difficult to estimate, certainly with some experience, than with existing methods.

Ok, so I may be a bit jaded about new brandings on what should already be good practice, but I think anything that helps us focus on developing in ways that lead to quality outcomes is a good thing. I encourage you to work more collaboratively, develop and test more iteratively, and work on discrete chunks. Your stakeholders should be glad you did.

ALIGN!

I’m recognizing that there’s an opportunity to provide more support for implementing the Revolution. So I’ve been thinking through what sort of process might be a way to go about making progress. Given that the core focus is on aligning with how we think, work, and learn (elements we’re largely missing), I thought I’d see whether that could provide a framework. Here’s my first stab, for your consideration:

Assess: here we determine our situation. I’m working on an evaluation instrument that covers the areas and serves as a guide to any gaps between current status and possible futures, but the key element is to ascertain where we are.

Learn: this step is about reviewing the conceptual frameworks available, e.g. our understandings of how we think, work, and learn. The goal is to identify, in detail, possible directions to move in, and to prioritize them. The ultimate outcome is our next step to take, though we may well have a sequence queued up.

Initiate: after choosing a step, here’s where we launch it. This may not be a major initiative. The principle of ‘Trojan mice’ suggests small focused steps, and there are reasons to think small steps make sense. We’ll need to follow the elements of successful change, with planning, communicating, supporting, rewarding, etc.

Guide: then we need to assess how we’re doing and look for interventions needed. This involves knowing what the change should accomplish, evaluating to see if it’s occurring, and implementing refinements as we go. We shouldn’t assume it will go well, but instead check and support.

Nurture: once we’ve achieved a stable state, we want to nurture it on an ongoing basis. This may be documenting and celebrating the outcome, replicating elsewhere, ensuring persistence and continuity, and returning to see where we are now and where we should go next.

Obviously, I’m pushing the ALIGN acronym (as one does), as it helps reinforce the message. Now to put in place tools to support each step. Feedback solicited!

Culture Before Strategy

In an insightful article, Ken Majer (full disclosure, a boss of mine many years ago) has written about the need to have the right culture before executing strategy. And this strikes me as a valuable contribution to thinking about effective change in the transformation of L&D in the Revolution.

I have argued that you can get some benefits from the Revolution without having an optimized culture, but you’re not going to tap into the full potential. Revising formal learning to be truly effective by aligning to how we learn, adding in performance support in ways that augment our cognitive limitations, etc, are all going to offer useful outcomes. I think the optimal execution stuff will benefit, but the ability to truly tap into the network for the continual innovation requires making it safe and meaningful to share. If it’s not safe to Show Your Work, you can’t capitalize on the benefits.

What Ken is talking about here is ensuring you have values and culture in alignment with the vision and mission. And I’ll go further and say that in the long term, those values have to be about valuing people and the culture has to be about working and learning together effectively. I think that’s the ultimate goal when you really want to succeed: we know that people perform best when given meaningful work and are empowered to pursue it.

It’s not easy, for sure. You need to get explicit about your values and how those manifest in how you work. You’ll likely find that some of the implicit values are a barrier, and they’ll require conscious work to address. The change in approach on the part of management and executives, and the organizational restructuring that can accompany this new way of working, aren’t going to happen overnight, and change is hard. But it is increasingly a business necessity, and will only become more so.

So too for the move to a new L&D. You can start working in these ways within your organization, and grow it. And you should. It’s part of the path, the roadmap, to the revolution. I’m working on more bits of it, trying to pull it together more concretely, but it’s clear to me that one thread (as already indicated in the diagrams that accompany the book) is indeed a path to a more enabling culture. In the long term, it will be uplifting, and it’s worth getting started on now.

Accreditation and Compliance Craziness

A continued bane of my existence is the set of requirements put in place for a variety of things. Two in particular are related and worth noting: accreditation and compliance. The way they’re typically construed is barking mad, and we can (and need to) do better.

To start with accreditation: it sounds like a good thing, to make sure that someone issuing some sort of certification has the proper procedures in place. And, done rightly, it would be. However, what we currently see is that, basically, the body says you have to take what the Subject Matter Expert (SME) says as gospel. And this is problematic.

The root of the problem is that SMEs don’t have access to around 70% of what they do, as research at the University of Southern California’s Cognitive Technology group has documented. However, of course, they have access to all they ‘know’. So it’s easy for them to say what learners should know, but not what learners actually should be able to do. And some experts are better than others at articulating this, but the process is opaque to this nuance.

So unless the certification process is willing to allow the issuing institution the flexibility to use a process to drill down into the actual ‘do’, you’re going to get knowledge-focused courses that don’t actually achieve important outcomes. You could do things like incorporating those who depend on the practitioners, and/or using a replicable and grounded process with SMEs that helps them work out what the core objectives need to be; meaningful ones, à la competencies. And a shoutout to Western Governors University for somehow being accredited using competencies!

Compliance is, arguably, worse. Somehow, the amount of time you spend is the important determining factor. Not what you can do at the end, but instead that you’ve done something for an hour. The notion that the amount of time spent relates to ability at this level of granularity is outright maniacal. Time would matter, differently for different folks, but you have to be doing the right thing, and there’s no stricture for that. Instead, if you’ve been subjected to an hour of information, that somehow is going to change your behavior. As if.

Again, competencies would make sense. Determine what you need them to be able to do, and then assess that. If it takes them 30 minutes, that’s OK. If it takes them 5 hours, well, it’s necessary to be compliant.

I’d like to be wrong, but I’ve seen personal instances of both of these, working with clients. I’d really like to find a point of leverage to address this. How can we start having processes that identify the necessary skills, and then use those to determine ability, not time or arbitrary authority? Where can we start to make this necessary change?