So, I’d been unhappy with how hard it was to update my site. It had some problems, but I was afraid to change it because of the repercussions. Well, it turns out that’s not a problem! So what you see is my first stab at a new site. A little background…

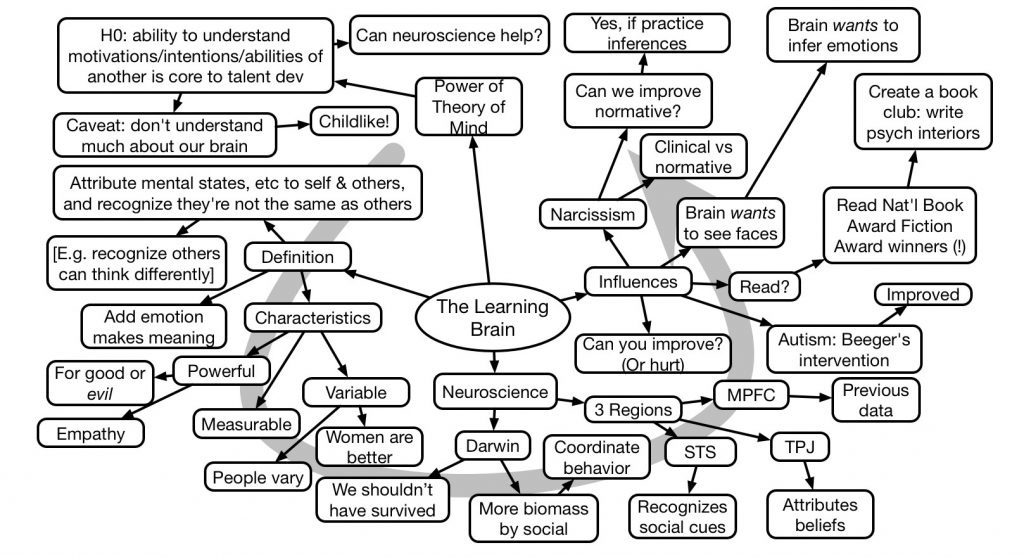

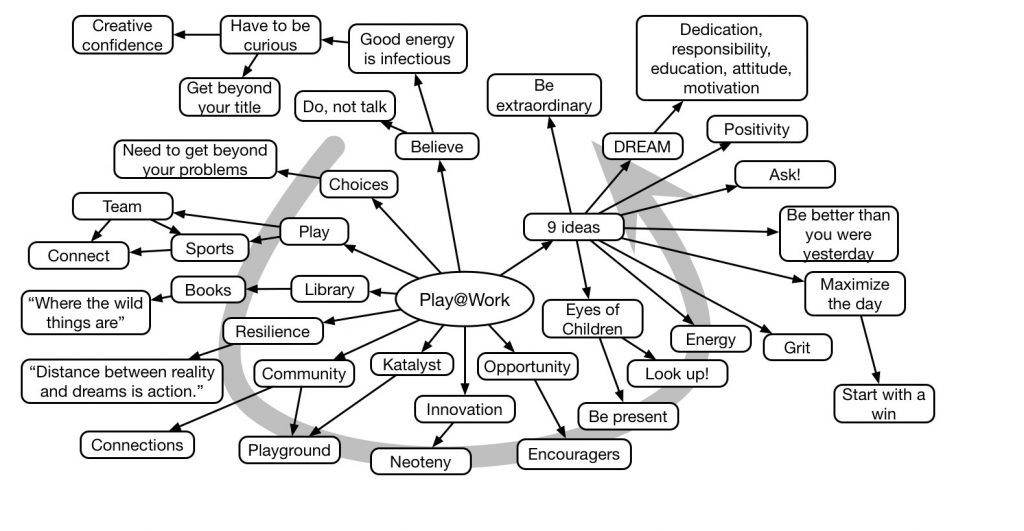

My ISP, a good friend, colleague, and mentor, was making some changes to how my sites are implemented. While my other sites (Quinnovation and the book sites) are all done in a WYSIWYG tool called RapidWeaver, Learnlets is a WordPress site. While I could do a blog in RapidWeaver, I’m afraid I’d lose my decade+ of posts! So, I’ve kept it in WordPress. And that’s handy for updating the site when I’m on the road (e.g. for mindmaps).

However, my ISP, in addition to being a tech guru, is also a security guru. He does tech for a living, and is kind enough to host me as well. To prevent some of the attacks that were happening to WordPress sites (hey, write a script that hammers WordPress vulnerabilities and point it at all the sites you can find), he instituted some security measures. One was that I couldn’t get into the PHP code for my sidebar! It made sense, because if anyone could get access to my admin code, they could not only change the look and feel (easy to fix), they could alter the code and put in malicious stuff. Not good. But…

I’d cobbled it together with cut-and-pasted code, but now I couldn’t edit it without downloading the raw source (once I could find the file in the WordPress hierarchies), editing it, and uploading. I couldn’t even access the sidebar editor! I had a second login for upgrading the site, but it wouldn’t allow access to things I wanted to customize. And I have a bad habit of tinkering! As things happen, I might want to add an image, or…what have you.

So, with this upgrade, I mentioned my problems with the site, and he installed some new themes I could play with. And, I found, it was pretty much click and type to create a new sidebar. Suddenly, it’s easy to change the site, without coding! It’s not perfect, but it’s better for mobile (a friend had complained about that).

As evidence of how easy it is, somehow it seized up in the midst of creating the first draft. I was going to have to re-create the new site! First, it would’ve been easy, as I’d already created most of the graphics and put them in one location. Second, it remembered my previous choices and restored them, so I didn’t have to!

I asked my lad, who has a good digital aesthetic, to give me feedback on two of the options my colleague installed, and he liked this one, with a suggestion on the background (not a stock photo). I’m using the background image from the Designing mLearning book cover, but that can be changed. He didn’t like the idea of the bag of bulbs from the Quinnovation site, as he thought it was ‘stock’. I think it’s aligned with the notion of Quinnovations (or I wouldn’t have used it), and so too with Learnlets, but in honor of his opinion I’m sticking with this for now. And I appreciate that he shared his thinking!

Now that I have a draft up, what’s working and what’s not? I still need to figure out a way to let folks sign up for Learnlets as an email feed (beyond RSS, but through Feedblitz, the service I use), but other than that it’s pretty much the same. And easier to tweak! (E.g., I have subsequently gone to the Feedblitz site, used their tool to create the widget HTML code, and it’s now on the sidebar as well.)

So, the question is, what should I tweak? I’ll definitely listen on usability issues, and I’ll consider aesthetic ones ;). Regardless, I thought I’d share the rationale and the process, because that’s what ‘working out loud’ is, and I think it’s part of the moves we need to see. And in return, I get feedback. So, what doesn’t work for you?