Most of our educational approaches – K12, Higher Ed, and organizational – are fundamentally wrong. What I see in schools, classrooms, and corporations is information presentation and knowledge testing. That isn’t bad in and of itself, except that it won’t lead to new abilities to do! And this bothers me.

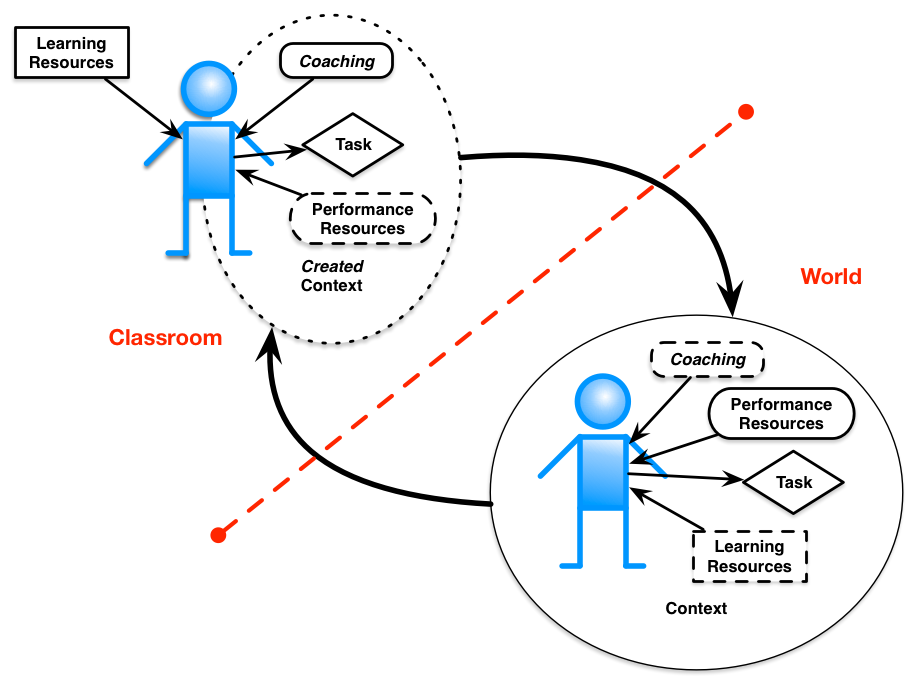

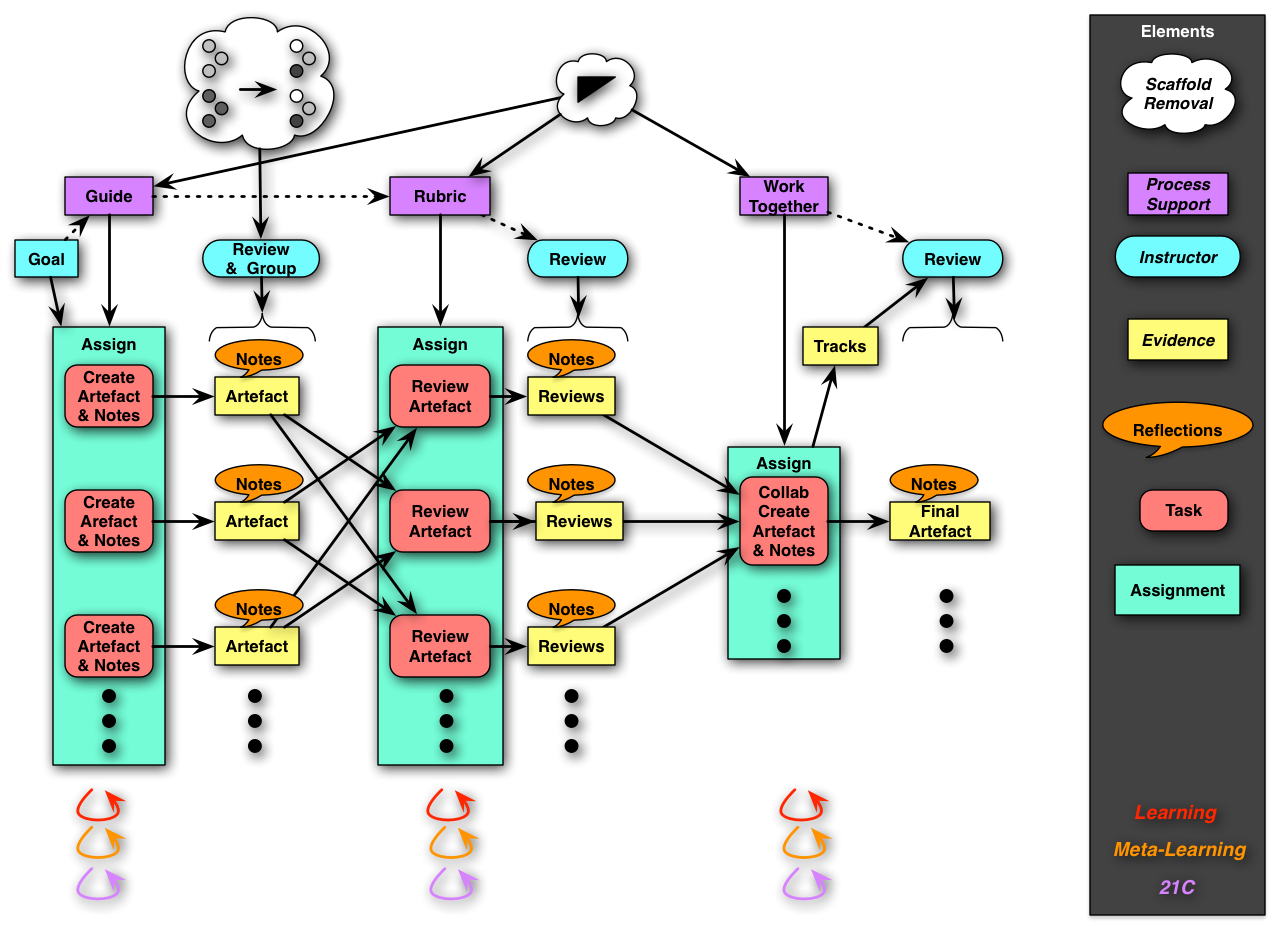

As a consequence, I took a stand, trying to create a curriculum that wasn’t about content, but instead about action. I elaborated on it in some subsequent posts, trying to make clear that the activities could be connected and social, so that you could be developing something over time, and also that the activities produced outputs – both the work and thoughts on the work – that serve as a portfolio.

I was just reading and saw some lovely synergistic thoughts that give me hope. For one, Paul Tough apparently wrote a book on the non-cognitive aspects of successful learners, How Children Succeed, and then followed it up with Helping Children Succeed, which digs into the ignored ‘how’. His point is that elements like ‘grit’ that have been (rightly) touted aren’t developed in the same way cognitive skills are, and yet they can be developed. I haven’t read his book (yet), but in exploring an interview with him, I found out about Expeditionary Learning.

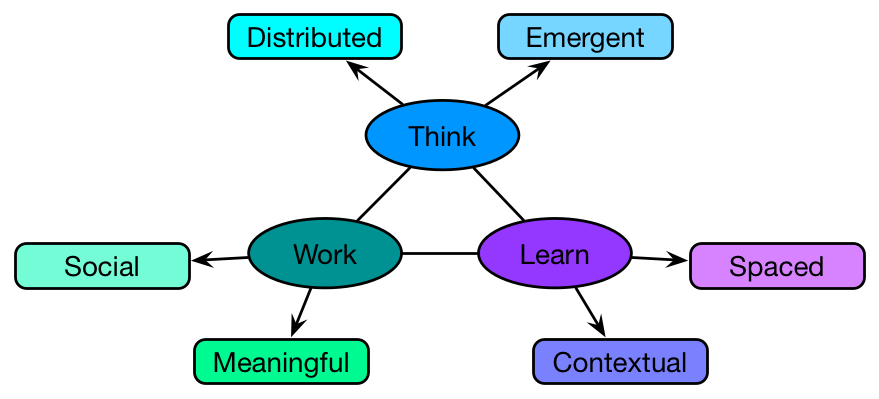

And what Expeditionary Learning has, I’m happy to discover, is an approach based upon deeply immersive projects that integrate curricula and require the learning traits recognized as important. Tough’s point is that the environment matters, and here are schools that are restructured to be learning environments with learning cultures. They’re social and facilitated, with meaningful goals and real challenges. This is about learning, not testing. “A teacher’s primary task is to help students overcome their fears and discover they can do more than they think they can.”

I similarly came across an article by Benjamin Riley, who’s been pilloried as the poster child for opposing personalization. He embraces that label from a particular stance: that learning should be personalized by teachers, not technology. He goes further, talking about having teachers understand learning science and become learning engineers. He also emphasizes social aspects.

Both of these approaches indicate a shift from content regurgitation to meaningful social action, in ways that reflect what’s known about how we think, work, and learn. It’s way past time for this shift, and we should keep striving to do better. I’ll argue that in higher ed and in organizations, we should also become more aware of learning science and focus more on meaningful activity. I encourage you to read the short interview and article, and think about where you see leverage to improve learning. I’m happy to help!