One of the things I’m wrestling with right now is the transition from where we are to where we should be. I’ve already said we have to change; the question is how. A number of us have talked about what the future could and should look like (including, but not limited to, my ITA colleagues), but how do you get there from here? Whether it’s 70:20:10 or some other model, there simply has to be a better way.

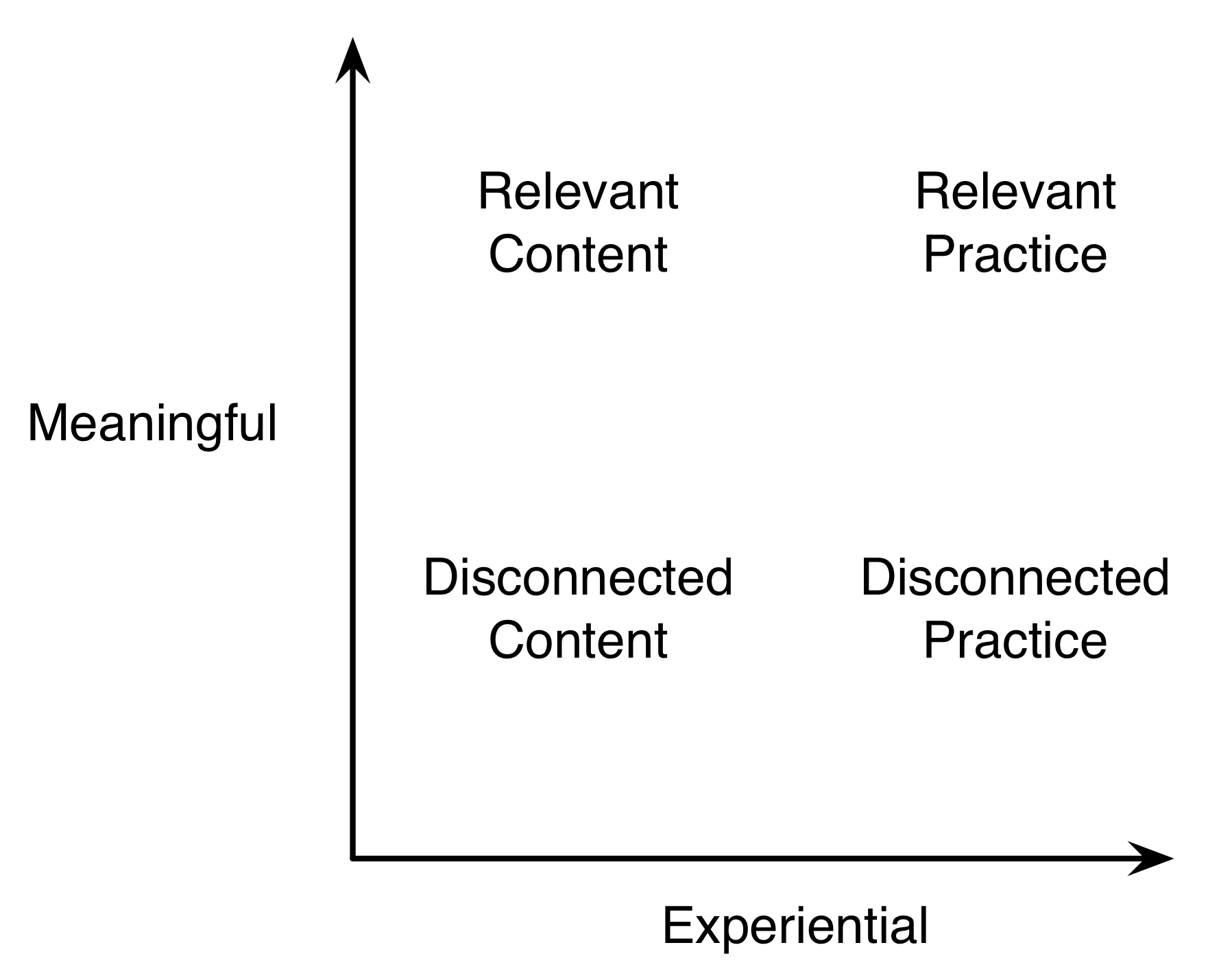

Right now, many organizations have training operations running: people travel to a location to sit down and have knowledge dumped on them, perhaps with a knowledge check at the end (show up and throw up), and smile sheets to evaluate the outcome. And/or we have elearning that is a knowledge dump and test, or virtual classrooms where we do the same spray and pray. And it doesn’t work; it can’t. At the least, it certainly isn’t going to lead to any meaningful outcome.

Granted, this is a gross stereotype. In particular situations the training is hands-on, there’s lots of practice, and it may be blended so the necessary knowledge is learned beforehand. Yes, this can lead to some real skill acquisition.

And don’t even bring up ‘compliance’. Sure, I know you have to do it, and until we get government to stop thinking seat time and content presentation are the same as behavior change, and lawyers to care about more than CYA, we’ll be stuck with it. That, apparently, is just the cost of doing business. I’m talking about the things that matter to your company: your skills, your ability to do.

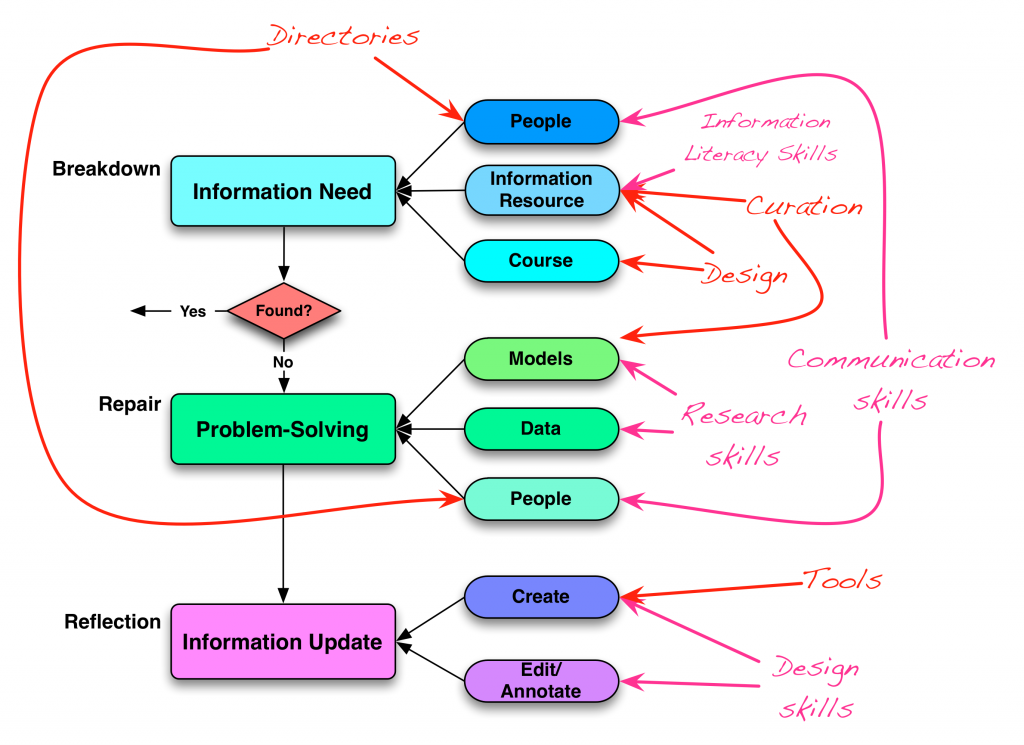

And we need to stop it. I think the first step is to kill all training. When you raise your head and start asking, “But how are we going to meet this need?”, you start thinking anew: how can we go ‘world first’, performance support second, and meaningful practice last? Once you find you’ve exhausted all other options, you might realize that formal training is needed, but it could and should be focused on doing, not telling.

This is only part of the picture, and perhaps I’m being deliberately controversial (who, me?), but it’s one way to be forced to think anew about what we’re doing and how we can help. We have to change, and we need to start figuring out how to get there. I’m open to ideas. I’ve heard some others, but that’s another post. What are your ideas?