When you look into motivation, particularly for learning, certain elements appear again and again. I’ve heard ‘relevance’, ‘meaningfulness’, ‘consequences’, and more. Friston suggests that we learn to minimize surprise. One element I’ve heard about, and wrestled with, is curiosity. It’s certainly aligned with surprise. So I’ve been curious about curiosity.

Tom Malone, in his Ph.D. thesis, talked about intrinsically motivating instruction, and had curiosity along with fantasy and challenge. Here he was talking about helping learners see that their understanding is incomplete. This is in line with the Free Energy Principle suggesting that we learn to do better at matching our expectations to real outcomes.

Yet, to me, curiosity doesn’t seem enough. OK, for education, particularly with young kids, I see it. You may want to set up some mismatch of expectations to drive them to want to learn something. But I believe we need more.

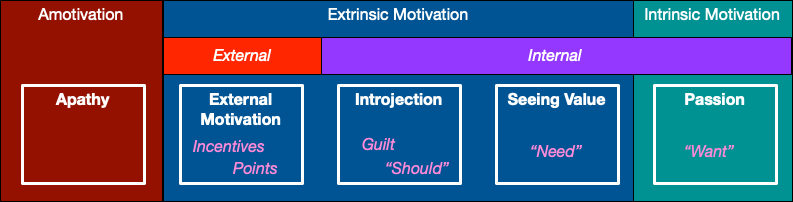

Matt Richter, in his well-done L&D conference presentation on motivation, discussed self-determination theory. He had a nice diagram (my revision here) that distinguished various forms of motivation. Starting from amotivated, that is, not motivated at all, there were levels of external motivation and then internal motivation. The ultimate is what he termed intrinsic motivation: someone wanting it purely out of their own interest. Short of that, you have incentive-driven behavior (gamification), then what you’re guilted into (technically termed introjection), and then the point where you see value in it for yourself (e.g. WIIFM, ‘what’s in it for me’).

While intrinsic motivation, or passion, sounds good, I think having someone be passionate about something is a goal too far. Instead, I see our goal as helping people realize that they need it, even if they don’t ‘want’ it. That, to me, is where consequences kick in. If we can show them the consequences of having the skills, or of lacking them, and do this for the right audience and skills, we can at least ensure that they’re in the ‘value’ dimension.

So, my take is that while we should value curiosity, we may not be able to ensure it. With good analysis and design, though, we can at least get learners to see the value. That’s my current take after being curious about curiosity. I’d like to hear yours!