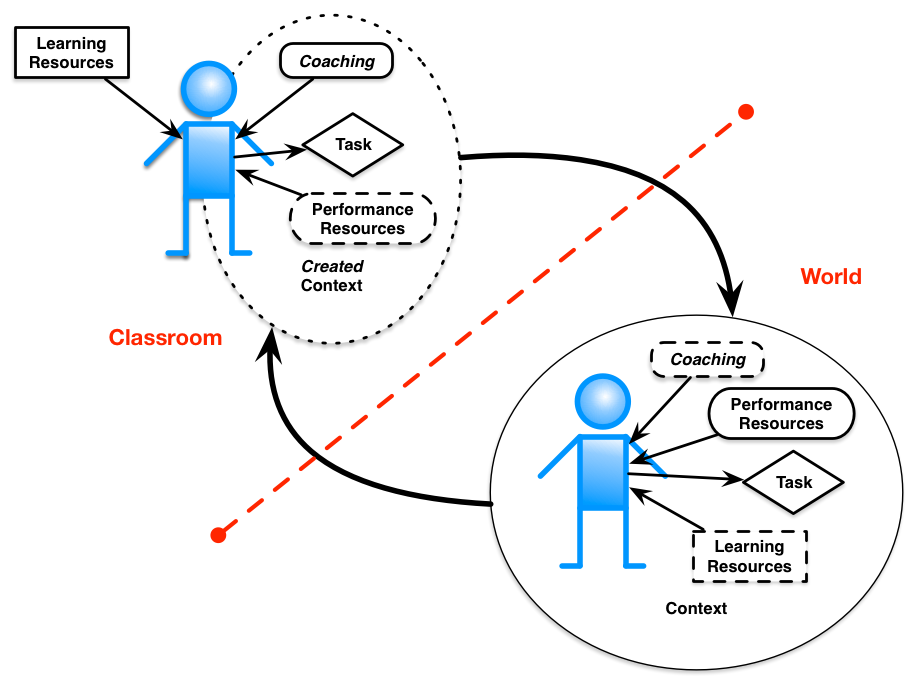

Yesterday, I clarified what I meant about microlearning. Earlier, I wrote about designing microlearning, but what I was really talking about was the design of spaced learning. So how should you design the type of microlearning I really feel is valuable?

To set the stage, here we’re talking about layering learning on performance in a context. However, it’s more than just performance support. Performance support would be providing a set of steps (in whatever ways: series of static photos, video, etc.) or supporting those steps (checklist, lookup table, etc.). And again, this is a good thing, but microlearning, I contend, is more.

To make it learning, what you really need is to support developing an ability to understand the rationale behind the steps, to support adapting the steps in different situations. Yes, you can do this in performance support as well, but here we’re talking about models.

What (causal) models give us is a way to explain what has happened, and predict what will happen. When we make these available around performing a task, we unpack the rationale. We want to provide an understanding behind the rote steps, to support adaptation of the process in different situations. We also provide a basis for regenerating missing steps.

Now, we can also be providing examples, e.g. how the model plays out in different contexts. If what the learner is doing now can change under certain circumstances, elaborating how the model guides performing differently in different contexts provides the ability to transfer that understanding.

The design process, then, would be to identify the model guiding the performance (e.g. why we do things in this order). It might be an interplay between structural constraints (we have to remove this screw first because…) and causal ones (this is the chemical that catalyzes the process). We need to identify these models and determine how to represent them.

Once we’ve identified the task, and the associated models, we then need to make these available through the context. And here’s why I’m excited about augmented reality: it’s an obvious way to make the model visible. Quite simply, it can be layered on top of the task itself! Imagine that the workings behind what you’re doing are available if you want. That you can explore more as you wish, or not, and simply accept the magic ;).

The actual task is the practice, but I’m suggesting providing a model explaining why it’s done this way is the minimum, and providing examples for a representative sample of other appropriate contexts provides support when it’s a richer performance. Delivered, to be clear, in the context itself. Still, this is what I think really constitutes microlearning. So what say you?