As I did with Learnnovators, I’ve also done a series of posts with Litmos, in this case a year’s worth. Unlike the other series, which focused on deeper eLearning design, these aren’t linked thematically; instead, they cover a wide range of topics that we mutually agreed were personally interesting and of interest to their audience.

So, we have presentations on:

- Blending learning

- Performance Support

- mLearning: Part 1 and Part 2

- Advanced Instructional Design

- Games and Gamification

- Courses in the Ecosystem

- L&D and the Bigger Picture

- Measurement

- Reviewing Design Processes

- New Learning Technologies

- Collaboration

- Meta-Learning

If any of these topics are of interest, I welcome you to check them out.

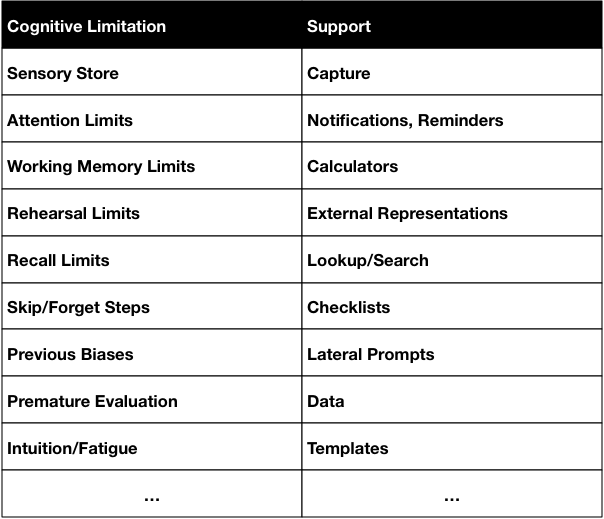

So, for instance, our senses capture incoming signals in a sensory store, which has the interesting property of almost unlimited capacity, but only for a very short time. There is no way all of it can get into our working memory, so what we attend to is what we have access to. As a result, we can’t accurately recall everything we perceive. Technology (cameras, microphones, sensors), however, can record it all perfectly, so making capture capabilities available is a powerful support.

This is similar to the way I’d seen Palm talk about the