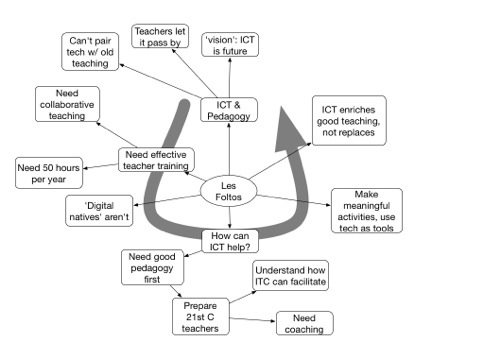

Les Foltos made a passionate and eloquent argument for good pedagogy first.

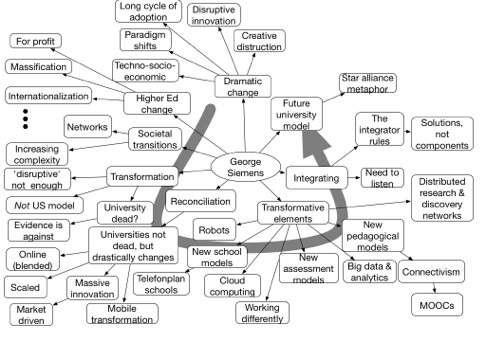

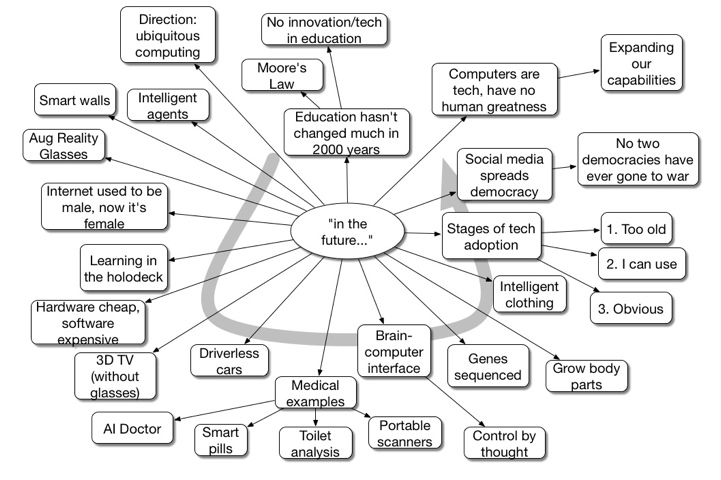

George Siemens #EDGEX2012 Mindmap

Reimagining Learning

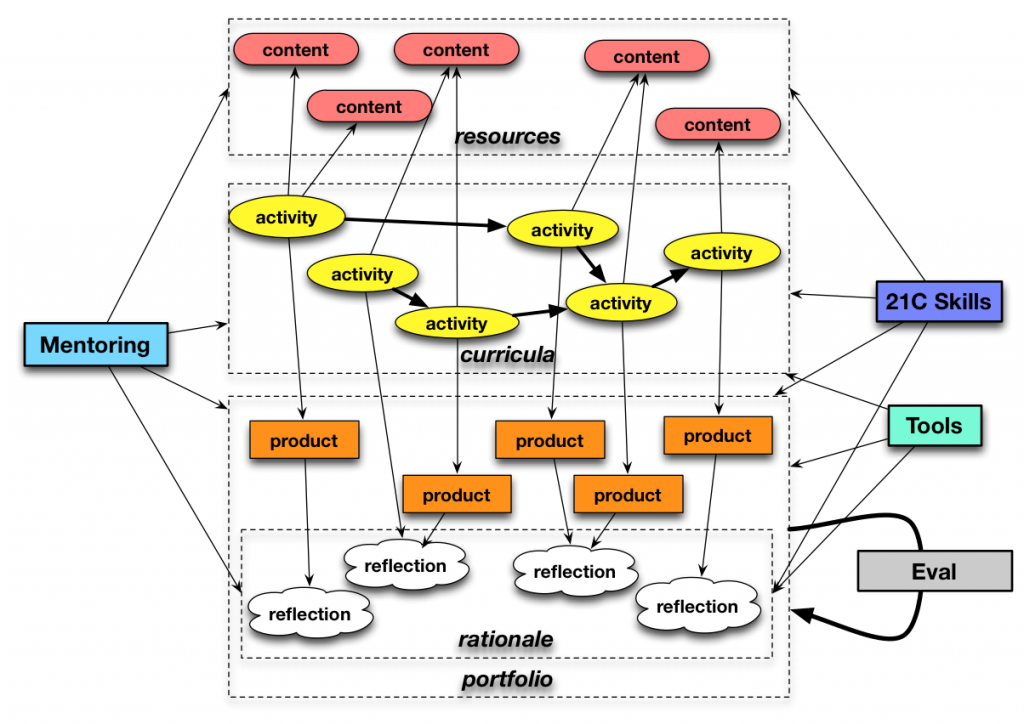

On the way to the recent Up To All Of Us unconference (#utaou), I hadn’t planned a personal agenda. However, I was going through the diagrams that I’d created on my iPad, and discovered one that I’d frankly forgotten. Which was nice, because it allowed me to review it with fresh eyes, and it resonated. And I decided to put it out at the event to get feedback. Let me talk you through it, because I welcome your feedback too.

Up front, let me state at least part of the motivation. I’m trying to capture a rethinking of education, or formal learning. I’m tired of anything that allows folks to think that knowledge dump and test is going to lead to meaningful change. I’m also trying to ‘think out loud’ for myself, and to start getting more concrete about learning experience design.

Let me start with the second row from the top. I want to start thinking about a learning experience as a series of activities, not a progression of content. These can be a rich suite of things: engagement with a simulation, a group project, a museum visit, an interview, anything you might choose for an individual to engage in to further their learning. And, yes, it can include traditional things: e.g. read this chapter.

This, by the way, has a direct relation to Project Tin Can, a proposal to supersede SCORM, allowing a greater variety of activities: Actor – Verb – Object, or I – did – this. (For all I can recall, the origin of the diagram may have been an attempt to place Tin Can in a broad context!)
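To make the Actor – Verb – Object idea concrete, here is a minimal sketch of an “I – did – this” record. Its shape loosely follows the statement format of the Experience API (xAPI), the name Tin Can later went by; the learner, email address, and activity IRI are hypothetical, and nothing here validates the statement or sends it to a learning record store.

```python
import json

# Verbs from the ADL vocabulary share this IRI prefix (e.g. "completed", "attempted").
ADL_VERBS = "http://adlnet.gov/expapi/verbs/"


def make_statement(email: str, name: str, verb: str,
                   activity_id: str, activity_name: str) -> dict:
    """Build an Actor - Verb - Object ("I - did - this") statement as a plain dict."""
    return {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": ADL_VERBS + verb, "display": {"en-US": verb}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,  # any IRI that identifies the activity
            "definition": {"name": {"en-US": activity_name}},
        },
    }


# Hypothetical learner and activity, for illustration only.
stmt = make_statement("learner@example.com", "A. Learner", "completed",
                      "http://example.com/activities/museum-visit",
                      "Museum visit")
print(json.dumps(stmt, indent=2))
```

The point is that the object can be any activity (a museum visit, an interview, a sim run), not just a content page.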

Around these activities, there are a couple of things. For one, content is accessed on the basis of the activities, not the other way around. Also, the activities produce products, and also reflections.

For the activities to be maximally valuable, they should produce output. Using a sim could produce a trace of the learner’s exploration. A group project could provide a documented solution, or a concept-expression video or performance. An interview could produce an audio recording. These products become portfolio items going forward, as well as assessable items. The assessment could be by self, peer, or mentor.

However, in the context of ‘make your thinking visible’ (aka ‘show your work’), there should also be reflections or cognitive annotations. The underlying thinking needs to be visible for inspection. This is also part of your portfolio, and assessable. This is also, however, where the real opportunity lies: to recognize where the learner is, or is not, getting the content, and to detect opportunities for assistance.
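One way to picture the activity, product, and reflection structure is as a small data model. This is a hypothetical sketch, not a system from the diagram: every activity records both a product and a reflection, either can be assessed by self, peer, or mentor, and reflections nobody has looked at yet are flagged as the places to check whether help is needed.

```python
from dataclasses import dataclass, field
from typing import List, Literal, Tuple

Kind = Literal["product", "reflection"]
Assessor = Literal["self", "peer", "mentor"]


@dataclass
class PortfolioItem:
    activity: str
    kind: Kind
    artifact: str  # e.g. a file name or URL (hypothetical)
    assessments: List[Tuple[str, str]] = field(default_factory=list)  # (assessor, comment)


@dataclass
class Portfolio:
    learner: str
    items: List[PortfolioItem] = field(default_factory=list)

    def record(self, activity: str, product: str, reflection: str) -> None:
        """Every activity yields a product plus a reflection on the thinking behind it."""
        self.items.append(PortfolioItem(activity, "product", product))
        self.items.append(PortfolioItem(activity, "reflection", reflection))

    def assess(self, activity: str, kind: Kind, assessor: Assessor, comment: str) -> None:
        for item in self.items:
            if item.activity == activity and item.kind == kind:
                item.assessments.append((assessor, comment))
                return
        raise KeyError(f"no {kind} recorded for {activity!r}")

    def awaiting_feedback(self) -> List[str]:
        """Reflections nobody has reviewed yet: where to look for needed assistance."""
        return [i.activity for i in self.items
                if i.kind == "reflection" and not i.assessments]


p = Portfolio("sam")
p.record("group project", "solution.pdf", "notes-on-approach.md")
p.record("interview", "interview.mp3", "what-i-learned.md")
p.assess("group project", "reflection", "mentor", "Good catch on the constraint.")
print(p.awaiting_feedback())  # ['interview']
```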

The learner is driven to content resources (audios, videos, documents, etc) by meaningful activity. This is in opposition to the notion that content dump happens before meaningful action. However, prior activities can ensure that learners are prepared to engage in the new activities.

The content could be pre-chosen, or the learners could be scaffolded in choosing appropriate materials. The latter is an opportunity for meta-learning. Similarly, the choice of product could be determined, or up to learner/group choice, and again an opportunity for learning cross-project skills. Helping learners create useful reflections is valuable (I recall guiding honours students to take credit for the work they’d done; they were blind to much of their own hard work!).

When I presented this to the groups, there were several questions asked via post-its on the picture I hand-drew. Let me address them here:

What scale are you thinking about?

This needs unpacking. What goes into activity design is a whole separate area. And learning experience design may well play a role beneath this level. However, the granularity of the activities is at issue. I think about this at several scales, from an individual lesson plan to a full curriculum. The choice of evaluation should be competency-based, assessed by rubrics, even jointly designed ones. There is a lot of depth that is linked to this.
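As a rough illustration of competency-based assessment by rubric, here is a sketch with hypothetical criteria and level descriptors (the kind of thing learners and mentors might write jointly). The design choice is that competency requires every criterion to reach the bar, so a strong product cannot average away a weak reflection.

```python
# Hypothetical rubric: each criterion lists level descriptors, lowest to highest.
RUBRIC = {
    "problem framing": ["restates the task", "identifies constraints",
                        "reframes around root causes"],
    "solution": ["partial", "workable", "robust and justified"],
    "reflection": ["describes what was done", "explains why",
                   "identifies what to do differently"],
}


def competent(ratings: dict, required_level: int = 2) -> bool:
    """Every criterion must be rated (1-based level) and reach required_level."""
    missing = set(RUBRIC) - set(ratings)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    for criterion, descriptors in RUBRIC.items():
        if not 1 <= ratings[criterion] <= len(descriptors):
            raise ValueError(f"{criterion}: level out of range")
    return all(ratings[c] >= required_level for c in RUBRIC)


print(competent({"problem framing": 3, "solution": 2, "reflection": 2}))  # True
print(competent({"problem framing": 3, "solution": 3, "reflection": 1}))  # False
```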

How does this differ from a traditional performance-based learning model?

I hadn’t heard of performance-based learning. Looking it up, there seems to be considerable overlap. There is also overlap with outcome-based learning, problem-based learning, or service learning, and similarly Understanding By Design. It may not be more; I haven’t yet done the side-by-side comparison. It’s scaling it up, and arguably a different lens, and maybe more, or not. Still, I’m trying to carry it to more places, and help provide ways to think anew about instruction and formal education.

An interesting aside, for me, is that this does segue to informal learning. That is, you, as an adult, choose certain activities to continue to develop your ability in certain areas. Taking this framework provides a reference for learners to take control of their own learning, and develop their ability to be better learners. Or so I would think, if done right. Imagine the right side of the diagram moving from mentor to learner control.

How much is algorithmic?

That really depends. Let me answer that in conjunction with this other comment:

Make a convert of this type of process out of a non-tech traditional process and tell that story…

I can’t do that now, but one of the attendees suggested this sounded a lot like what she did in traditional design education. The point is that this framework is independent of technology. You could be assigning studio and classroom and community projects, and getting back write-ups, performances, and more. No digital tech involved.

There are definite ways in which technology can assist: providing tools for content search, and product and reflection generation, but this is not about technology. You could be algorithmic in choosing from a suite of activities by a set of rules governing recommendations based upon learner performance, content available, etc. You could also be algorithmic in programming some feedback around tech-traversal. But that’s definitely not where I’m going right now.
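For the algorithmic option, a minimal rule-based sketch might look like the following. The rules, thresholds, and activity names are all hypothetical: skip activities whose supporting content isn’t ready, skip competencies already demonstrated, and otherwise pick activities whose difficulty matches the learner’s current score.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Activity:
    name: str
    competency: str
    difficulty: int       # 1 = introductory ... 3 = advanced
    content_ready: bool   # are the supporting resources available?


def recommend(activities: List[Activity], scores: Dict[str, float],
              mastery: float = 0.7) -> List[str]:
    """Rule-based pick of next activities from learner performance and available content."""
    picks = []
    for a in activities:
        if not a.content_ready:
            continue                       # rule 1: skip what we can't yet support
        score = scores.get(a.competency, 0.0)
        if score >= mastery:
            continue                       # rule 2: competency already demonstrated
        target = 1 if score < 0.4 else 2   # rule 3: stretch, but not too far
        if a.difficulty == target:
            picks.append(a.name)
    return picks


acts = [
    Activity("Read chapter 3", "diagnosis", 1, True),
    Activity("Troubleshooting sim", "diagnosis", 2, True),
    Activity("Client interview", "communication", 2, False),
    Activity("Group design project", "design", 3, True),
]
print(recommend(acts, {"diagnosis": 0.5, "communication": 0.2, "design": 0.9}))
# ['Troubleshooting sim']
```

Simple, inspectable rules like these keep the mentor (or, later, the learner) able to see why something was suggested.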

Similarly, I’m going to answer two other questions together:

How can I look at the path others take? and How can I see how I am doing?

The portfolio is really the answer. You should be getting feedback on your products, and seeing others’ feedback (within limits). This is definitely not intended to be individual, but instead hopefully it could be in a group, or at least some of the activities would be (e.g. commenting on blog posts, participating in a discussion forum, etc). In a tech-mediated environment, you could see others’ (anonymized) paths, access your feedback, and see traces of others’ trajectories.
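Seeing others’ paths without exposing who they are could be sketched like this, under the assumption that each learner’s path is simply an ordered list of activity names. Salted hashing gives stable pseudonyms, which is pseudonymization rather than full anonymity; the salt and learner names are hypothetical.

```python
import hashlib
from typing import Dict, List


def pseudonym(learner_id: str, salt: str) -> str:
    """Stable label that hides the real id (pseudonymization, not full anonymity)."""
    digest = hashlib.sha256((salt + learner_id).encode("utf-8")).hexdigest()
    return "learner-" + digest[:8]


def visible_paths(paths: Dict[str, List[str]], viewer: str,
                  salt: str) -> Dict[str, List[str]]:
    """The viewer sees their own path as 'you' and everyone else's under a pseudonym."""
    return {
        ("you" if learner == viewer else pseudonym(learner, salt)): list(steps)
        for learner, steps in paths.items()
    }


paths = {"ana": ["sim", "forum post", "group project"], "ben": ["chapter 3", "sim"]}
view = visible_paths(paths, viewer="ana", salt="course-2012")  # hypothetical salt
print(view["you"])  # ['sim', 'forum post', 'group project']
```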

The real question is: is this formulation useful? Does it give you a new and useful way of thinking about designing learning, and supporting learning?

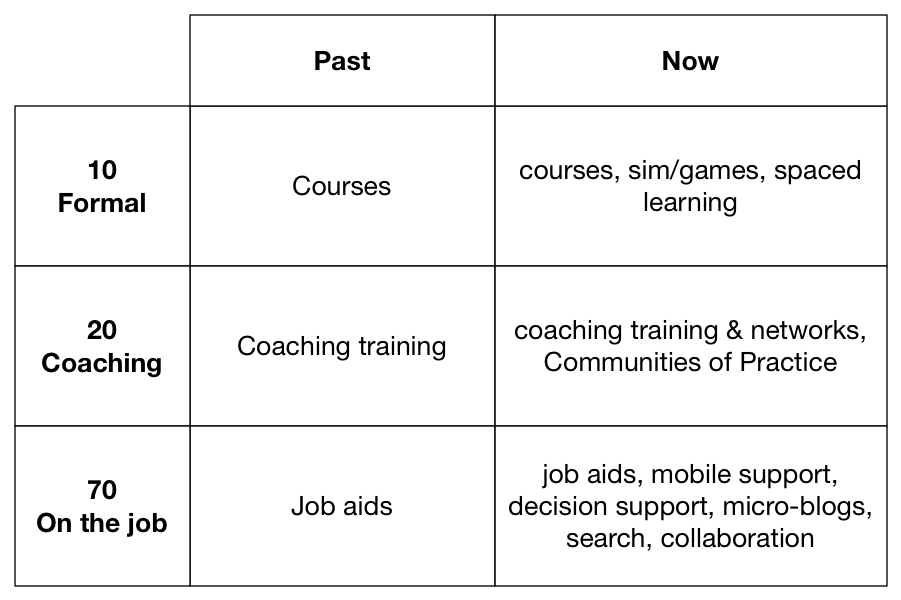

70:20:10 Tech

At the recent Up To All Of Us event (#utaou), someone asked about the 70:20:10 model. As you might expect, I mentioned that it’s a framework for thinking about supporting people at work, but it also occurred to me that there might be a reason folks have not addressed the 90, because, in the past, there might have been little that they could do. But that’s changed.

In the past, there was little that could be done beyond offering courses (including courses on how to coach) and making job aids. The technology wasn’t advanced enough. But that’s changed.

What has changed are several things. One is the rise of social networking tools: blogs, micro-blogs, wikis, and more. The other is the rise of mobile. Together, we can be supporting the 90 in fairly rich ways.

For the 20, coaching and mentoring, we can start delivering that wherever needed, via mobile. Learners can ask for, or even be provided, support more closely tied to their performance situations regardless of location. We can also have a richer suite of coaching and mentoring happening through Communities of Practice, where anyone can be a coach or mentor, and be developed in those roles, too. Learner activity can be tracked, as well, leaving traces for later review.

For the 70, we can first of all start providing rich job aids wherever and whenever, including a suite of troubleshooting information and even interactive wizards. We also can have help on tap freed of barriers of time and distance. We can look up information as well, if our portals are well-designed. And we can find people to help, whether with information or collaboration.
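An interactive troubleshooting wizard is, at heart, a small decision tree walked one question at a time. Here is a minimal sketch with a hypothetical device and hypothetical fixes; note that the last branch hands off to people, not just to more content.

```python
from typing import Dict, List, Tuple

# Hypothetical tree: node -> (question, {answer: next node}).
# Nodes whose name starts with "FIX:" are final recommendations.
WIZARD: Dict[str, Tuple[str, Dict[str, str]]] = {
    "start": ("Does the device power on?", {"yes": "screen", "no": "power"}),
    "power": ("Is the power cable firmly connected?",
              {"yes": "FIX: swap in a known-good power supply",
               "no": "FIX: reconnect the cable and try again"}),
    "screen": ("Does the screen show an error code?",
               {"yes": "FIX: look the code up in the error table",
                "no": "FIX: ask the community of practice"}),
}


def run_wizard(answers: List[str]) -> Tuple[List[str], str]:
    """Walk the tree with the user's answers; return the questions asked and the fix reached."""
    node, asked = "start", []
    for answer in answers:
        question, branches = WIZARD[node]
        asked.append(question)
        node = branches[answer]
        if node.startswith("FIX:"):
            return asked, node
    raise ValueError("not enough answers to reach a recommendation")


print(run_wizard(["no", "no"])[1])  # FIX: reconnect the cable and try again
```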

The point is that we no longer have limits in the support we can provide, so we should stop having limits in the help we *do* provide.

Yes, there could still be other reasons: folks in the L&D unit know how to do courses, so that’s their hammer, making everything look like a nail; or they don’t see it as their responsibility (to which I respond “Who else? Are you going to leave it to IT? Operations?”). That *has* to change. We can, and should, do more. Are you?

Changing the Book game

I was boarding a plane away from home as Apple’s announcement was happening, so I haven’t had the chance to dig into the details as I normally would, but just the news itself shows Apple is taking on yet another industry. What Apple did to the music industry is a closer analogy to what is happening here than what they did to the phone industry, however.

As Apple recreated the business of music publishing, they’re similarly shifting textbook publishing. They’ve set a price cap (ok, perhaps just for high school, to begin), and a richer target product. In this case, however, they’re not revolutionizing the hardware, but the user experience, as their standard has a richer form of interaction (embedded quizzes) than the latest ePub standard they’re building upon. This is a first step towards the standard I’ve argued for, with rich embedded interactivity (read sims/games).

Apple has also democratized the book creation business, with authoring tools for anyone. They have kind of done that with GarageBand, but this is easier. Publishers will have the edge on homebrew for now, with a greater infrastructure to accommodate different state standards, and media production capabilities or relationships. That may change, however.

Overall, it will be interesting to see how this plays out. Apple, once again making life fun.

Performance Architecture

I’ve been using the tag ‘learning experience design strategy’ as a way to think about not taking the same old approaches of events über alles. The fact of the matter is that we’ve quite a lot of models and resources to draw upon, and we need to rethink what we’re doing.

The problem is that it goes far beyond just a more enlightened instructional design, which of course we need. We need to think of content architectures, blends between formal and informal, contextual awareness, cross-platform delivery, and more. It involves technology systems, design processes, organizational change, and more. We also need to focus on the bigger picture.

Yet the vision driving this is, to me, truly inspiring: augmenting our performance in the moment and developing us over time in a seamless way, not in an idiosyncratic and unaligned way. And it is strategic, but I’m wondering if architecture doesn’t better capture the need for systems and processes as well as revised design.

This got triggered by an exercise I’m engaging in, thinking how to convey this. It’s something along the lines of:

The curriculum’s wrong:

- it’s not knowledge objectives, it’s skills

- it’s not current needs, it’s adapting to change

- it’s not about being smart, it’s about being wise

The pedagogy’s wrong:

- it’s not a flood, but a drip

- it’s not knowledge dump, it’s decision-making

- it’s not expert-mandated, instead it’s learner-engaging

- it’s not ‘away from work’, it’s in context

The performance model is wrong:

- it’s not all in the head, it’s distributed across tools and systems

- it’s not all facts and skill, it’s motivation and confidence

- it’s not independent, it’s socially developed

- it’s not about doing things right, it’s about doing the right thing

The evaluation is wrong:

- it’s not seat time, it’s business outcomes

- it’s not efficiency, at least until it’s effective

- it’s not norm-referenced, it’s criterion-referenced
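The difference between norm-referenced and criterion-referenced evaluation shows up clearly with a handful of made-up scores. Norm referencing passes a fixed share of the group regardless of how well they did; criterion referencing passes whoever meets the bar. The 50% pass fraction and the 0.8 criterion are arbitrary assumptions.

```python
from typing import Dict, Set


def norm_referenced(scores: Dict[str, float], pass_fraction: float = 0.5) -> Set[str]:
    """Pass the top fraction of the group, whatever their absolute performance."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    cutoff = max(1, round(len(ranked) * pass_fraction))
    return set(ranked[:cutoff])


def criterion_referenced(scores: Dict[str, float], criterion: float = 0.8) -> Set[str]:
    """Pass everyone who meets the criterion, however many that is."""
    return {name for name, s in scores.items() if s >= criterion}


scores = {"ana": 0.95, "ben": 0.90, "cai": 0.85, "dee": 0.60}  # hypothetical
print(sorted(norm_referenced(scores)))       # ['ana', 'ben']
print(sorted(criterion_referenced(scores)))  # ['ana', 'ben', 'cai']
```

Here “cai” meets the standard but fails the norm-referenced cut simply because of who else was in the group.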

So what does this look like in practice? I think it’s about a support system organized so that it recognizes what you’re trying to do, and provides possible help. On top of that, it’s about showing where the advice comes from, developing understanding as an additional light layer. Finally, on top of that, it’s about making performance visible and looking at the performance across the previous level, facilitating learning to learn. And, the underlying values are also made clear.

It doesn’t have to get all that right away. It can start with just better formal learning design, and a bit of content granularity. It certainly starts with social media involvement. And adapting the culture in the org to start developing meta-learning. But you want to have a vision of where you’re going.

And what does it take to get here? It needs a new design process that starts from the performance gap and looks at root causes. The design process then considers what sort of experience would both achieve the end goal and address the gaps in the performer equation (including both technology aids and knowledge and skill upgrades). It also considers how that develops over time, recognizing the capabilities of both humans and technology, with a value set that emphasizes letting humans do the interesting work. It’ll also take models of content, users, context, and goals, with a content architecture and a flexible delivery model, along with rich pictures of what a learning experience might look like and what learning resources could be. And it needs an implementation process that is agile, iterative, and reflective, with contextualized evaluation. At least, that sounds right to me.
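Starting from the performance gap and its root causes, rather than from a course, can be sketched as a tiny planning step. The cause categories, the support each maps to, and the example numbers are all hypothetical; the point is only that the intervention follows from the cause, and that a technology aid is as legitimate an answer as a learning experience.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical mapping from root cause to the kind of support that addresses it.
SUPPORT_FOR_CAUSE = {
    "missing knowledge or skill": "learning experience: practice with feedback",
    "information not at hand": "technology aid: job aid, lookup, or wizard",
    "low confidence": "coaching or mentoring",
    "no one to ask": "community of practice",
}


@dataclass
class Gap:
    task: str
    desired: float   # target performance, e.g. share of cases handled correctly
    current: float
    causes: List[str] = field(default_factory=list)

    @property
    def size(self) -> float:
        return self.desired - self.current


def plan(gaps: List[Gap]) -> List[Tuple[str, List[str]]]:
    """Address the largest real gaps first, choosing support by root cause."""
    steps = []
    for g in sorted(gaps, key=lambda g: g.size, reverse=True):
        if g.size <= 0:
            continue  # no gap: nothing to design
        steps.append((g.task, [SUPPORT_FOR_CAUSE.get(c, "investigate further")
                               for c in g.causes]))
    return steps


gaps = [
    Gap("quote prices", 0.95, 0.70, ["information not at hand"]),
    Gap("handle escalations", 0.90, 0.50, ["low confidence", "no one to ask"]),
    Gap("log calls", 0.90, 0.95),
]
for task, supports in plan(gaps):
    print(task, "->", supports)
```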

Now, what sounds right to you: learning experience design strategy, performance system design, performance architecture, <your choice here>?

Learning 2011 Day 2 Morning Mindmaps

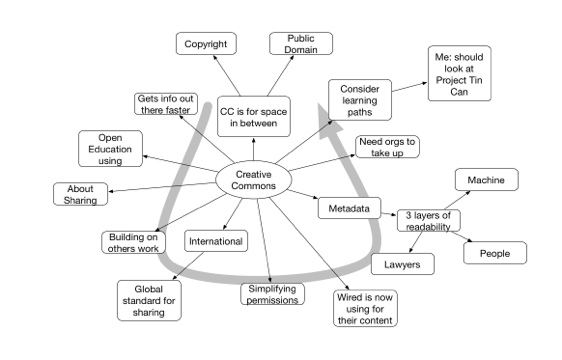

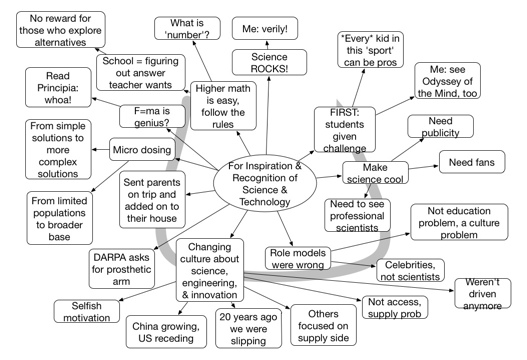

This morning Elliott interviewed Cathy Casserly from Creative Commons and Dean Kamen. Cathy was a passionate advocate for openness and sharing. She talked about going further into learning, and I was reminded about Project Tin Can, making a more general learning path. Dean recounted his interesting childhood and then launched into his inspiring project to make science cool again.

Michio Kaku Keynote Mindmap

Don’t be Complacent and Content

Yesterday I attended SDL’s DITAFest. While it’s a vendor-driven show, there were several valuable presentations and useful information to help get clearer about designing content. And we do need to start looking at the possibilities on tap. Beyond deeper instructional design (tapping into both emotion and effective instruction, not the folk tales we tell about what good design is), we need to start looking at content models and content architecture.

Let me put this a bit in context. When I talk about the Performance Ecosystem, I’m talking about a number of things: improved instructional design, performance support, social learning and mobile. But the “greater integration” step is one that both yields immediate benefits, and sets the stage for some future opportunities. Here we’re talking about investing in the underlying infrastructure to leverage the possibilities of analytics, semantics and more, and content architecture is a part of that.

So DITA is Darwin Information Typing Architecture, and what it is about is structuring content a bit. It’s an XML-based approach developed at IBM that lets you not only separate out content from how it’s expressed, but lets you add some semantics on top of it. This has been mostly used for material like product descriptions, such as technical writers produce, but it can be used for white papers, marketing communications, and any other information. Like eLearning. However, the elearning use is still idiosyncratic; one of the top DITA strategy consultants told me that the Learning and Training committee’s contribution has not yet been sufficient.
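To show what separating content from its expression buys you, here is a minimal sketch: one DITA-style concept topic (title, keyword metadata, body paragraphs) parsed with Python’s standard XML library and rendered two different ways. The topic content is hypothetical and the DOCTYPE is omitted; a real pipeline would typically use a DITA toolchain such as the DITA Open Toolkit rather than hand-rolled rendering.

```python
import html
import xml.etree.ElementTree as ET

# A minimal DITA-style concept topic (DOCTYPE omitted; content is hypothetical).
TOPIC = """<concept id="reset-password">
  <title>Resetting a password</title>
  <prolog>
    <metadata>
      <keywords><keyword>account</keyword><keyword>security</keyword></keywords>
    </metadata>
  </prolog>
  <conbody>
    <p>Open the account settings page.</p>
    <p>Choose <b>Reset password</b> and follow the prompts.</p>
  </conbody>
</concept>"""


def parse_topic(xml_text: str) -> dict:
    """Pull out content and semantics, leaving presentation decisions for later."""
    root = ET.fromstring(xml_text)
    return {
        "id": root.get("id"),
        "title": root.findtext("title"),
        "keywords": [k.text for k in root.iter("keyword")],
        "paragraphs": [" ".join("".join(p.itertext()).split())
                       for p in root.find("conbody").iter("p")],
    }


def render_page(topic: dict) -> str:
    """One expression of the content: a full web page fragment."""
    body = "".join(f"<p>{html.escape(p)}</p>" for p in topic["paragraphs"])
    return f"<h1>{html.escape(topic['title'])}</h1>{body}"


def render_mobile_tip(topic: dict) -> str:
    """Another expression of the same content: a one-line mobile tip."""
    return f"{topic['title']}: {topic['paragraphs'][0]}"


t = parse_topic(TOPIC)
print(render_mobile_tip(t))  # Resetting a password: Open the account settings page.
```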

The important point, however, is that articulating content has real benefits. A panel of implementers mentioned reduced tool costs, savings from reduced redundancy, and decreased time to create and maintain information. There were also strategic benefits in breaking down silos and finding common ground with other groups in the organization. The opportunity to wrap quality standards around the content adds another opportunity for benefits. Reduced server storage was another benefit. As learning groups start taking responsibility for performance support and other areas, these opportunities will be important.

And, the initial investment to start focusing on content more technically is a step along the path to start moving from web 2.0 to web 3.0; custom content generation for the learner or performer. A further step is context-sensitive customization. This is really only possible in a scalable way if you get your arms around paying tighter attention to defining content: tagging, mapping, and more.
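Context-sensitive customization depends on exactly that tagging. A minimal sketch, assuming each content chunk carries a set of context tags: a chunk is included when all of its tags are satisfied by the current context, and untagged chunks apply everywhere. The chunks and tags are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Chunk:
    text: str
    tags: FrozenSet[str] = frozenset()  # untagged chunks apply in every context


def assemble(chunks: List[Chunk], context: Set[str]) -> List[str]:
    """Keep, in authored order, every chunk whose tags are all present in the context."""
    return [c.text for c in chunks if c.tags <= context]


chunks = [
    Chunk("What the procedure is for"),
    Chunk("Step-by-step field checklist", frozenset({"mobile", "technician"})),
    Chunk("Approval and reporting view", frozenset({"manager"})),
]
print(assemble(chunks, {"mobile", "technician"}))
# ['What the procedure is for', 'Step-by-step field checklist']
```

Without consistent tags, this kind of assembly has to be done by hand for every audience, which is why it only scales once the content is defined carefully.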

It may seem scary, but the first steps aren’t that difficult, and it’s an investment in efficiencies initially, and into a whole new realm of capability going forward. It may not be for you tomorrow, but you have to have it on your radar.

Book Review Pointer

In case you didn’t see it, eLearn Mag has posted my book review of Mark Warschauer’s insightful book, Learning in the Cloud. To quote myself:

This is … a well-presented, concise, and documented presentation of just what is needed to make a working classroom, and how technology helps.

As one more teaser, let me provide the closing paragraph:

The ultimate message, however, is that this book is important, even crucial reading. This is a book that every player with a stake in the game needs to read: teachers, administrators, parents, and politicians. And not to put too delicate a point on it, this is what I think should be our next “man in the moon” project; implementing these ideas comprehensively, as a nation. He’s given us the vision, now it is up to us to execute.

I most strongly urge you, if you care about schooling, to read the book, and then promote the message.