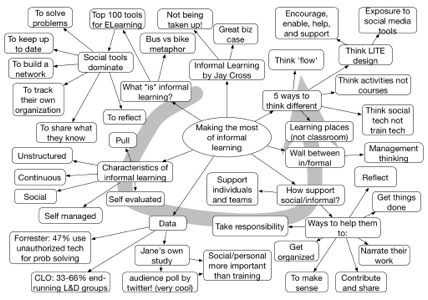

Jane Hart, in her personable style, told a compelling story of the what, why, and how of informal learning. She suggested it was about self-directed learning, that it’s already happening, but that there are valuable ways the L&D group can assist and support.

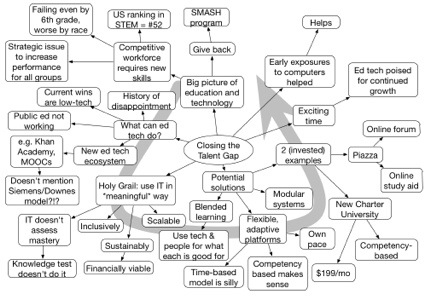

Mitch Kapor #iel12 Keynote Mindmap

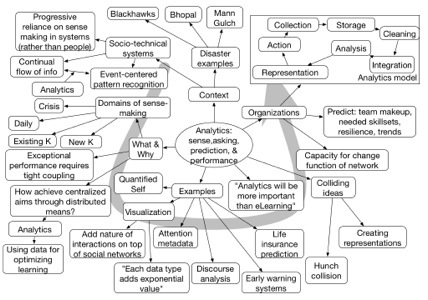

George Siemens #iel12 Keynote Mindmap

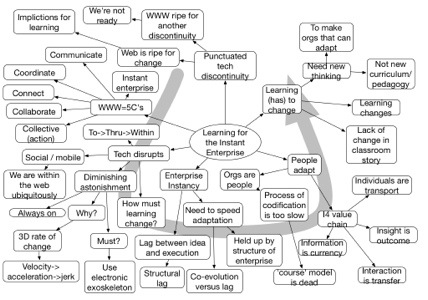

Tony O’Driscoll #iel12 Keynote Mindmap

Tony O’Driscoll kicked off the Innovations in eLearning Symposium with an entertaining and apt tour of the changes in business owing to information change, and the need to adapt. My take was that organizations have to develop a more organic relationship with their ecosystem by empowering their people to engage and act. His final message was that the learning community are the folks who have to figure this out and engage.

Taking the step

A while ago, I wrote an article in eLearnMag arguing that better design doesn’t take longer. In it, I suggested that while there would be an initial hiccup, the analysis process is different but no less involved, the design process is deeper but results in less overall writing, and of course the development is largely the same. And I’m interested in explaining what I mean by the hiccup.

What surprises me is that I haven’t seen more movement. Of course, if you’re a one-person shop, the best you could probably do is attend a ‘Deeper ID’ workshop. But if you’re producing content on a reasonable scale, you should realize that there are several reasons to take this on.

Most importantly, it’s for effectiveness. The learning I see coming out of not only training shops and custom content houses, but also internal units, is just not going to make a difference. If you’re providing knowledge and a knowledge test, I don’t care how well produced it is, it’s not going to make a difference. This is core to a unit’s mission, it seems to me.

It’s also a case of “not if, but when” someone is going to come in with an effective competing approach. If you can’t do better, you’re going to be irrelevant. If you’re producing for others, your market will be eaten. If you’re producing internally, your job will be outsourced.

Overall, it’s about not just surviving, but thriving.

Yes, the nuances are subtle, and it’s still possible to sell well-produced but not well-designed material, but that can’t last. People are beginning to wake up to the business importance of effective investments in learning, and the emergence of alternate models (Khan Academy, MOOCs, the list goes on) is showing new ways that will have people debating approaches. It may take a while, but why not get the jump on it?

And it’s not about just running a workshop. I do those, and like to do them, but I never pretend that they’re going to make as big a difference as could be achieved. They can’t, because of the forgetting curve. What would make a big difference isn’t much more, however. It’s about reactivating that knowledge and reapplying it.

What I envision (and excuse me if I make this personal, but hey, it’s what I do and have done successfully) is getting to know the design processes beforehand, and customizing the workshop to your workflow: your business, your processes of working with SMEs, your design process, your tools, and representative samples of existing work. Then we run a workshop where we use your examples: working through the process, exploring the deeper concepts, putting them into practice, and reflecting to cement the learning. Probably a day. People have found this valuable in and of itself.

However, I want to take it just a step further. I’ve found that being sent samples of subsequent work and commenting on it in several joint sessions is what makes the real difference. This reactivates the knowledge, identifies the ongoing mistakes, and gives a chance to remediate them. This is what makes it stick, and leads to meaningful change. You have to manage this in a non-threatening way, but that’s doable.

There are more intrusive, higher-overhead ways, but I’m trying to strike a balance between high value and minimal intensiveness to make a pragmatic but successful change. I’d bet that 90% of the learning being developed could be improved by this approach (which means that 90% of the learning being developed really isn’t a worthwhile investment!). It seems so obvious, but I’m not seeing the interest in change. So, what am I missing?

Getting Pragmatic About Informal

In my post on reconciling formal and informal, I suggested that there are practical things L&D groups can do about informal learning. I’ve detected a fair bit of concern amongst L&D folks that this threatens their jobs, and I think that’s misplaced. Consequently, I want to get a wee bit more specific than what I said then:

- they can make courses about how to use social media better (not everyone knows how to communicate and collaborate well)

- share best practices

- work social media into formal learning to make it easier to facilitate the segue into the workplace

- provide performance support for social media

- facilitate the use of social media

- unearth good practices in the organization and share them

- foster discussion

I also noted “And, yes, L&D interventions there will be formal in the sense that they’re applying rigor, but they’re facilitating emergent behaviors that they don’t own”. And that’s an important point. It’s wrapping support around activities that aren’t content generated by the L&D group. Two things:

- the expertise for much of this doesn’t reside in the L&D group, and it’s time to stop thinking that it all can pass through the L&D group (there’s too much, too fast, and the L&D group has to find ways to get more efficient)

- there is expertise in the L&D group (or should be) that’s more about process than product and can and should be put into practice.

So, the L&D group has to start facilitating the sharing of information between folks. How can people represent and share their understandings in ways the L&D group can facilitate, not own? How about ensuring the availability of tools like blogs, micro-blogs, wikis, discussion forums, media creation and sharing, and profiles, and helping communities learn to use them? Here’s a way that L&D groups can partner with IT and add real value via a synergy that benefits the company.

That latter bit, helping them learn to use the tools, is also important. Not everyone is naturally a good coach or mentor, yet these are valuable roles. It’s not just producing a course about it, but facilitating a community around these roles. There are a lot of myths about what makes brainstorming work, but just putting people in a room isn’t it. If you don’t know, find out and disseminate it! How about even just knowing how to work and play well with others, how to ask for help in ways that will actually get useful responses, supporting needs for blogging, etc.?

There is a whole host of valuable activities that L&D groups can engage in besides developing content, and increasingly the resources are likely to be more valuable addressing the facilitation than the design and development. It’s going to be just too much (by the time it’s codified, it’s irrelevant). Yes, there’ll still be a role for fixed content (e.g. compliance), but hopefully more and more curricula and content will be crowd-sourced, which increases the likelihood of its relevance, timeliness, and accuracy.

Start supporting activity, not controlling it, and you will likely find it liberating, not threatening.

Help? Two questions on mobile for you

While I was writing a chapter on mobile for an elearning book, the editor took my suggestion for structure and then improved upon it. I’d suggested that we have two additional sections: one on hints and tips, and the other on common mistakes. His suggestion was to crowd-source the answers. And I think it’s a good idea, so let me ask for your help, and ask you to respond via comments or to me personally:

- What are the hints and tips you’ve found valuable for mlearning?

- What are the mlearning mistakes you’ve seen or experienced that you’d recommend others avoid?

I welcome seeing what you come up with!

Design Readings

Another book on design crossed my radar when I was at a retreat: in the stack of one of the other guests, alongside Julie Dirksen’s book Design for How People Learn, was Susan Weinschenk’s 100 Things Every Designer Needs to Know About People. This book provides a nice complement to Julie’s, focusing on straight facts about how we process the world.

Dr. Weinschenk’s book systematically goes through categories of important design considerations:

- How People See

- How People Read

- How People Remember

- How People Think

- How People Focus Their Attention

- What Motivates People

- People Are Social Animals

- How People Feel

- People Make Mistakes

- How People Decide

Under each category are important points, described, buttressed by research, and boiled down into useful guidelines. This includes much of the research I talk about when I discuss deeper Instructional Design, and more. While it’s written for UI designers mostly, it’s extremely relevant to learning design as well. And it’s easy reading and reference, illustrated and to-the-point.

There are some really definitive books that people who design for people need to have read or have to hand. This one fits into the latter category, as does Dirksen’s book, while Don Norman’s books, e.g. The Design of Everyday Things, fit into the former. Must knows and must haves.

Flipping assessment

Inspired by Dave Cormier’s learning contract, and previous work on learner-defined syllabi and assessment, I had a thought about learner-created project evaluation rubrics. I’m sure this isn’t new, but I haven’t been tracking this space (so many interests, so little time), so it’s a new thought for me at any rate ;).

It occurred to me that, at least for somewhat advanced learners (middle school and beyond?), I’d like to start having the learners propose evaluation criteria for rubrics. Why? Because, in the course of investigating what should be important, they’re beginning to learn about what is important. Say, for instance, they’re designing a better services model for a not-for-profit (one of the really interesting ways to make problems interesting is to make them real, e.g. service learning). They should create the criteria for success of the project, and consequently the criteria for the evaluation of the project. I wouldn’t assume that they’d get it right initially, and I’d provide scaffolding, but eventually more and more responsibility devolves to the learner.

This is part of good design; you should be developing your assessment criteria as part of the analysis phase, i.e. before you start specifying a solution. This helps learners get a better grasp on the design process as well as the learning process, and helps them internalize the need to have quality criteria in mind. We’ve got to get away from a vision where the answers are ‘out there’, because increasingly they’re not.

This also ties into the activity model I’ve been talking about, in that the rationale for the assessment is discussed explicitly, making the process of learning and thinking transparent and ‘out loud’. This develops both domain skills and meta-learning skills.

It is also another ‘flip’ of the classroom to accompany the other ways we’re rethinking education. Viva La Revolucion!

Making rationale explicit

In discussing the activity-based learning model the other day, I realized that there had to be another layer to it. Just as the learner should reflect on the product they produce as the outcome of an activity, there’s another way in which reflection should come into play.

What I mean here is that there should be a reflection layer on top of the curricula and the content as well, this time by the instructor and administration. In fact, there may need to be several layers.

For one, the choice of activities should be made explicit in terms of why they’re chosen and how they instantiate the curricula goals. This includes the choice of products and guidance for reflection activities. This is for a wide audience, including fellow teachers, administrators, parents, and legislators. Whoever is creating the series of activities should be providing a design rationale for their choice of activities.

Second, the choice of content materials associated with the activities should have a rationale. Again, for fellow teachers, administrators, parents, and legislators. Again, a design rationale makes a plausible framework for dialog and improvement.

In both cases, however, they’re also for the learners. As I subsequently indicated, I expect learners to gradually take responsibility for setting their own activities, as part of the process of becoming self-learners. Similarly, the choice of products, content materials, and reflections will become the learners’ responsibility, to improve their meta-learning skills.

All together, this is creating a system that is focused on developing meaningful content and meta-learning skills that develop learners into productive members of the society we’re transitioning into.

(And as a meta-note, I can’t figure out how to graft this onto the original diagram, without over-crowding the diagram, moving somehow to 3D, or animating the elements, or… Help!)