I was asked, in regard to the Serious eLearning Manifesto, how people could begin to realize the potential of eLearning. I riffed on this once before, but I want to spin it a different way. The key is creating meaningful practice, and there are three components: align it, deepen it, and space it.

First, align it. What do I mean here? I mean make sure that your learning objective, what learners are learning, is aligned to a real change in the business: something you know will have an impact on a measurable business outcome if they improve. Underneath, this means two things. First, it has to be something that, if people do it differently and better, will solve a problem the organization is facing. Second, it has to be something that actually benefits from learning. If it isn't a case of a cognitive skill shift, the solution should be about using a tool, or the course should be replaced by a tool. Only use a course when a course makes sense, and make sure that course addresses a real need.

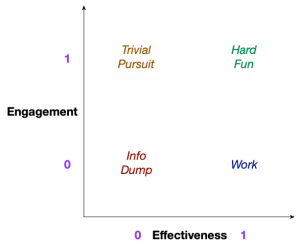

Second, deepen it. Abstract practice and knowledge tests are both less effective than practice that puts learners in a context like the one they'll face in the workplace and has them make the same decisions they'll need to make after the learning experience. Contextualize it, and exaggerate the context (in appropriate ways) to raise the level of interest and importance closer to the level of engagement that live performance will involve. Make sure the challenge is sufficient, too, by offering alternatives that are seductive unless you really understand. Reliable misconceptions make great distractors, by the way. And provide enough practice to take learners from their beginning ability to the final ability they need, continuing until they can't get it wrong (not just until they get it right; that's amateur hour).

Here's where the third component, space it, comes in. Will Thalheimer has written a superb document (PDF) explaining the need for spacing. You can spread both the increasing complexity and the sufficient practice over time, because we need to practice, rest (read: sleep), and then practice some more. Meaningful learning really can't be done in one go; it has to be spread out. How much? As Will explains, that depends on how complex the task is, how often it will be performed, and the gaps in between, but it's a fair bit. That's why I say learning should be expensive.

After these three steps, you'll want to include only the resources that will lead to success, provide models and examples that support success, and so on, but I believe that, regardless, good practice is likely to do more for learners than any other action you can take. So start with good practice, please!