I was thinking about how to create meaningful practice, and a thought came up that ties to some previous work I may not have shared here. So allow me to do that now.

Ideally, our practice has us performing in ways that resemble how we perform in the real world. While we can offer a set of alternatives that represent different decisions, sometimes there are nuances that require us to respond in richer ways. I’m talking about things like writing up an RFP, drafting a response letter, creating a presentation, or responding to a live query. These are valuable forms of practice, but they’re hard to evaluate.

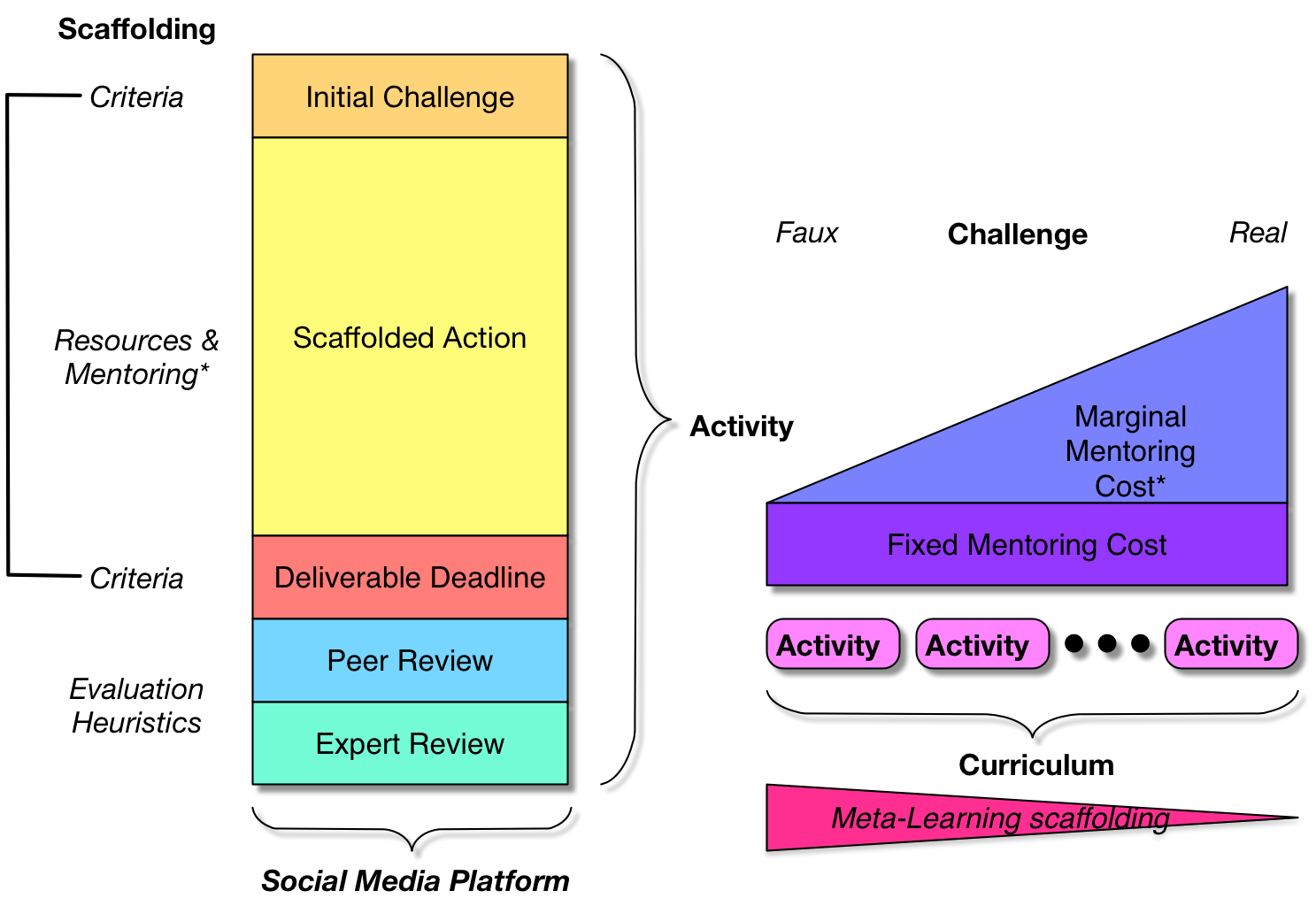

The problem is that evaluating freeform text with technology is difficult, let alone anything more complex. While tools like latent semantic analysis can be built to evaluate text, they’re complex to develop and won’t work on spoken responses, let alone spreadsheets or slide decks (common forms of business communication). Ideally, people would evaluate these responses, but relying on mentors doesn’t scale, and even peer review can be challenging in asynchronous learning.

An alternative is to have learners evaluate themselves. We did this in a course on speaking, where learners ultimately dialed into an answering machine, listened to a question, and then spoke their responses. They could then listen to a model response as well as their own. Further, we could provide an evaluation rubric to guide learners in evaluating their response with respect to the model response (e.g., “did you remember to include a statement and examples?”).

This would work with more complex items, too. “Here’s a model spreadsheet (or slide deck, or document); how does it compare to yours?” This is very similar to the kind of social processing you’d get in a group, where you see how someone else responded to the assignment and then evaluate your own response against it.

This isn’t something you’d likely do straight off; you’d probably scaffold the learning with simpler tasks first. For instance, in the example I’m talking about, we first had learners recognize well- and poorly-structured responses, then assemble them from components, and then write them out in text before having them call into the answering machine. Even then, they first responded to questions they knew in advance before moving on to tasks where the questions were unknown. But this approach serves as enriching practice on the way to live performance.

There is another benefit beyond letting learners practice in richer ways and still get feedback. By using an evaluation rubric to assess both their own response and the model response, learners internalize the criteria and the process of evaluation, becoming self-evaluators and, consequently, self-improving learners. As they go forward, that rubric can continue to guide them when they move out into real performance situations.

There are times when this may be problematic, but increasingly we can and should mix media and use technology to help us close the gap between the learning practice and the performance context. We can prompt learners, record their answers, and then play back both their answer and the model response alongside an evaluation guide. Or we can give them a document template and criteria, take their response, and ask them to evaluate it alongside another response, again using a rubric. This is richer practice, and it shifts more of the learning burden to learners, helping them become self-learners. I reckon it’s a good thing. I suggest you consider this as another tool in your repertoire of ways to create meaningful practice. What do you think?