In addition to my keynote and session at last week’s Immersive Learning University event, I was on a panel with Eric Bernstein, Andy Peterson, & Will Thalheimer. As we riffed about Immersive Learning, I chimed in with my usual claim about the value of exaggeration, and Will challenged me, which led to an interesting discussion and (in my mind) this resolution.

So, I talk about exaggeration as a great tool in learning design. That is, we too often are reined in to the mundane, and I think whether it’s taking it a little bit more extreme or jumping off into a fantasy setting (which are similar, really), we bring the learning experience closer to the emotion of the performance environment (when it matters).

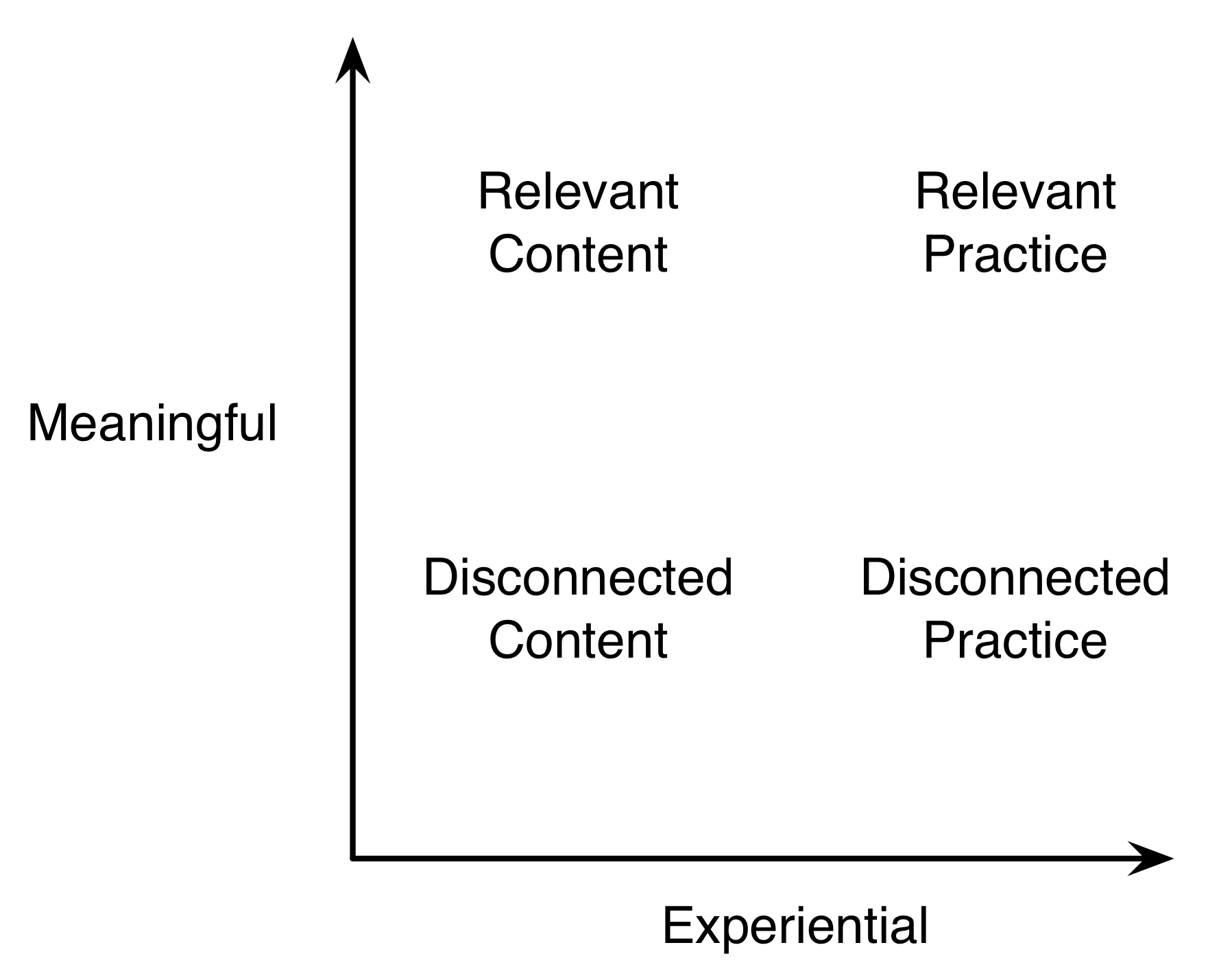

Will challenged me about the need for transfer, and that the closer the learning experience is to the performance environment, the better the transfer, which has been demonstrated empirically. Eric (if memory serves) also raised the issue of alignment to the learning goals, and that you can’t overproduce to the point where you lose sight of the original cognitive skills (we also talked about when such experiences matter, and I believe it’s when you need to develop cognitive skills).

And they’re both right, although I subsequently pointed out that when the transfer goal is farther, e.g. when the specific context can vary substantially, exaggeration of the situation may facilitate transfer. Ideally, you would have practice across contexts spanning the application space, but that might not be feasible if we’re high up on the line going from training to education.

And of course, keeping the key decisions at the forefront is critical. The story setting can be altered around those decisions, but the key triggers for making those decisions and the consequences must map to reality, and the exaggeration has to be constrained to elements that aren’t core to the learning. Which should be minimized.

Which gets back to my point about the emotional side. We want to create a plausible setting, but one that’s also motivating. That happens by embedding the decisions in a setting that’s somewhat ‘larger than life’, where we’re emotionally engaged in ways consonant with how we will be engaged when we’re performing.

Knowing what rules to break, and when, here comes down to knowing what is key to the learning and what is key to the engagement, and where they differ. Make sense?