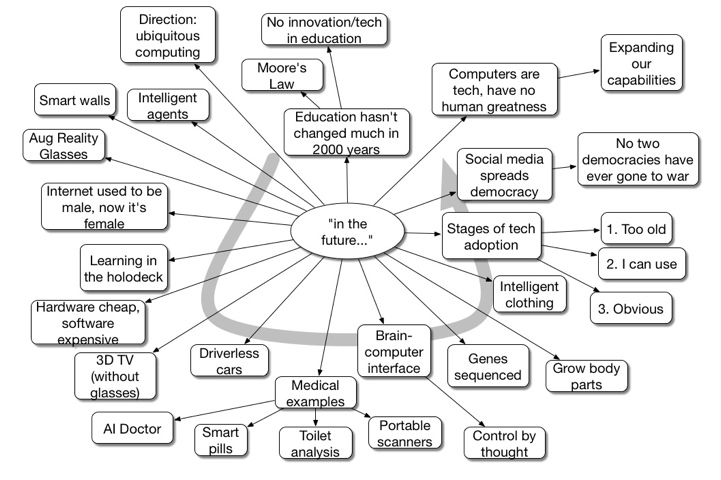

Michio Kaku delivered the DevLearn keynote today. With his signature phrase, “in the future…”, he covered new technologies and made tentative connections to learning.


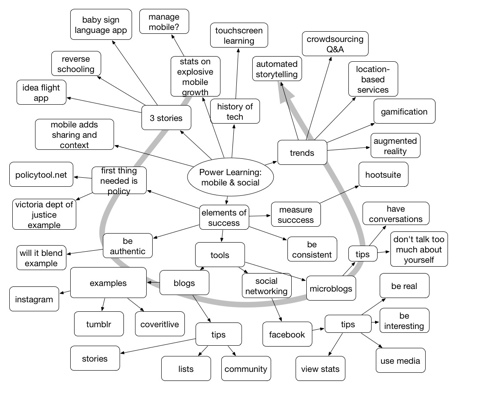

Amber MacArthur #mLearnCon Keynote Mindmap

This morning’s mLearnCon keynote was by journalist Amber MacArthur. She talked about the intersection of mobile and social, though mostly about the social side. Definitely a fun presentation with lots of humorous examples.

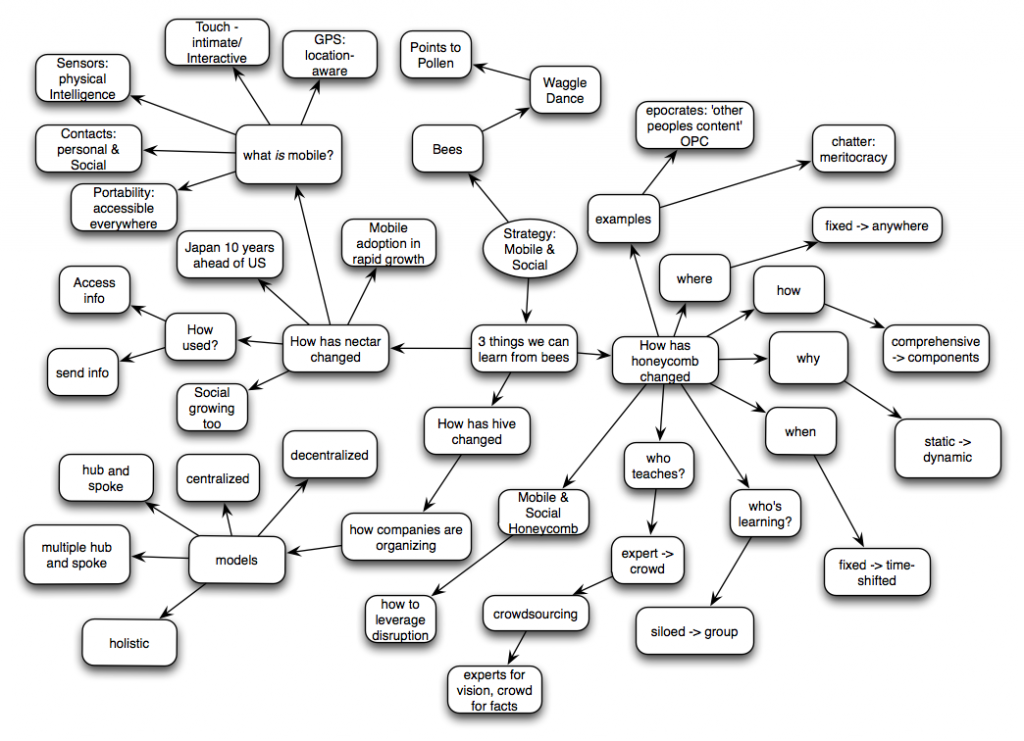

Jeremiah Owyang mLearnCon keynote mindmap

Jeremiah Owyang, analyst at Altimeter, keynoted the opening day of the eLearning Guild’s mLearnCon conference. He talked about the intersection of mobile and social, covering mobile definitions, organizational structures, and core transitions, using a metaphor of bees.

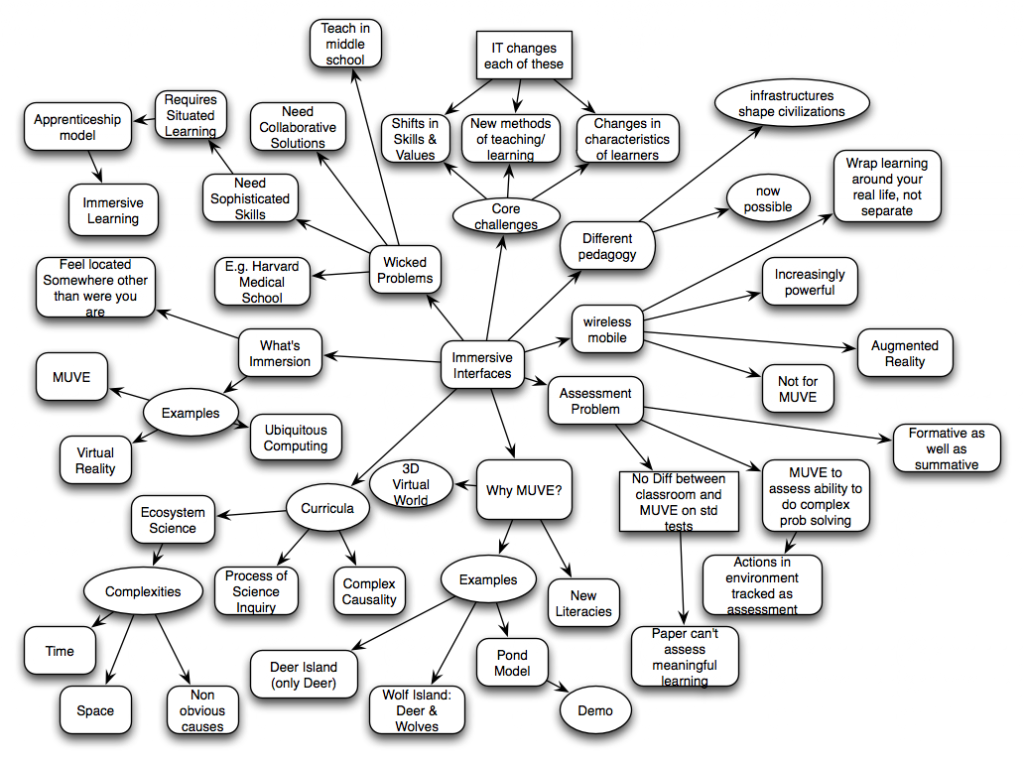

Chris Dede Keynote Mindmap

Chris Dede opened the Innovations in eLearning conference with a keynote that very much resonated with me: it reflected things I’ve been blogging about here since Learnlets started, but that he has had the opportunity to build. His closing comment is intriguing: “infrastructures shape civilization”.

He talked about teaching skills for dealing with wicked problems and developing new literacies, using Multi-User Virtual Environments.

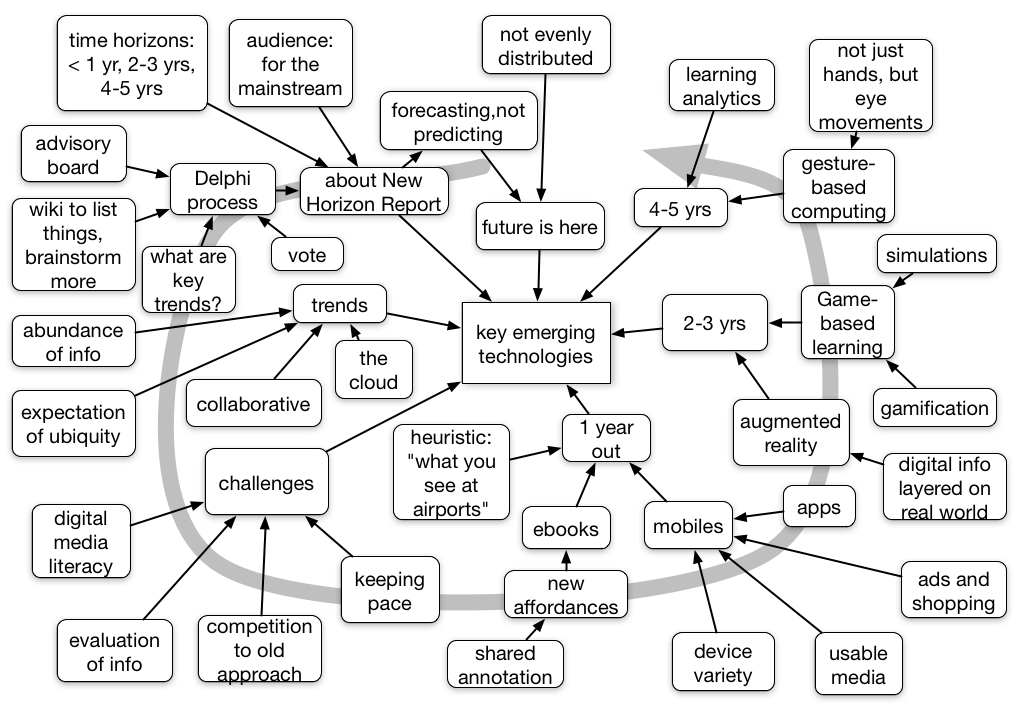

New Horizon Report: Alan Levine – Mindmap

This evening I had the delight of hearing Alan Levine present the New Media Consortium’s New Horizon Report for 2011 to the ASTD Mt. Diablo chapter. As often happens, I mindmapped it. Their process is interesting: they use a Delphi approach to converge on the top topics.

For the near term (< 1 year), he identified the two major technologies as ebooks and mobile devices (with a shout-out to my book: very kind). For the medium term (2-3 years), he pointed to augmented reality and game-based learning (though only barely touching on deeply immersive simulations, which surprised me). For the longer term (4-5 years), the two concepts were gesture-based computing and learning analytics.

A very engaging presentation.

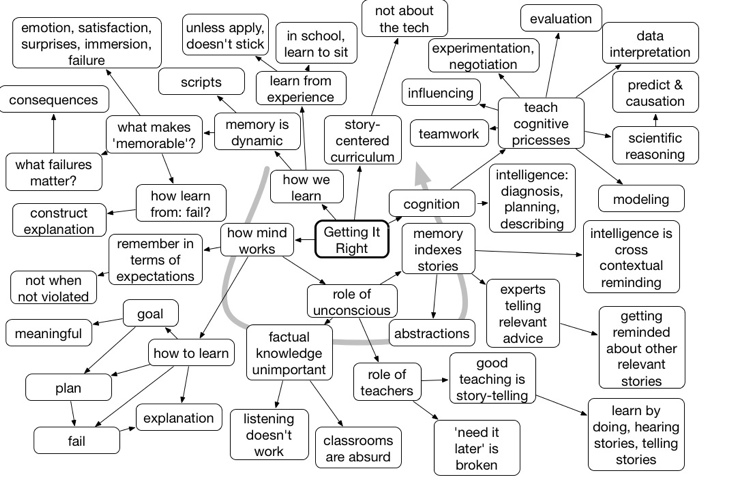

Roger Schank keynote mindmap

Today, Roger Schank keynoted the Learning Technologies UK conference, talking about cognitive science and learning. Obviously, I was largely in agreement. And, as usual, I mindmapped it.

Alan Kay keynote mindmap from #iel2010

Who are mindmaps for?

In response to my recent mindmap of Andrew McAfee’s conference keynote (one of a number of mindmaps I’ve done), I got this comment:

Does the diagram work as a useful way of encapsulating the talk for someone who was there? Because, speaking as someone who wasn’t, I find it almost entirely content-free. Just kind of a collection of buzz-phrases in thought bubbles, more or less randomly connected.

I’m not trying to criticise his talk – which obviously I didn’t hear – or his points – which I still have no idea about – but the diagram as a method of conveying information is a total failure to this sample size of one. Possibly more useful as a refresher mechanism for people who got the talk in its original form?

Do mindmaps work for readers? Well, I have to admit one reason I mindmap is completely personal: I do it to help me process the presentation. Depending on the speaker, I can thoughtfully reprocess the information, or sometimes just take down interesting comments, but there are two benefits. In figuring out the ways to link ideas, I’m capturing the conceptual structure of the talk (really, they’re concept maps), and I’m also occupying enough of my personal bandwidth that I can focus on the talk without my mind chasing down one path and missing something. Er, mostly…

Then there’s a second category: those who actually heard the talk, for whom the mindmaps might be worthwhile for reflection and re-processing. I’d welcome anyone weighing in on that; I don’t have access to someone else’s example to see whether it would work for me.

Then there are the potential viewers, like the commenter, who didn’t hear the talk and for whom it may or may not be possible to extract any coherent idea from the mindmap. I looked back at the diagram for McAfee’s keynote, and I can see that I was cryptic, missing some keywords needed to communicate. This was for two reasons. One, he was quick, and it was hard to get points down before he’d moved on. Two, he was eloquent, and because he was quick I couldn’t find time to paraphrase. And there’s a more pragmatic reason: I try to constrain the size of the mindmap, and I’m always rearranging to get it to fit on one page. That effort may keep me more terse than is optimal for someone processing it without other support.

I will take issue with “more or less randomly connected”, however. The connections are quite specific. In all the talks I’ve done this for, there have been several core points, elaborated in different ways from talk to talk, and each talk tends to be built from a repeated structure. The connections capture that structure. For instance, McAfee repeatedly took a theme, used an example to highlight it, then derived a take-home point and some corollaries. There are ways to convey that structure more clearly (e.g. labeled links, color coding), but the structure isn’t always laid out beforehand (it’s emergent), and the talk moves fast enough that I couldn’t do it on the fly.

I could post-process more, but in the two most recent cases I wanted to get the mindmap up quickly: when I tweeted that I was making it, others said they were eager to see it, so I stayed on for some minutes after the keynotes to post it. McAfee himself tweeted “dang, that was FAST – nice work!”

I also put an arrow in the background to show the order in which the discussion unfolded, but apparently it is too telegraphic for the non-attendee. It happens I know the commenter well, and he’s a very smart guy, so if he’s having trouble, that’s definitely an argument that the raw mindmap alone is not communicative, at least not without some post-processing to make the points clear.

Really valuable to get the feedback, and worthwhile to reflect on what the tradeoffs are and who benefits. It may be that these are only valuable for fellow attendees. Or just me. I may have to consider a) not posting, b) slowing down and doing more post-processing, or…? Comments welcome!

Zimmerman Keynote Mindmap DevLearn 09

Eric Zimmerman spoke eloquently on games as the second-day keynote at DevLearn. He talked about why systems thinking is important, and how games are systems of rules and consequently develop systems thinking. He talked about how our play brings meaning to the rules, and how creating spaces of possible outcomes allows us to explore.

He ended by advocating that we design for the possibility of unexpected outcomes to create meaning for our learners. Cammy Bean has blogged the presentation too.

How to visualize generative?

I’ve been thinking a fair bit about generative learning of late, not least because it’s one of the things I’ll be talking about at our upcoming LDA Learning Science Conference (November for the background videos and discussion, early December for the week of live sessions with presenters). It’s a refresh of the successful event we held last year, keeping what worked and adding what’s new! And I’m still wrestling with the topic, particularly how to represent it!

First, generative learning is the complement to retrieval practice. Research by luminaries such as Robert Bjork, Henry Roediger, Ericsson, and others has told us of the value of retrieval practice for memory, which is all about strengthening our ability to get information out of long-term memory to solve problems. However, we first need to get our models and examples into long-term memory. I’ve previously termed this elaboration, but generative activities may be a more proactive way of thinking about it.

It’s really about connecting new information to old. We know that’s valuable! And we can present those connections, but generative activities have learners actively processing the information: what Craik & Lockhart talked about as elaborative processing. We can connect it to personal experience, such as asking what this explains that we had previously observed. Or we can (and must) connect it to previous information, so we can integrate it more tightly into our understanding. So we can diagram it via a mindmap or otherwise, draw it, restate it in different terms, etc. I suggest that many of Thiagi and Matt Richter’s activities do just this!

Then, as you probably know, I’m fond of diagrams. So I want to find a way to represent the value of generative learning that we can remember and apply. What I’m struggling with is representing the process of elaboration. It could be a sequence of networks, but I’ve been somewhat loath to do that, simply because it seems like too much trouble! Which, of course, isn’t a good reason. Another reason (as he reaches for justification) is that networks are a level of abstraction away, and not really cognitive. So maybe I need to show concept relationships being formed?

As you can tell, I’m not really there yet. But, in the spirit of ‘learning out loud’, I thought I’d share where I am, not least to prompt some responses with ideas! So, what ideas or pointers can you share?