A recurring theme across the things I’ve been looking at lately is experience. Too often we confound age with experience. And, of course, sometimes it’s experience that we should be talking about. So, a brief rant on age or experience.

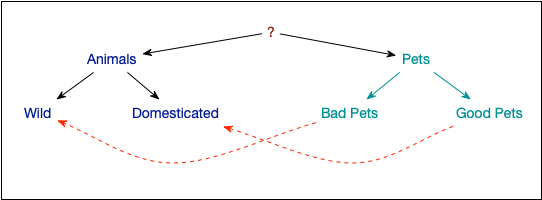

First, I’ll bring up the ‘generations’ myth. It’s appealing, as our brains like buckets for things. We’re kinda wired that way. The only problem is that generations as a concept has been looked at and debunked. Heck, in Ancient Greek days they were complaining that ‘kids just have no respect’! And if you think about it, thinking that someone in Los Angeles, CA of a certain age has more in common with someone in Nepal of the same age than with another Angeleno of a different age is kinda ridiculous.

And, those ‘defining’ events? They affect every conscious person! And it’s so context-dependent. A local event may not mean much to you, unless it affects you somehow, and then you share more with everyone else so affected. There’s actually a simpler explanation. Say, for instance, that “young folks want classes while old folks don’t”. That’s explainable by stage of life: when you’re young you need credentials, but later on you can point to your experience.

People share values, are motivated by the same underlying factors (differently across culture and personality), and more. Just look at the research on self-determination theory! Attributing differences to age rather than explaining them by experience is a mistake. So, for instance, my kids, who arguably fit the label ‘digital natives’, still come to me (decreasingly, I’ll admit) for tech problems.

Then, there are many things that change as you develop in a domain. For instance, in our Learning Science Conference, my colleague Matt Richter was talking about feedback, and very clearly pointed out how what counts as useful feedback changes as you gain experience. This holds true for examples, too: the type of example that’s useful changes. Also for practice: with more experience, you need more challenge.

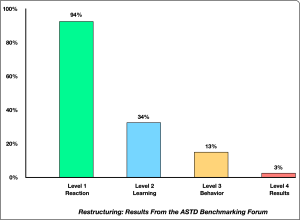

Which is where we go wrong. We do the ‘one size fits all’, not recognizing that things need to change. To be fair, we also do the wrong practice (knowledge test rather than application to problems), give the wrong feedback to begin with (right/wrong), and the list goes on. But even when we’re trying to do it right, we forget things like adapting for initial and developing experience. Yet it’s a factor in, for instance, how much practice you need, how much spacing, etc.

This problem extends more broadly. We hear it in hiring (age discrimination). Of course, that’s only one problem. Discrimination by gender, race, physical and neurological differences, and more is also present. Sadly. Okay, soapbox: DEI, done right, leads to better outcomes! Actually, that’s got an evidence base, so probably more than soapbox. Still. So, consider experience as one of the factors distinguishing individuals. Folks can’t control their age, but they can determine their experience. So use it!