Today I read that Anthropic has released Claude for Education (thanks, David ;). It triggered some thinking, so I thought I’d share. I haven’t fully worked out my thoughts, so this is preliminary. Still, here are some reflections on intelligent tutoring via models.

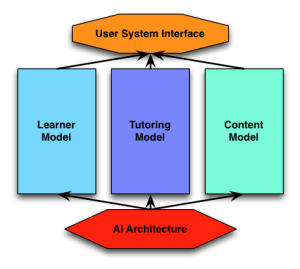

So, as I’ve mentioned, I’ve been an AI groupie. That includes tracking the AI and education field, since that’s the natural intersection of my interests. Way back when, Stellan Ohlsson abstracted the core elements of an intelligent tutoring system (ITS), which include a student (learner) model, a domain (expert on the content) model, and an instruction (tutoring) model. So, a student working on a problem takes an action, and then we see what an expert in the domain would do. From that basis, the pedagogy determines what to do next. Such systems have been built, work in research, and have even been successfully employed in the real world (see Carnegie Learning).
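To make the three-model loop concrete, here's a minimal sketch. Everything in it is my own illustrative assumption, not how any real ITS does it: the class names, the toy addition domain, the 0.3 update rate, and the 0.8 mastery threshold are all made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class LearnerModel:
    """Keeps a rough mastery estimate per skill, updated from learner actions."""
    mastery: dict = field(default_factory=dict)

    def update(self, skill: str, correct: bool, rate: float = 0.3) -> None:
        # Nudge the estimate toward 1.0 on a correct action, toward 0.0 otherwise.
        prior = self.mastery.get(skill, 0.5)  # 0.5 = "no evidence yet" (assumption)
        target = 1.0 if correct else 0.0
        self.mastery[skill] = prior + rate * (target - prior)


class DomainModel:
    """Stands in for the expert: knows what a correct answer looks like."""

    def expert_answer(self, problem: tuple) -> int:
        a, b = problem  # toy domain: adding two integers
        return a + b


class TutoringModel:
    """The pedagogy: decides what happens next."""

    def next_step(self, learner: LearnerModel, skill: str, correct: bool) -> str:
        if not correct:
            return "hint"
        if learner.mastery.get(skill, 0.5) >= 0.8:  # threshold is an assumption
            return "advance"
        return "practice"


def tutor_turn(problem, learner_answer, skill, learner, domain, tutor) -> str:
    """One pass of the loop: learner acts, expert model judges, pedagogy decides."""
    correct = learner_answer == domain.expert_answer(problem)
    learner.update(skill, correct)
    return tutor.next_step(learner, skill, correct)
```

A real domain model is, of course, where the hard work lives; the one-line `expert_answer` here hides exactly the part that made these systems expensive to build.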

Now, I’ve largely been pessimistic about the generative AI field, for several reasons. These include that it’s:

- evolutionary, not revolutionary (more and more powerful processors using slight advances in algorithms yield a quantum bump)

- predicated on theft and damage (IP and environmental issues)

- likely to lead to ill use (laying off folks to reduce costs for shareholder returns)

- based upon biz models boosted by VC funds and as yet still volatile (e.g. don’t pick your long-term partners yet)

Yet, I’ve been upbeat about AI overall, so it’s mostly the hype and the unresolved issues that are bugging me. Seeing the features touted for this new system made me think of a potential way in which we might get the desired outcome. Which is how my thinking (and ours) should evolve.

As background, several decades back I was leading a team developing an adaptive learning system. The problem with ITS is that the content model is hard to build; developers had to capture how experts reasoned in the field, and then model it through symbolic rules. In this instance I had the team focus on the tutoring model instead, and we used a content model based upon learning objects, with the relationships between them capturing the knowledge. Thus, you had to be careful in the content development. (This was an approach we got running. A commercial company subsequently brought it to market successfully a decade after our project. Of course, our project was burned to the ground by greed and ego.)
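As a rough illustration of a content model built from learning objects and their relationships, here's a small sketch. It is not our actual system: I'm assuming the only relationship is "prerequisite", and the names `LearningObject` and `ready_objects` are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LearningObject:
    """A chunk of content plus the objects a learner should finish first."""
    name: str
    prerequisites: tuple = ()  # names of other learning objects (assumed relation)


def ready_objects(objects, completed):
    """Return names of objects not yet completed whose prerequisites are all done."""
    return [
        obj.name
        for obj in objects
        if obj.name not in completed
        and all(pre in completed for pre in obj.prerequisites)
    ]
```

The knowledge sits in the links rather than in expert rules, which is why the care has to go into content development: a wrong or missing link sends the learner somewhere they aren't ready for.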

So, what I realized is that, with the right constraints, you could perhaps build an intelligent tutoring system. First, the learner model might be primed by a pre-test, but is built from learner actions. The content model could come from training on textbooks. You could do either symbolic processing of the prose (a task AI can do), or a machine learning (e.g. LLM) version by training. Then, the tutoring model could be symbolic, capturing the best of our rules, or trained on a (procured, not stolen) database of interventions (something Kaplan was doing, for instance). (In our system, we wrote rules, but had parameters that could be tuned by machine learning over time to get better.)
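The "rules with tunable parameters" idea can be sketched very simply. This is an assumption-laden toy, not what we built: the rule, the log format of (mastery estimate, did the learner succeed after advancing) pairs, and the pick-the-best-candidate tuning are all hypothetical stand-ins for whatever machine learning you'd really use.

```python
def should_advance(mastery: float, threshold: float) -> bool:
    """Hand-written tutoring rule: move on once estimated mastery clears a threshold."""
    return mastery >= threshold


def tune_threshold(log, candidates):
    """Pick the candidate threshold that best matches logged outcomes.

    `log` is a list of (mastery_estimate, succeeded_after_advancing) pairs;
    this format is an assumption made for the sketch.
    """
    def score(threshold: float) -> int:
        # Count cases where the rule's decision agrees with what actually
        # happened: advance those who succeeded, hold back those who did not.
        return sum(should_advance(m, threshold) == ok for m, ok in log)

    return max(candidates, key=score)
```

The rule stays readable and editable by a person, while the number inside it gets better as data accumulates. That split is the point: the pedagogy is explicit, and only the parameters are learned.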

My thought was that, in short, we can start having cross-domain tutoring. We can have a good learning model, and use the auto-categorization of content. Now, this does raise the problem of knowledge versus skills, which I still worry about. (And continue to look at.) Still, it appears that this particular solution is looking at this opportunity. I’ll be keen to see how it goes; maybe we can have real learning support. If we blend this with a coaching engine…maybe the dream I articulated a long time ago might come to fruition.