In thinking through how to design courses that lead to both engaging experiences and meaningful outcomes, I’ve been working on the component activities. As part of that, I’ve been looking at elements such as pedagogy in pre-, in-, and post-class sessions so that there are principled reasons behind the design.

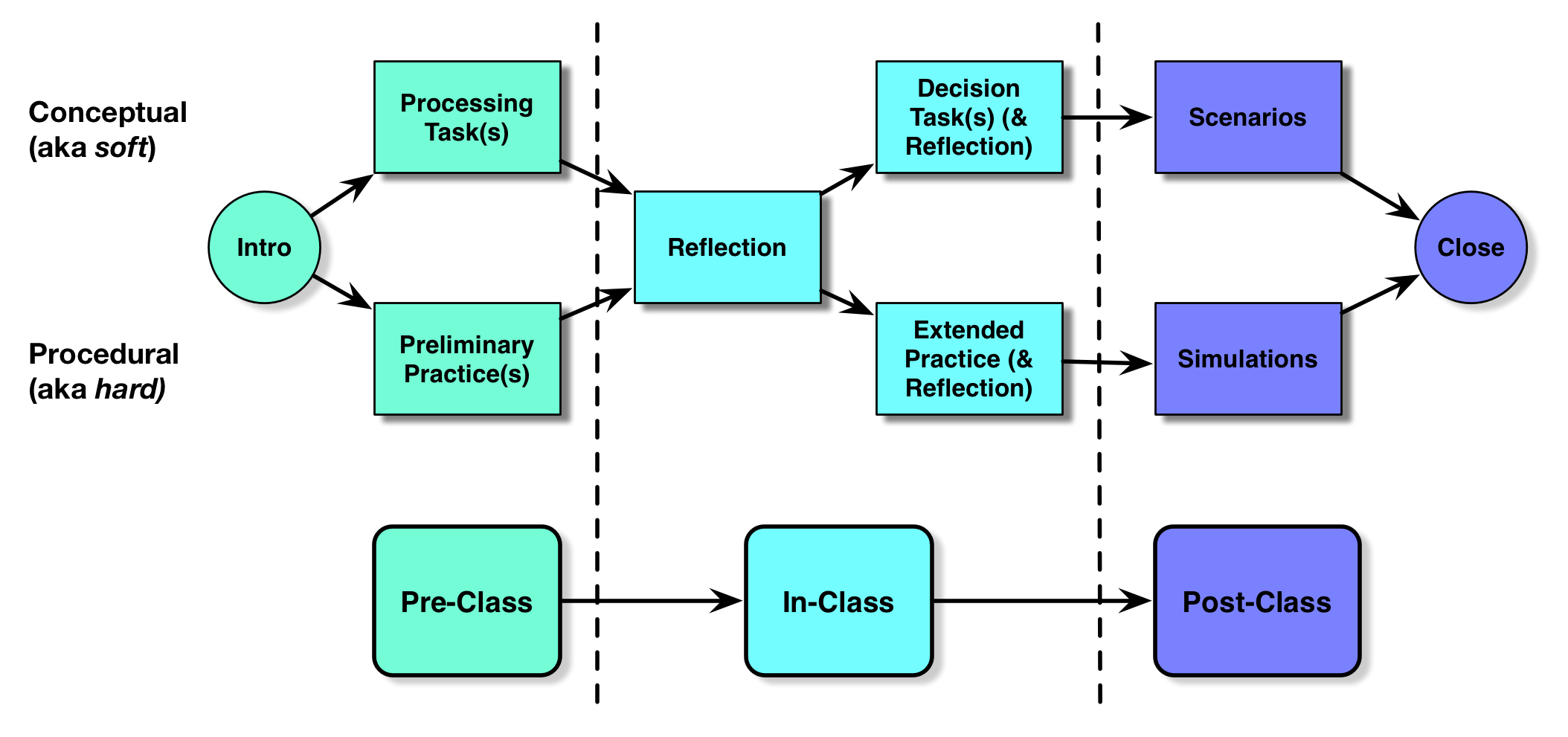

So, here I’m looking for guidance on how to align what happens in all three sections. In this case, two major types of activities have emerged: more procedural activities, such as using equipment appropriately; and more conceptual activities, such as making the right decisions about what to say and do. The two aren’t cleanly separated, but it’s a useful coarse description.

Of course, there’s an introduction that both emotionally and cognitively prepares the learner for the coming learning experience.

So for conceptual tasks, what we’re looking to do is drive learners to the content. In typical approaches, you’d present conceptual information (e.g. ‘click to see more’) and maybe ask quiz questions. Here, I’m looking to make the task one of processing the information to generate something, whether a document, a presentation, or whatever, where the processing is close to the way the information will be used. So they might create a guide for decisions (e.g. a decision tree), a checklist, or something else that requires them to use the information. (And if the information doesn’t support doing, it’s probably not necessary.) As support for this, in a recent conversation I heard that the organizations interviewed said that making better decisions was the key to better job performance.

Whereas in the procedural approach, we really want to give them practice in the task. It may be scaffolded (e.g. simplified), but it’s the same practice that they’ll need to be able to perform after the learning experience. Ideally, they’ll have to explore and use content resources to figure out how to do it appropriately, in a guided-exploration sense, rather than just be given the steps.

In both cases, models are key to helping them determine what needs to happen. Also in both cases, an instructor should be reviewing their output. In the conceptual case, learners might get feedback on their output, and have a chance to revise their creation. In the case of the practice, the experience is likely a simulation, and the learner should be getting feedback about their success. In either case, the instructor has information about how the cohort is doing. So…

…for in-class learning, the learners should be reflecting on their performances, and the instructor should be facilitating that at the beginning, using the information about what’s working (and what’s not). Then there should be additional activities that require the learners to interact with the material, processing it (conceptual) or applying it (procedural) with each other, followed by facilitated reflection.

Finally, after class, the learners should be given elaborative activities. In the case of the conceptual task, coming up with an elaborated version or some additional element that helps cement the learning would be valuable. The practice or activity should be fleshed out to the point where the learner will be capable of acting appropriately after the learning experience, owing to sufficient practice and appropriate decontextualization. The goal is retention over time and transfer to all appropriate situations.

Am I making sense here?

For a 50,000 ft view, and for “formal” learning, it works. I’m not sure that it addresses or provides for elements that are harder to encapsulate within an event, e.g.:

– Pre-requisite selection criteria validation (would be pre-Intro, so maybe that’s not within the scope of what you are trying to define)

– Pre-requisite skill/knowledge testing and remediation (would be pre-Intro, or maybe Intro, so ditto)

– Performance support (really out of scope, probably, but people do learn with the help of PS)

– Spaced practice

– Problem-based learning

I don’t know how this would work if one were trying to apply, for example, Kolb’s experiential learning model. Reflection is identified, but I’m not seeing the cycle here. Maybe this is out of scope as well, or belongs at a lower altitude.

Maybe I’m getting bogged down by the “-class” tags across the bottom. Are you only looking at what could be lumped under the “formal learning” category, or are you looking at a more general view of teaching/learning strategies?

Thoughtful post. It reminds me of what’s happening at @techninjatodd’s middle school in Navasota, Texas.

His was the evidence-based voice that turned me on to the flipped learning experience. In your model, flipping involves the learner doing something prior to the event. This could be watching a video, reading a story or scenario, or meeting with your manager to review what their expectations are for you post-event.

Check out this 2013 blog post of Todd’s for more: http://nesloneyflipped.blogspot.com/search?updated-min=2013-01-01T00:00:00-08:00&updated-max=2014-01-01T00:00:00-08:00&max-results=50

Bill, I’m not a big fan of pretests, unless you use them to the learners’ advantage. And you’re absolutely right, this isn’t about the initial qualification to study (that’s important, but not here). I do have a mantra (at a level of more detail than this) that if learners are supported in the performance environment, they should be supported in the learning environment. And it does support spaced practice; that’s the whole reason to do related practice pre-, in-, and post-class. And it’s very definitely problem-based learning: there are tasks (conceptual processing or practice) that are guided but not totally determined, requiring learners to process information in context. Maybe I didn’t make that clear enough. And Kolb’s model is based upon his learning style model, which doesn’t stand up to psychometric scrutiny (cf. Coffield et al.). There are, however, inherently multiple cycles of activity, content access, feedback, and reflection. Now, this is very clearly contextualized with a classroom portion, and very clearly focused on formal learning, for specific reasons that I perhaps should’ve made clear.

And to the point of spaced practice, Urbie, I do not see the pre-class just being content exposure (e.g. watching a video), but instead practice before class, in class, and post class. It may be variations on a theme (scaffolded from simple to complex), but it’s multiple activities where they actually have to put the knowledge into action. It could be scenarios, or meetings, of course.

Clark, this makes a ton of sense to me (the “class” metaphor may throw some folks, but I can translate it to most contexts in which I would apply the model).

This actually seems very close to what I am currently working on in redesigning new hire orientation. The “pre-class” in my world is more of a “preparation prior to taking up time with a mentor”. And yes, I have actually used the words “we want them to come prepared for class”. The key thing for us was taking a huge load off of managers’ shoulders, better preparing employees for deeper mentoring engagements early in their careers rather than forcing the managers into a position of starting with a blank slate.

I see the question of pre-testing as a pretty unique scenario. We get a ton of new hires with industry experience. Pretests help all parties involved understand where they are, and what remains to be developed. I dislike the word pre-test, since “test” often comes with the connotation of “meeting a bar”; this evaluation is for understanding where to best invest development efforts. We also use pre-testing in our spaced interventions. Thus, we quickly learn which 20% of performance is the problem, and provide support/content/training on that area instead of droning them through 100% of a training intervention and then testing (it also gives us great organization-wide metrics on which skills atrophy and on common pain points; it helps us determine where the puck is headed so we skate to it rather than trying to boil the ocean).