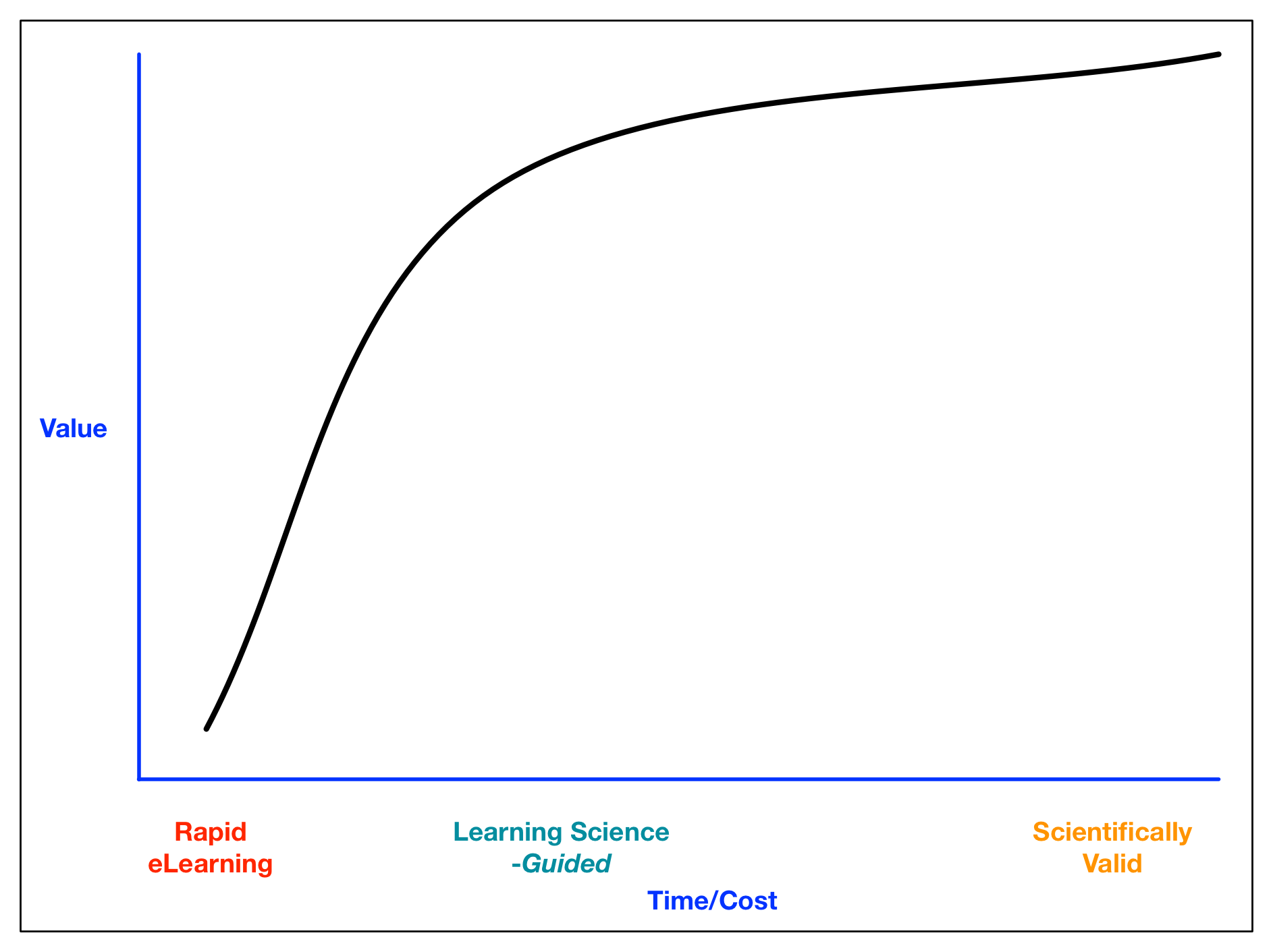

I’ve been on quite the roll of late, calling out some bad practices and calling for learning science. And it occurs to me that there could be some pushback. So let me be clear: I strongly suggest that the types of learning that are needed are, by and large, not info dump and knowledge test. What does that mean? Let’s break it down.

First, let me suggest that what’s going to make a difference to organizations is not better fact-remembering. There are times when fact remembering is needed, such as medical vocabulary (my go-to example). When that needs to happen, tarted-up drill-and-kill (e.g., quiz show templates) is the way to do it. Getting people to remember rote facts or arbitrary things (like part names) is very difficult. And it is largely unnecessary if people can look it up, i.e., if the information is in the world (or can be). There are some things that need to be known cold, e.g., emergency procedures, hence the tremendous emphasis on drills in aviation and the military. Other than that, put it in the world, not the head. Lookup tables, info sheets, and the like are the solution. And I’ll argue that this need arises less than 5-10% of the time.

So what is useful? I’ll argue that what is useful is making better decisions. That is, the ability to explain what’s happened and react, or to predict what will happen and make the right choice as a consequence. This comes from model-based reasoning. What sort of learning helps model-based reasoning? Two types, in a simple framework. Learners need to process the models so that they’re comprehended, and they need to use them in context to make decisions, with the consequences providing feedback. Yes, there likely will be some content presentation, but it’s not everything; instead, it’s the core model plus examples of how it plays out in context. That is, annotated diagrams or narrated animations for the models; comic books, cartoons, or videos for the examples. Media, not bullet points.

The processing that helps make models stick includes having learners generate products: giving them data or outcomes and having them develop explanatory models. They can produce summary charts and tables that serve as decision aids. They can create syntheses and recommendations. This really leads to internalization and ownership, but it can be more time-consuming than is worthwhile. The other approach is to have learners use the models to make predictions and explain outcomes. Worst case, they can answer questions about what the model implies in particular contexts. That is still a knowledge question, but not “is this an X or a Y?”; rather, it’s “you have to achieve Z: would you use approach X or approach Y?”

Most importantly, you need people to use the models to make decisions like the ones they’ll be making in the workplace. That means scenarios and simulations. Yes, a one-question mini-scenario is essentially a multiple-choice question (though better written, with a context and a decision), but in practice decisions tend to be bundled together, so you at least need branching scenarios. A series of these might be enough if the task isn’t too complex, but if it’s somewhat complex, it might be worth creating a model-based simulation and giving the learners lots of goals to pursue with it (read: serious game).

And don’t forget: if it matters (and why are you bothering if it doesn’t?), you need to practice until they can’t get it wrong. And you need to be facilitating reflection. The wrong alternatives should reflect the ways learners often go wrong, and the feedback should address each one individually. “No, that’s not correct, try again” is a really rude way to respond to learner actions. Connect their actions to the model!

What this also implies is that learning should be much more practice than content presentation. Presenting content and drilling knowledge (particularly in the roughly 80/20 ratio I typically see) is essentially a waste of time. Meaningful practice should take up more than half the time. And you should consider putting the practice up front and driving learners to the content, as opposed to presenting the content first. Make the task make the content meaningful.

Yes, I’m making these numbers up, but they’re a framework for thinking. You should be having lots of meaningful practice. There’s essentially no role for bullet points, prose, and simplistic quizzes; very little role for tarted-up quizzes; and a big role for media on the content side and for branching scenarios and model-driven interactions on the interaction side. This is roughly the inverse of the tools and outputs I see. Hence my continuing campaign for better learning. Make sense?