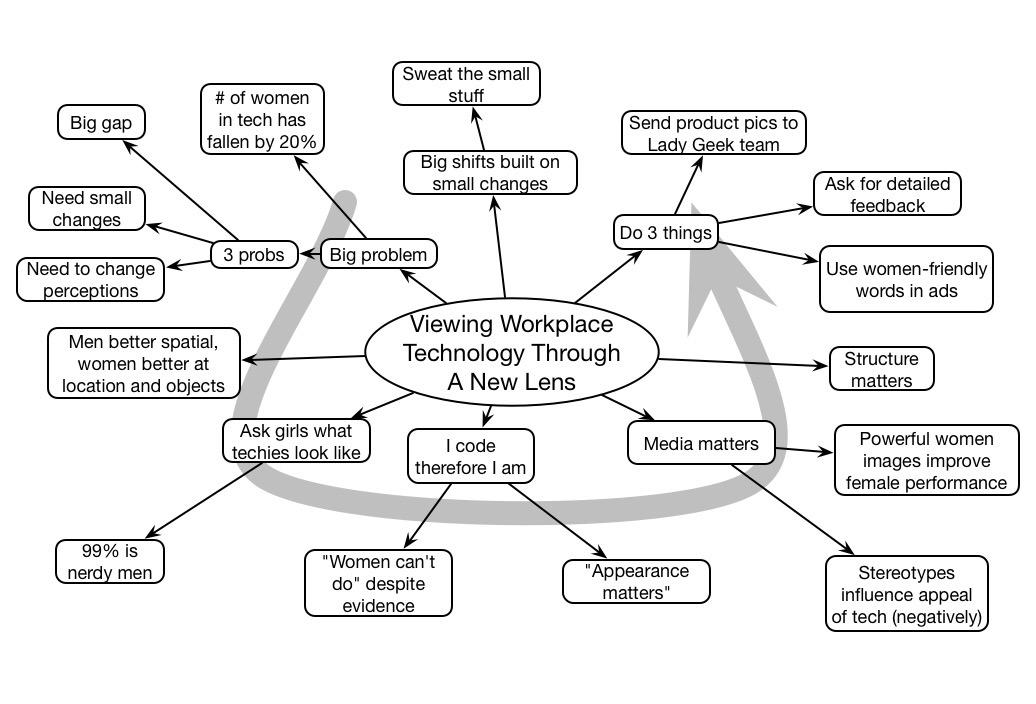

Belinda Parmar addressed the critical question of women in tech in a poignant way, pointing out that the small stuff is important: language, imagery, context. She concluded with small actions including new job description language and better female involvement in product development.

Archives for October 2014

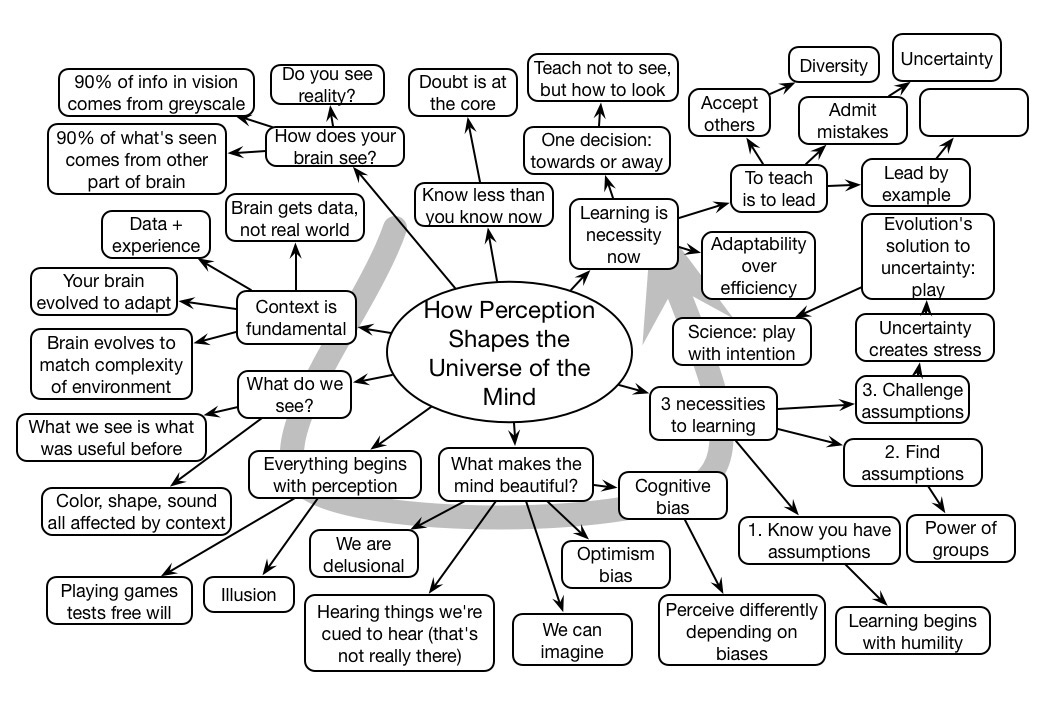

Beau Lotto #DevLearn Keynote Mindmap

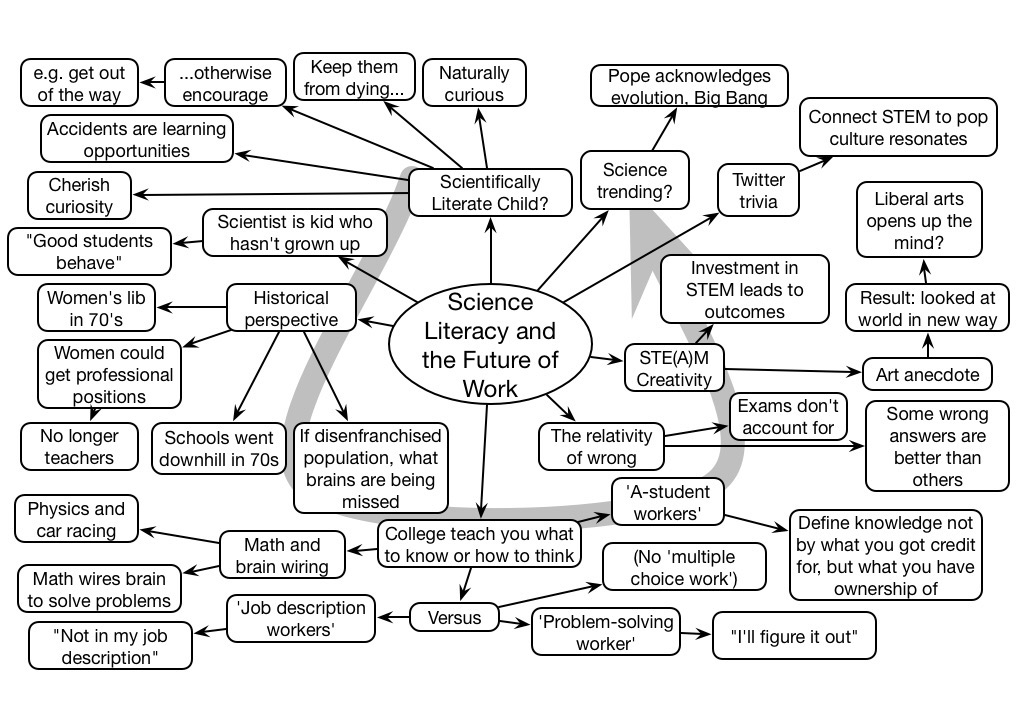

Neil deGrasse Tyson #DevLearn Keynote Mindmap

Cognitive prostheses

While our cognitive architecture has incredible capabilities (how else could we come up with advances such as Mystery Science Theater 3000?), it also has limitations. The same adaptive capabilities that let us cope with information overload in both familiar and new ways also lead to some systematic flaws. And it led me to think about the ways in which we support these limitations, as they have implications for designing solutions for our organizations.

The first limit is at the sensory level. Our mind actually processes pretty much all the visual and auditory sensory data that arrives, but it disappears pretty quickly (within milliseconds) except for what we attend to. Basically, your brain fills in the rest (which leaves open the opportunity to make mistakes). What do we do? We’ve created tools that allow us to capture things accurately: cameras and microphones with audio recording. This allows us to capture the context exactly, not as our memory reconstructs it.

A second limitation is our ‘working’ memory. We can’t hold too much in mind at one time. We ‘chunk’ information together as we learn it, and can then hold more total information at one time. Also, the format of working memory is largely ‘verbal’. Consequently, tools like diagrams, outlines, or mindmaps add structure to our knowledge and support our ability to work on it.

Another limitation of our working memory is that it doesn’t support complex calculations with many intermediate steps. Consequently we need ways to deal with this. External representations (as above), such as recording intermediate steps, work, but we can also build tools that offload that process, such as calculators. Wizards, or interactive dialog tools, are another form of calculator.

Processing information in short term memory can lead to it being retained in long term memory. Here the storage is almost unlimited in time and scope, but it is hard to get information in there, and it isn’t remembered exactly, but instead by meaning. Consequently, models are a better learning strategy than rote learning. But external sources, like the ability to look up or search for information, are far better than trying to get it in the head.

Similarly, external support for when we do have to do things by rote is a good idea. So, support for process is useful and the reason why checklists have been a ubiquitous and useful way to get more accurate execution.

In execution, we have a few flaws too. We’re heavily biased to solve new problems in the ways we’ve solved previous problems (even if that’s not the best approach). We’re also likely to use tools in familiar ways and miss new ways to use tools to solve problems. There are ways to prompt lateral thinking at appropriate times, and we can both make access to such support available, and even trigger it if we have contextual clues.

We’re also biased to prematurely converge on an answer (intuition) rather than seek to challenge our findings. Access to data and support for capturing and invoking alternative ways of thinking are more likely to prevent such mistakes.

Overall, our use of more formal logical thinking fatigues quickly. Scaffolding help like the above decreases the likelihood of a mistake and increases the likelihood of an optimal outcome.

When you look at performance gaps, you should look to such approaches first, and look to putting information in the head last. This more closely aligns our support efforts with how our brains really think, work, and learn. This isn’t a complete list, I’m sure, but it’s a useful beginning.

#DevLearn Schedule

As usual, I will be at DevLearn (in Las Vegas) this next week, and welcome meeting up with you there. There is a lot going on. Here’re the things I’m involved in:

- On Tuesday, I’m running an all day workshop on eLearning Strategy. (Hint: it’s really a Revolutionize L&D workshop ;). I’m pleasantly surprised at how many folks will be there!

- On Wednesday at 1:15 (right after lunch), I’ll be speaking on the design approach I’m leading at the Wadhwani Foundation, where we’re trying to integrate learning science with pragmatic execution. It’s at least partly a Serious eLearning Manifesto session.

- On Wednesday at 2:45, I’ll be part of a panel on mlearning with my fellow mLearnCon advisory board members Robert Gadd, Sarah Gilbert, and Chad Udell, chaired by conference program director David Kelly.

Of course, there’s much more. A few things I’m looking forward to:

- The keynotes:

- Neil deGrasse Tyson, a fave for his witty support of science

- Beau Lotto talking about perception

- Belinda Parmar talking about women in tech (a burning issue right now)

- DemoFest, all the great examples people are bringing

- and, of course, the networking opportunities

DevLearn is probably my favorite conference of the year: learning focused, technologically advanced, well organized, and with the right people. If you can’t make it this year, you might want to put it on your calendar for another!

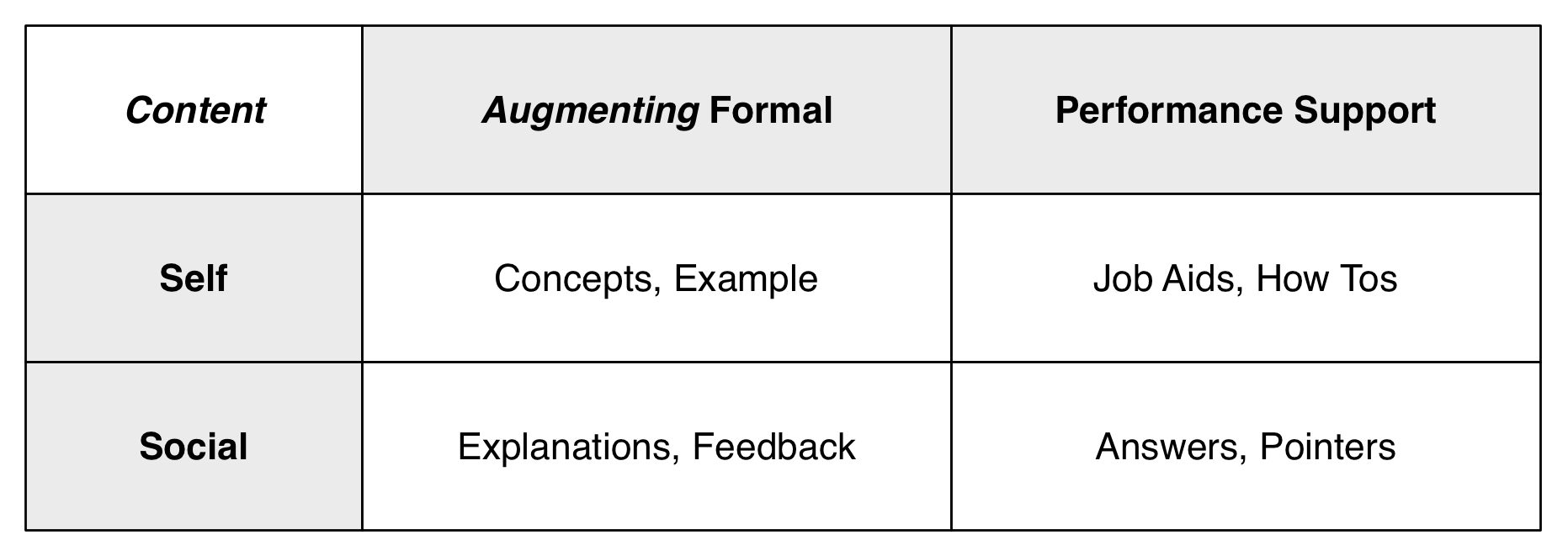

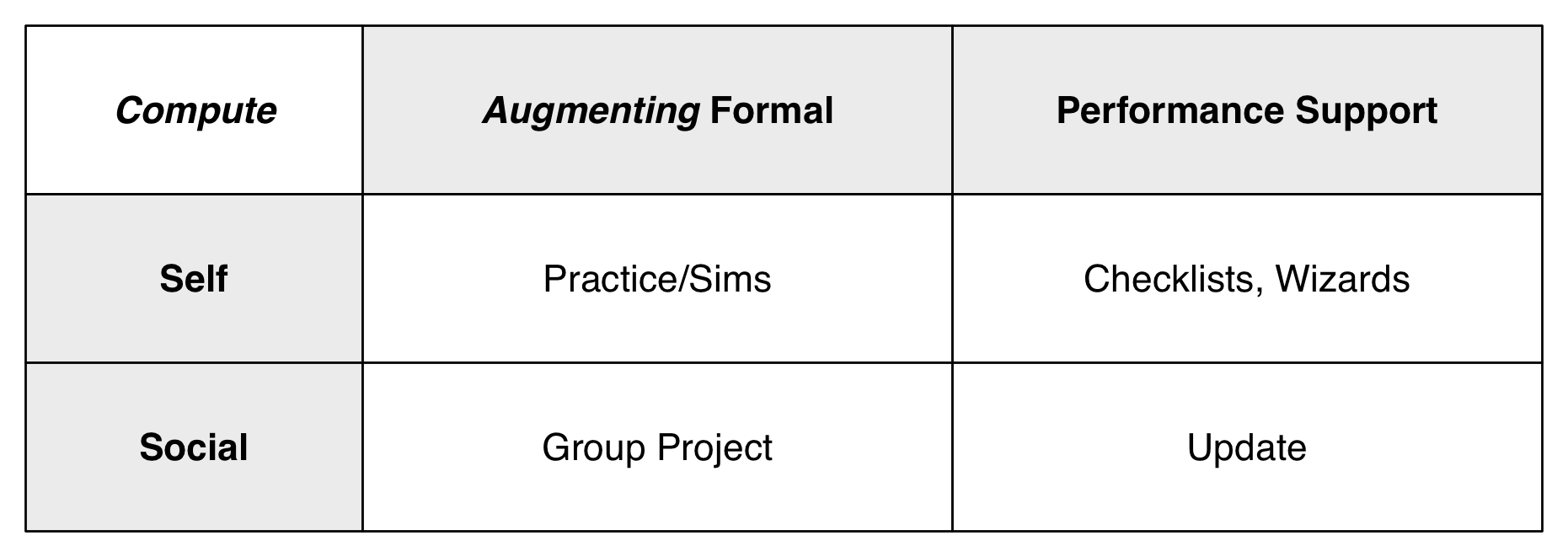

Extending Mobile Models

In preparation for a presentation, I was reviewing my mobile models. You may recall I started with my 4C’s model (Content, Compute, Communicate, & Capture), and have mapped that further onto Augmenting Formal, Performance Support, Social, & Contextual. I’ve refined it as well, separating out contextual and social as different ways of looking at formal and performance support. And, of course, I’ve elaborated it again, and wonder whether you think this more detailed conceptualization makes sense.

So, my starting point was realizing that it wasn’t just content. That is, there’s a difference between compute and content where the interactivity was an important part of the 4C’s, so that the characteristics in the content box weren’t discriminated enough. So the new two initial sections are mlearning content and mlearning compute, by self or social. So, we can be getting things for an individual, or it can be something that’s socially generated or socially enabled.

The point is that content is prepared media, whether text, audio, or video. It can be delivered or accessed as needed. Compute, interactive capability, is harder, but potentially more valuable. Here, an individual might actively practice, have mixed initiative dialogs, or even work with others or tools to develop an outcome or update some existing shared resources.

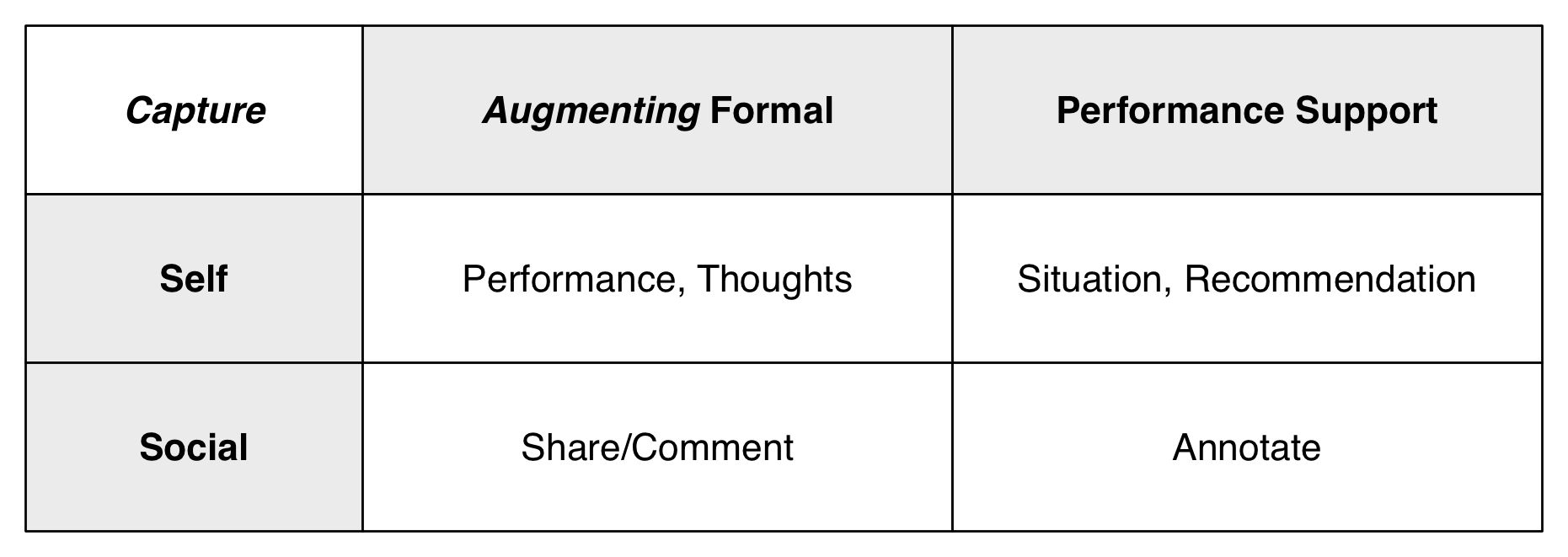

Things get more complex when we go beyond these elements. So I had capture as one thing, and I’m beginning to think it’s two: one is the capture of current context, and keeping and sharing that for various purposes, and the other is the system using that context to do something unique.

To be clear here, capture is where you use the text insertion, microphone, or camera to catch unique contextual data (or user input). It could also be other such data, such as a location, time, barometric pressure, temperature, or more. This data, then, is available to review, reflect on, or more. It can be combinations, of course, e.g. a picture at this time and this location.

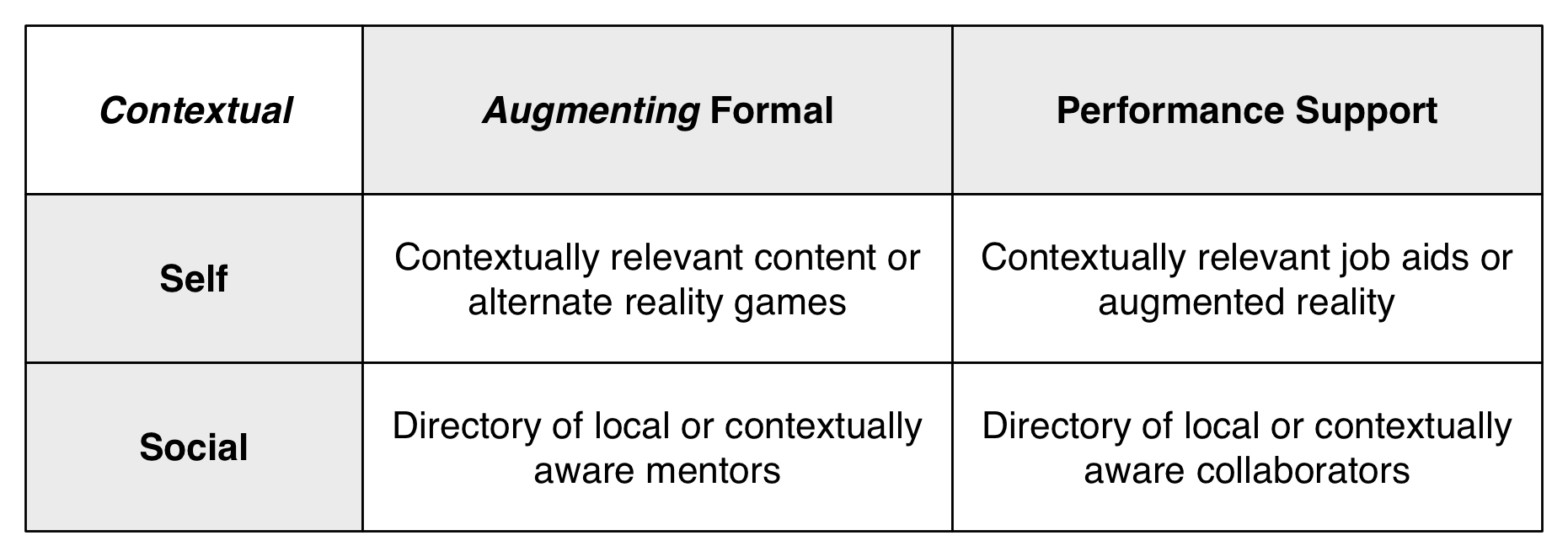

Now, if the system uses this information to do something different than under other circumstances, we’re contextualizing what we do. Whether it’s because of when you are, providing specific information, or where you are, using location characteristics, this is likely to be the most valuable opportunity. Here I’m thinking alternate reality games or augmented reality (whether it’s voiceover, visual overlays, what have you).

And I think this is device independent, e.g. it could apply to watches or glasses or ... as well as phones and tablets. It means my 4 C’s become: content, compute, capture, and contextualize. To ponder.
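To make the capture versus contextualize distinction concrete, here is a minimal Python sketch. It is only an illustration under assumptions: the `Capture` class, the `contextualize` function, and the example location and responses are hypothetical names invented for this sketch, not part of any real mobile platform.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Capture:
    """Captured context: some user input plus when (and optionally where) it was taken."""
    note: str
    taken_at: datetime
    location: Optional[str] = None  # hypothetical free-text place label


def contextualize(capture: Capture) -> str:
    """The system does something different depending on the captured context."""
    if capture.location == "loading dock":
        # 'Where you are': use location characteristics.
        return "Show the lifting-safety checklist"
    if capture.taken_at.hour < 9:
        # 'When you are': provide time-specific information.
        return "Show today's schedule"
    return "Show the general help page"


# Capture alone just stores the data for later review or reflection...
photo = Capture("picture of pallet", datetime(2014, 10, 27, 14, 0), "loading dock")
# ...while contextualizing uses it to change what happens now.
suggestion = contextualize(photo)
```

Nothing here depends on the device: the same record could come from a phone, a watch, or glasses.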

So, this is a more nuanced look at the mobile opportunities, and certainly more complex as well. Does the greater detail provide greater benefit?

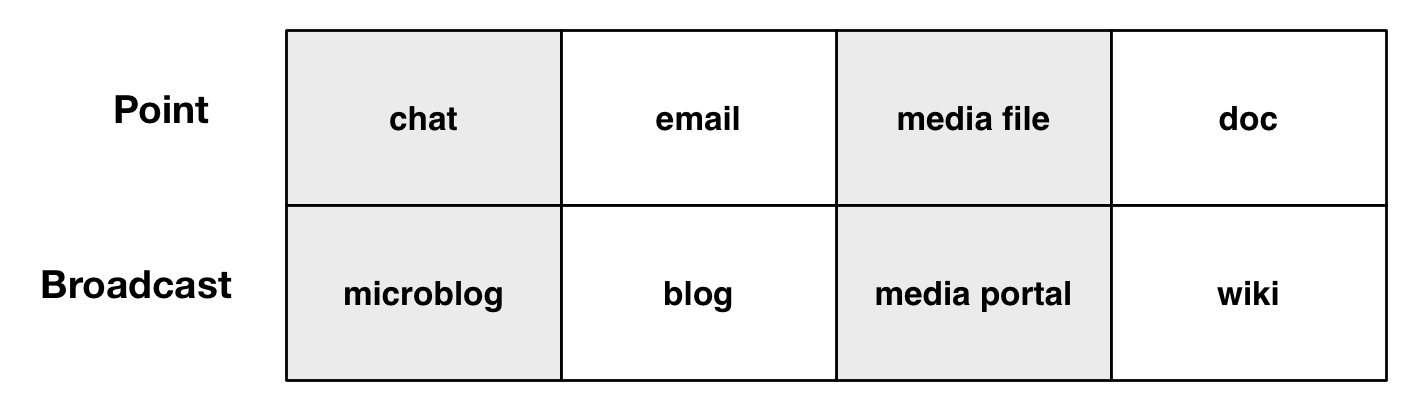

Sharing pointedly or broadly

In a (rare) fit of tidying, I was moving from one note-taking app to another, and found a diagram I’d jotted, and it rekindled my thinking. The point was characterizing social media in terms of their particular mechanisms of distribution. I can’t fully recall what prompted the attempt at characterization, but one result of revisiting was thinking about the media in terms of whether they’re part of a natural mechanism of ‘show your work’ (à la Bozarth)/‘work out loud’ (à la Jarche).

The question revolves around whether the media are point or broadcast, that is whether you specify particular recipients (even in a mailing or group list), or whether it’s ‘out there’ for anyone to access. Now, there are distinctions, so you can have restricted access on the ‘broadcast’ mode, but in principle there’re two different mechanisms at work.

It should be noted that in the ‘broadcast’ model, not everyone may be aware that there’s a new message, if they’re not ‘following’ the poster of the message, but it should be findable by search if not directly. Also, the broadcast may only be an organizational network, or it can be the entire internet. Regardless, there are differences between the two mechanisms.
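One way to picture the two mechanisms is as a small data model. The Python sketch below is an assumption-laden illustration, not a description of any real tool: `Message`, `can_find`, and `is_notified` are hypothetical names, and ‘broadcast’ is modelled simply as a message with no recipient list.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Set


@dataclass(frozen=True)
class Message:
    author: str
    text: str
    # Point: an explicit set of recipients. Broadcast: None (open to the network).
    recipients: Optional[FrozenSet[str]] = None

    @property
    def is_broadcast(self) -> bool:
        return self.recipients is None


def can_find(msg: Message, reader: str) -> bool:
    """Broadcast is findable by anyone on the network; point only by author and recipients."""
    return msg.is_broadcast or reader == msg.author or reader in msg.recipients


def is_notified(msg: Message, reader: str, following: Dict[str, Set[str]]) -> bool:
    """Point recipients are told directly; a broadcast only reaches the author's followers."""
    if msg.is_broadcast:
        return msg.author in following.get(reader, set())
    return reader in msg.recipients


# Hypothetical people: ana follows clark, ben follows no one.
following = {"ana": {"clark"}, "ben": set()}
chat = Message("clark", "Can you help with this?", frozenset({"ana"}))
post = Message("clark", "New diagram on sharing")
```

Here `ben` is never notified of `post`, but `can_find(post, "ben")` is still true, which is the ‘findable by search if not directly’ case.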

So, for example, a chat tool typically lets you ping a particular person, or a set list. On the other hand, a microblog lets anyone decide to ‘follow’ your quick posts. Not everyone will necessarily be paying attention to the ‘broadcast’, but they could. Typically, microblogs (and chat) are for short messages, such as requests for help or pointers to something interesting. The limitations mean that more lengthy discussions typically are conveyed via…

Formats supporting unlimited text, including thoughtful reflections, updates on thinking, and more, tend to be conveyed via email or blog posts. Again, email is addressed to a specific list of people, directly or via a mail list, openly or perhaps with some folks receiving copies ‘blind’ (that is, not all know who all is receiving the message). A blog post (like this), on the other hand, is open for anyone on the ‘system’.

The same holds true for other media files besides text. Video and audio can be hidden in a particular place (e.g. a course) or sent directly to one person. On the other hand, such a message can be hosted on a portal (YouTube, iTunes) where anyone can see. The dialog around a file provides a rich augmentation, just as such can be happening on a blog, or edited RTs of a microblog comment.

Finally, a slightly different twist is shown with documents. Edited documents (e.g. papers, presentations, spreadsheets) can be created and sent, but there’s little opportunity for cooperative development. Creating these in a richer way that allows for others to contribute requires a collaborative document (once known as a wiki). One of my dreams is that we may have collaboratively developed interactives as well, though that still seems some way off.

The point for showing your work or working out loud is that the point mechanism is only a way to get specific feedback, whereas a broadcast mechanism is really about the opportunity to get broader awareness and, potentially, feedback. This leads to a broader shared understanding and continual improvement, two goals critical to organizational improvement.

Let me be the first to say that this isn’t necessarily an important, or even new, distinction; it’s just me practicing what I preach. Also, I recognize that the collaborative documents are fundamentally different, and I need to have a more differentiated way to look at these (pointers or ideas, anyone?), but here’s my interim thinking. What say you?

#itashare

Types of meaningful processing

In a previous post, I argued for different types and ratios for worthwhile learning activities. I’ve been thinking about this (and working on it) quite a bit lately. I know there are other resources that I should know about (pointers welcome), but I’m currently wrestling with several types of situations and wanted to share my thinking. This is aside from scenarios/simulations (e.g. games) that are the first, best, learning practice you can engage in, of course. What I’m looking for is ways to get learners to do processing in ways that will assist their ability to do. This isn’t recitation, but application.

So one situation is where the learner has to execute the right procedure. This seems easy, and learners are liable to get it right in practice. The problem is that they can still get it wrong in real situations. An idea I had heard of before, but that was reiterated through Socratic Arts (Roger Schank & cohorts), was to have learners observe someone performing it (e.g. on video) and identify whether it was right or not. This is a more challenging task than just doing it right for many routine but important tasks (e.g. sanitation). It has learners monitor the process, and then they can turn that on themselves to become self-monitoring. If the selection of mistakes is broad enough, they’ll have experience that will transfer to their whole performance.

Another task that I faced earlier was the situation where people had to interpret guidelines to make a decision. Typically, the extreme cases are obvious, and instructors argue that they all are, but in reality there are many ambiguous situations. Here, as I’ve argued before, the thing to do is have folks work in groups and be presented with increasingly ambiguous situations. What emerges from the discussion is usually a rich unpacking of the elements. This processing of the rules in context exposes the underlying issues in important ways.

Another type of task is helping people understand applying models to make decisions. Rather than present them with the models, I’m again looking for more meaningful processing. Eventually I’ll expect learners to make decisions with them, but as a scaffolding step, I’m asking them to interpret the models in terms of their recommendations for use. So before I have them engage in scenarios, I’ll ask them to use the models to create, say, a guide to how to use that information. To diagnose, to remedy, to put in place initial protections. At other times, I’ll have them derive subsequent processes from the theoretical model.

One other example I recall came from a paper that Tom Reeves wrote (and I can’t find) where he had learners pick from a number of options that indicated problems or actions to take. The interesting difference was that there was then a followup question about why. Every choice was two stages: decision and then rationale. This is a very clever way to see if they’re not just getting the right answer but can understand why it’s right. I wonder if any of the authoring tools on the market right now include such a template!
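As a rough sketch of what such a two-stage template might look like, here is a small Python structure. Everything in it is hypothetical (the `TwoStageItem` name, its fields, and the feedback labels are invented for illustration); it is not taken from Reeves’ paper or from any authoring tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TwoStageItem:
    """A question scored on both the decision and the rationale behind it."""
    prompt: str
    options: List[str]
    correct_option: int      # index into options
    rationales: List[str]
    correct_rationale: int   # index into rationales

    def score(self, chosen_option: int, chosen_rationale: int) -> str:
        right_choice = chosen_option == self.correct_option
        right_reason = chosen_rationale == self.correct_rationale
        if right_choice and right_reason:
            return "right answer, right reason"
        if right_choice:
            return "right answer, wrong reason"
        if right_reason:
            return "wrong answer, right reason"
        return "wrong answer, wrong reason"


# Hypothetical sanitation item, purely for illustration.
item = TwoStageItem(
    prompt="A worker handles raw food and then moves to plating. What next?",
    options=["Carry on plating", "Wash hands first"],
    correct_option=1,
    rationales=["It looks more professional", "It prevents cross-contamination"],
    correct_rationale=1,
)
feedback = item.score(chosen_option=1, chosen_rationale=0)
```

The useful case is the one shown: the learner picks the right action for the wrong reason, which a single-stage question would have scored as simply correct.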

I know there are more categories of learning and associated tasks that require useful processing (towards do, not know, mind you ;), but here are a couple that are ‘top of mind’ right now. Thoughts?

The resurgence of games?

I talked yesterday about how some concepts may not resonate immediately, and need to continue to be raised until the context is right. There I was talking about explorability and my own experience with service science, but it occurred to me that the same may be true of games.

Now, I’ve been pushing games as a vehicle for learning for a long time, well before my book came out on the topic. I strongly believe that next to mentored live practice (which doesn’t scale well), (serious) games are the next best learning opportunity. The reasons are strong:

- safe practice: learners can make mistakes without real consequences (tho’ world-based ones can play out)

- contextualized practice (and feedback): learning works better in context rather than on abstract problems

- sufficient practice: a game engine can give essentially infinite replay

- adaptive practice: the game can get more difficult to develop the learner to the necessary level

- meaningful practice: we can choose the world and story to be relevant and interesting to learners

The list goes on. Pretty much all the principles of the Serious eLearning Manifesto are addressed in games.

Now, I and others (Gee, Aldrich, Shaffer, again the list goes on) have touted this for years. Yet we haven’t seen as much progress as we could and should. It seemed like there was a resurgence around 2009-2010, but then it seemed to go quiet again. And now, with Karl Kapp’s Gamification book and the rise of interest in gamification, we have yet another wave of interest.

Now, I’m not a fan of the extrinsic gamification, but it appears there’s a growing awareness of the difference between extrinsic and intrinsic. And I’m seeing more use of games to develop understanding in at least K12 circles. Hopefully, the awareness will arise in higher ed and corp too.

Some fear that it’s too costly, but my response is twofold:

- games aren’t as expensive as you fear; there are lots of opportunities for games in lower price ranges (e.g. $100K). Don’t buy into the $1M and up mentality

- they’re actually likely to be effective (as part of a complete learning experience), compared to many if not most of the things being done in learning

So I hope we might finally go beyond Clicky Clicky Bling Bling (tarted-up quiz shows, cheesy videos, and more) and get to interaction that actually leads to change. Here’s hoping!

Service Thinking and the Revolution?

A colleague I greatly respect, who has a track record of high impact in important positions, has been a proponent of service science. And I confess that it hadn’t really penetrated. Yet last week I heard about it in a way that resonated much more strongly and got me thinking, so let me share where it’s leading my thinking, and see what you say.

One time I heard something exciting, a concept called interface ‘explorability’, when I was doing a summer internship at NASA while a grad student. When I brought it back to the lab, my advisor didn’t really resonate. Then, some time later (a year or two) he was discussing a concept and I mentioned that it sounded a lot like that ‘explorability’, and he suddenly wanted to know more. The point being that there is a time when you’re ready to hear a message. And that’s me with service science.

The concept is considering a mutual value generation process between provider and customer, and engineering it across the necessary system components and modular integrations to yield a successful solution. As organizations need to be more customer-centric, this perspective yields processes to do that in a very manageable, measurable way. And that’s the perspective I’d been missing when I’d previously heard about it, but Hastings & Saperstein presented it last week at the Future of Talent event in the form of Service Thinking, which brought the concept home.

I wondered how it compared to Design Thinking, another concept sweeping instructional design and related fields, and it appears to be synergistic but perhaps a superset. While nothing precludes Design Thinking from producing the type of outcome Service Thinking is advocating, I’m inferring that Service Thinking is a bit more systematic and higher level.

The interesting idea for me was to think of bringing Service Thinking to the role of L&D in the organization. If we’re looking systematically at how we can bring value to the customer, in this case the organization, we have a chance to look at the bigger picture: the Performance & Development view instead of the training view. If we take the perspective of an integrated approach to meeting organizational execution and innovation needs, we may naturally develop the performance ecosystem.

We need to take a more comprehensive approach, where we integrate technology capabilities, resources, and people into an integrated whole. I’m looking at service thinking, as perhaps an integration of the rigor of systems thinking with the creative customer focus of design thinking, as at least another way to get us there. Thoughts?