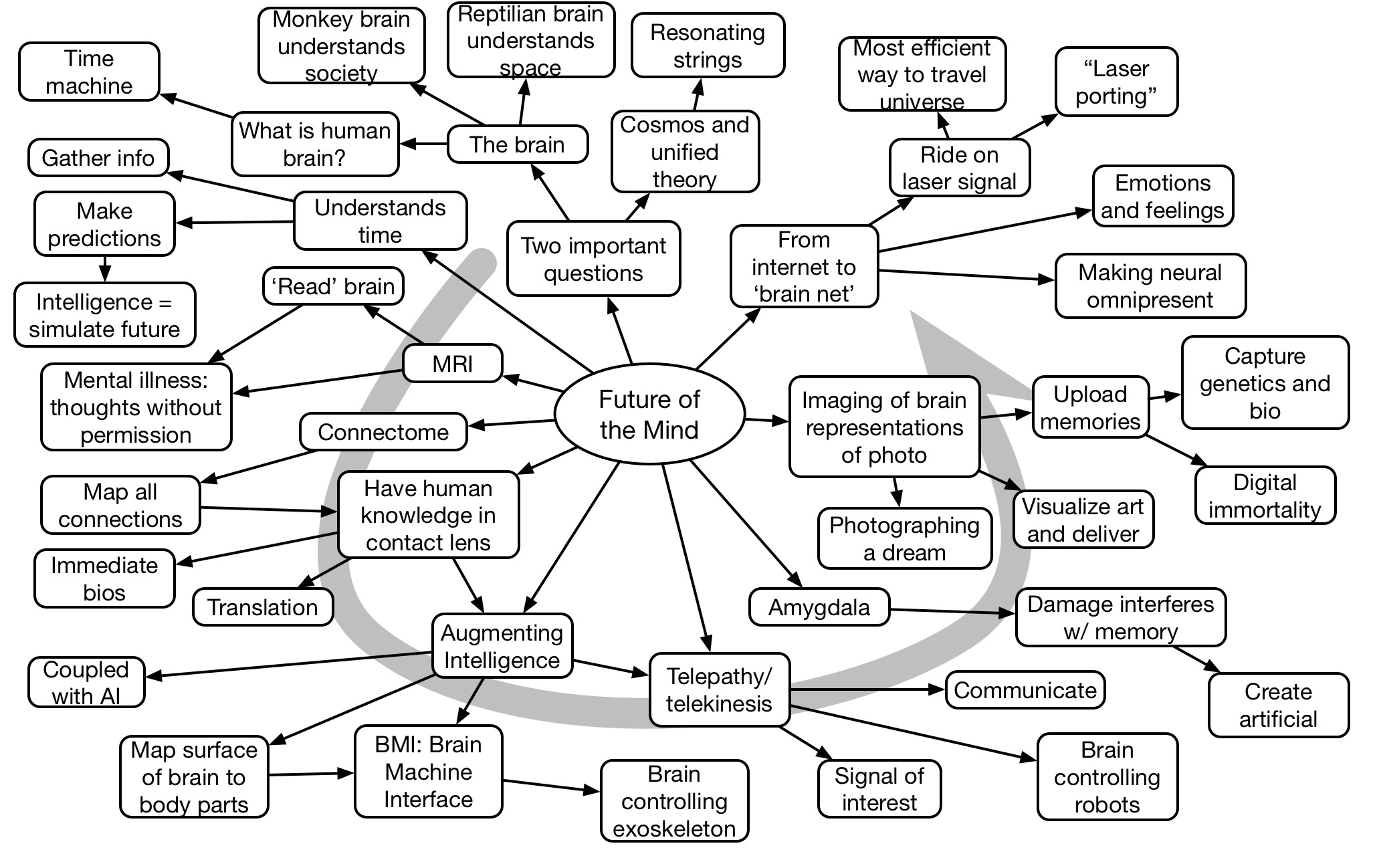

Michio Kaku opened the second day of DevLearn with a keynote on the future of the mind. He extrapolated from current research to some speculative ideas about what our future could hold. He talked about how research from physics (?!?) on MRI, AI, and more could provide new capabilities.