Conference season has commenced. Two are already in the books. Three I know about are coming up, and I’m playing a role in two. So, what’s up, and when? Here’s what I know.

So, first, the Learning Development Accelerator (LDA) is running the Creating a Motivating Work Environment Summit. This is in conjunction with the Center for Self-Determination Theory, so it’s scrutable. I’m not part of this, except as a participant. It follows the usual LDA format: access to videos created by the presenters, followed by live sessions at two different times. The videos are already up, and the live sessions are coming soon, Nov 3 – 7! It’s all online, which makes it easy to attend, and the live sessions will be recorded. There’s a stellar lineup of speakers, naturally! I’m increasingly finding the value in the theory, so I look forward to the session. Caveat: I’m a Co-Director of the LDA, so I have a vested interest in the success, but I still think it’s of interest (at least to me).

Then, the Learning Guild is holding the next DevLearn conference, and I’ll be doing several things. My Wed is pretty full, as I’m starting by hosting a Morning Buzz on building a learning culture. Hosting isn’t the same as presenting; it’s just facilitating the conversation. Then I’m presenting on the spacing of learning. I’ve been actively engaged in developing a spaced learning strategy, and will be sharing the key principles from learning science research, as well as what’s not (yet) known! I’ll be signing books right after that at the event bookstore. On Thursday, I’m part of a panel on AI (which will probably be interpreted as Generative AI), and will be my usual critical self ;). Of course, I’ll also be wandering the halls and expo. If you’re there, say hello!

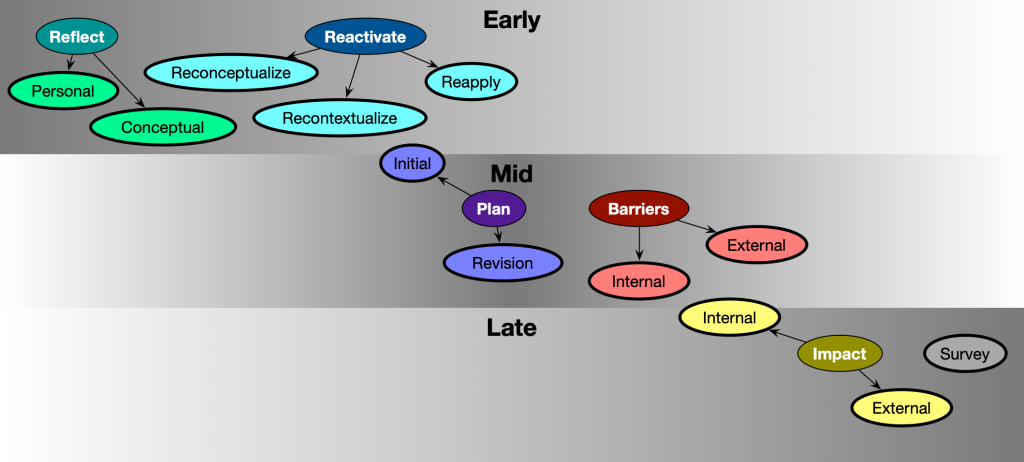

Finally, the LDA is also running our second Learning Science Conference. (If you attended last year, you get a big discount!) It uses the same format as the Motivation Summit, above; that is, specifically curated content in presentations, followed by live sessions. It starts 3 Nov, with the live sessions Dec 8 – 12, and I’m active in this one too. I’ll again be presenting two sections. The first will be on getting information into, and out of, long-term memory, specifically generative and retrieval practice. I talked about the latter last year, so I’m refining that (my own understanding evolves, as does the field), and adding more on generative. Similarly with social and informal learning, which I’ll be presenting again. That is, I’ll be rehashing the old, and adding a bit that’s new.

There’re new speakers, too. We’ve the honor of having Gale Sinatra and Jim Hewitt, and Rich Mayer will be doing a special session with Ruth Clark. Other presenters include my fellow co-Director, Matt Richter, along with Stella Lee and Nidhi Sachdeva. There’ll be special sessions, such as with Will Thalheimer. Of course, we’ll have a debate, here with me going head to head with authors Bianca Baumann and Mike Taylor on marketing and motivation. We also will have a panel with greats Julie Dirksen, Jane Bozarth, and Koreen Pagano. And more.

Sure, there’re lots of ways to get on top of learning science and good design. There’s the Serious eLearning Manifesto, books (e.g. my recommended reading list), blogs (like this one), magazines like Training and eLearn, journals, and more. However, getting together with your fellow practitioners, live or online, is a real boon, and so conference season is a great opportunity. Hope to see you somewhere soon!