I’ve documented some quips in the past, but apparently not this one yet. Prompted by a nice article by Connie Malamed on creativity, I’m reminded of a saying, and the underlying thinking. Here are both the quip and some more on systematic creativity. First, the quip:

Systematic creativity is not an oxymoron!

In her article, Connie talks about what creativity is, why it’s important, and then about steps you can take to increase it. I want to dig a wee bit further into the cognitive and formal aspects of this to backstop her points. (Also, of course, to make the point that a cognitive perspective provides important insight.)

As background, I’ve been focused on creating learning experiences. This naturally includes cognition as the basis for learning, experiences, and design. So I’ve pursued eclectic investigations into all three. For instruction, I continue to track insights from behavioral, social, cognitive, post-cognitive, and even machine learning. On the engagement side, I continue to explore games, drama, fiction, UI/UX, roleplay, ‘flow’, and more. Similarly, for design my explorations include architecture, software engineering, graphic, product, and information design, and more.

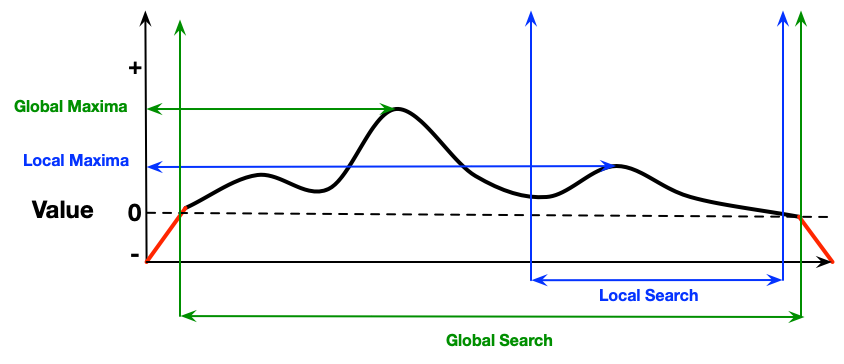

One of the interesting areas comes from computer science: searching through problem spaces for solutions. If we think of the solution set as a space, some solutions are better than others. It may not be a smooth continuum; instead, we might have local maxima that are ‘ok’, while there’s another elsewhere that’s better. If we are too lax in our search, we might only find a local maximum. However, there are ways to increase the chances of exploring a broader space, making a more global search. (Of course, this can be multidimensional.)
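To make the search metaphor concrete, here’s a minimal sketch in Python. Everything in it is an assumption for illustration: the one-dimensional `quality` landscape (an ‘ok’ peak at 20 and a better one at 80), and the hypothetical helpers `hill_climb` and `broad_search`. It isn’t drawn from any particular design method; it just shows how a narrow search can settle on a local maximum, while starting from several different places improves the odds of finding the better one.

```python
import random


def quality(x: int) -> float:
    """Made-up landscape on 0..99: an 'ok' peak at 20 and a better peak at 80."""
    ok_peak = 5 - 0.25 * abs(x - 20)
    better_peak = 10 - 0.5 * abs(x - 80)
    return max(ok_peak, better_peak, 0.0)


def hill_climb(start: int) -> int:
    """Greedy local search: step to the better neighbor until nothing improves."""
    x = start
    while True:
        neighbors = [n for n in (x - 1, x + 1) if 0 <= n <= 99]
        best = max(neighbors, key=quality)
        if quality(best) <= quality(x):
            return x  # no neighbor is strictly better, so we stop here
        x = best


def broad_search(starts: list[int]) -> int:
    """Run the same local search from several starts and keep the best result."""
    return max((hill_climb(s) for s in starts), key=quality)


if __name__ == "__main__":
    # A single start near the 'ok' peak settles for the local maximum.
    print("narrow search ends at", hill_climb(10))

    # Diverse (here, random) starting points explore more of the space.
    rng = random.Random()
    starts = [rng.randrange(100) for _ in range(10)]
    print("broad search ends at", broad_search(starts))
```

The random starts stand in for whatever pushes the search elsewhere. It’s still a probabilistic game: more, and more varied, starting points raise the odds of reaching the better peak without guaranteeing it.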

Practically, this includes several possibilities. For one, having a diverse team increases the likelihood that we’ll be exploring more broadly. (On the flip side, having folks who all think alike means all but one are redundant. ;) For another, brainstorming properly keeps the group from prematurely converging. We can use lateral random prompts to push us to other areas. And so on. I wrote a series of four posts about design that included a suite of heuristics to increase the likelihood of finding a good solution. Connie’s suggestions do likewise.

I also suppose this is a mental model that we can use to help think about designing. Mental models are bases for predictions and decisions. In this case, having the mental model can assist in thinking through practices that are likely to generate better designs. How do we keep from staying localized? How do we explore the solution space in a manner that goes broad, but not exhaustively? (In general, we’re designing under time and cost constraints.)

Creativity is the flip side of innovation. It takes the former to successfully execute on the latter. It’s a probabilistic game, but we can increase our odds by certain practices that emerge from research, theory, and practice. We also want to include emotion in the picture, in our design practices as well as in our solutions. When we do, we’re more likely to explore the space effectively, and increase our chances for the best solution. That’s a worthwhile endeavor, I’ll suggest. What are your systematic creativity approaches?