Jane Hart has been widely and wisely known for her top 100 Tools for Learning (you too can register your vote). As a public service announcement, I list my top 10 tools for learning as well:

- Google search: I regularly look up things I hear of and don’t know. It often leads me to Wikipedia (my preferred source, teachers take note), but regularly (i.e. 99.99% of the time) provides me with links that give me the answer I need.

- Twitter: I am pointed to many amazing and interesting things via Twitter.

- Skype: the Internet Time Alliance maintains a Skype channel where we regularly discuss issues, and ask and answer each other’s questions.

- Facebook: there’s another group that I use like the Skype channel, and of course just what comes in from friends’ postings is a great source of lateral input.

- WordPress: my blogging tool, which provides regular opportunities for reflection, both in writing posts and in the feedback others provide via comments.

- Microsoft Word: my writing tool for longer posts, articles, and of course books; writing is a powerful force for organizing my thoughts, and a great way to share them and get feedback.

- OmniGraffle: the diagramming tool I use, and diagramming is a great way for me to make sense of things.

- Keynote: creating presentations is another way to think through things, and of course a way to share my thoughts and get feedback.

- LinkedIn: I share thoughts there and track a few of the groups (not as thoroughly as I wish, of course).

- Mail: Apple’s email program, and email is another way I can ask questions or get help.

Useful tools that didn’t make the top 10 include Google Maps for directions, Yelp for eating, GoodReader as a way to read and annotate PDFs, and Safari, where I’ve bookmarked a number of sites I read every day, like news (ABC and Google News), information on technology, and more.

So that’s my list; what’s yours? I note, after the fact, that many are social media tools. That isn’t a surprise, but it reinforces just how social learning is!

Share your list with Jane via one of the methods she provides; it’s always interesting to see what emerges.

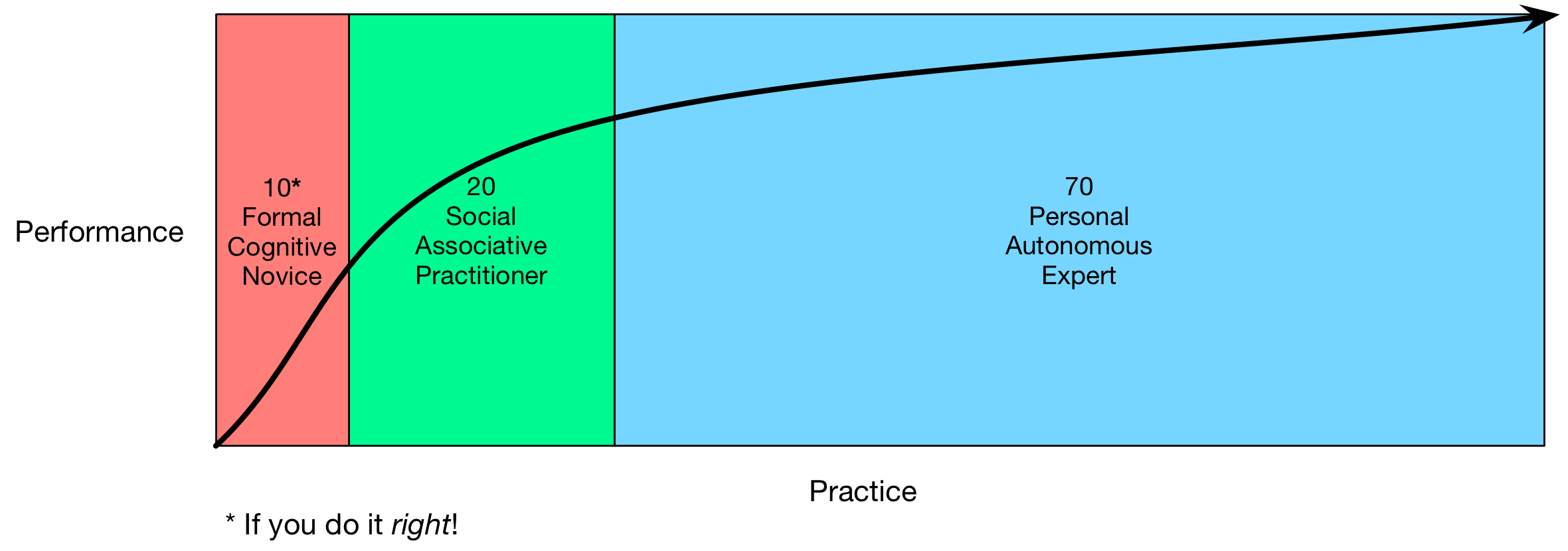

This, to me, maps more closely to 70:20:10, because you can see the formal (10) playing a role to kick off the semantic part of the learning, then coaching and mentoring (the 20) support the integration or association of the skills, and then the 70 (practice, reflection, and