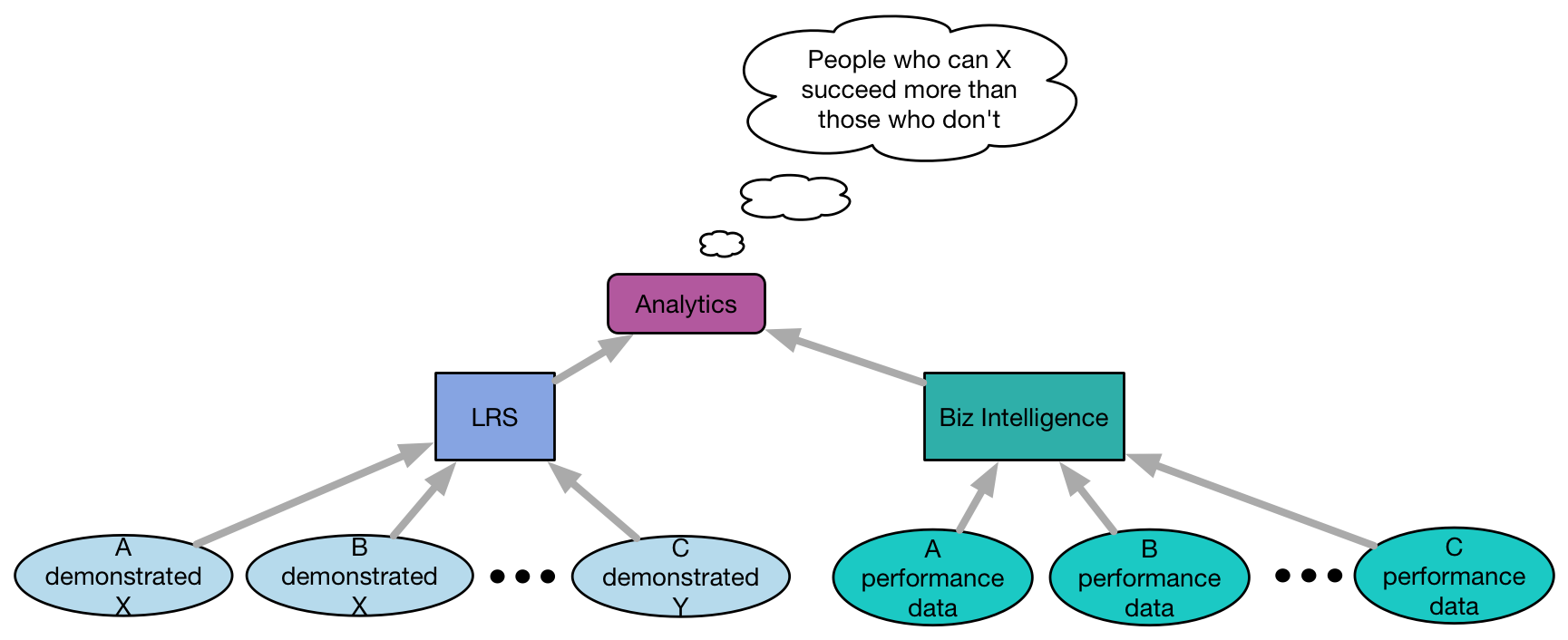

A couple of weeks ago, I had the pleasure of attending the xAPI Base Camp, to present on content strategy. While I was there, I remembered that I have some colleagues who don’t see the connection between xAPI and learning. And it occurred to me that I hadn’t seen a good diagram that helped explain how this all worked. So I asked and was confirmed in my suspicion. And, of course, I had to take a stab at it.

What I was trying to capture was how xAPI tracks activity, and how that record can then be used for insight. I think one of the problems people have is that they think xAPI is a solution all in itself, but it is just a syntax for reporting activity.

So when A demonstrates a capability at a particular level, say at the end of learning, or by affirmation from a coach or mentor, that gets recorded in a Learning Record Store (LRS). We can see that A and B demonstrated it, and C demonstrated a different level of capability (it could also be that there’s no record for C, or D, or…).
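To make that concrete, here is a minimal sketch of what one such record might look like, built as a Python dictionary and printed as JSON. The actor/verb/object/result shape follows the xAPI statement format, and the `mastered` verb comes from the ADL vocabulary. Everything else is an assumption for illustration: the `capability_statement` helper, the `example.com` identifiers, the email address, and the idea of carrying the capability level in a result extension.

```python
import json


def capability_statement(name, email, capability_id, capability_name, level):
    """Build an xAPI-style statement: <actor> mastered <capability> at <level>.

    Hypothetical helper; a real deployment would agree on its own
    verbs, activity IDs, and extension keys.
    """
    return {
        "actor": {
            "objectType": "Agent",
            "name": name,
            "mbox": f"mailto:{email}",
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/mastered",
            "display": {"en-US": "mastered"},
        },
        "object": {
            "objectType": "Activity",
            "id": capability_id,
            "definition": {"name": {"en-US": capability_name}},
        },
        "result": {
            "success": True,
            # Assumed extension key for the demonstrated level.
            "extensions": {"https://example.com/xapi/extensions/level": level},
        },
    }


statement = capability_statement(
    name="A",
    email="a@example.com",
    capability_id="https://example.com/capabilities/negotiation",
    capability_name="Negotiation",
    level="proficient",
)

# This JSON is what would be sent on to a Learning Record Store.
print(json.dumps(statement, indent=2))
```

The point of the sketch is just that the statement is a small, standard record of who did what. It says nothing yet about whether that mattered.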

From there, we can compare that activity with results. Our business intelligence system can provide aggregated data of performance for A (whatever A is being measured on: sales data, errors, time to solve customer problems, customer satisfaction, etc). With that, we can see if there are the correlations we expect, e.g. everyone who demonstrated this level of capability has reliably better performance than those who didn’t. Or whatever you’re expecting.
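As a rough sketch of that comparison, assume we have already pulled two things: the set of people with a record of demonstrating the capability, and a performance number per person from the business intelligence side. The names, the numbers, and the `compare_performance` helper below are all made up for illustration, and a simple difference of averages stands in for whatever analysis you would really run.

```python
from statistics import mean


def compare_performance(demonstrated, performance):
    """Average performance for people with and without the capability record.

    demonstrated: set of person IDs with a matching record in the LRS
    performance:  dict of person ID -> performance measure (higher is better)
    """
    with_cap = [score for person, score in performance.items() if person in demonstrated]
    without_cap = [score for person, score in performance.items() if person not in demonstrated]
    return {
        "with": mean(with_cap) if with_cap else None,
        "without": mean(without_cap) if without_cap else None,
    }


# Invented data: A and B demonstrated the capability, C and D did not.
demonstrated = {"A", "B"}
performance = {"A": 82.0, "B": 78.0, "C": 61.0, "D": 65.0}

summary = compare_performance(demonstrated, performance)
print(summary)
```

If the "with" group isn't reliably ahead of the "without" group, that's a signal to look again at the intervention, or at what you chose to measure.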

Of course, you can mine the data too, seeing what emerges. But the point is that there are a wide variety of things we might track (who touched this job aid, who liked this article, etc), and a wide variety of impacts we might hope for. I reckon that you should plan what impacts you expect from your intervention, put in checks to see, and then see if you get what you intended. But we can look at a lot more interventions than just courses. We can look to see if those more active in the community perform better, or any other question tied to a much richer picture than we get other ways.

OK, so you can do this with your own data-generating mechanisms, but standardization has benefits (how about agreeing that red means stop?). So, first, does this align with your understanding, or did I miss something? And, second, does this help at all?

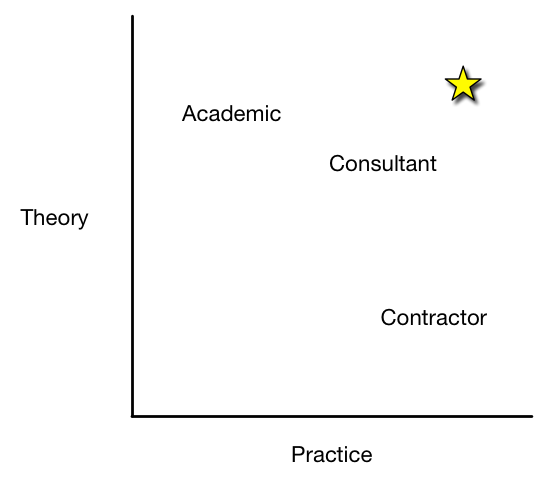

I was reminded of it, and realize I see it slightly differently. So I’d put someone high on the theory/information side as an academic or researcher, whether they’re in an institution or not. They know the theories behind the outcomes, and may study them, but don’t apply them. And I’d put someone who can execute against a particular model as a contractor. You know what you want done, and you hire someone to do it.
