So, I was recently at the DevLearn conference, and it was, as always, fun. Though, as you might expect, there was non-stop discussion of AI. Of course, even on the panel I was on (with about 130 other Guild Masters; hyperbole, me?) it was termed AI, tho’ everyone was really talking about Large Language Models (LLMs). In preparation, I started thinking about LLMs and their architecture. What I have realized (and argued) is that people are misusing LLMs. What became clear to me, though, is why. And I realize there’s another, and probably better, approach. So let’s talk about going beyond LLMs.

As background, you tune LLMs (and the underlying architecture, whether applied to video, audio, or language) to a particular task. Using text/language as an example, their goal is to create good-sounding language. And they’ve become very good at it. As has been said, they create what sounds like good answers. (They’re not, as hype has it, revolutionary, just evolutionary, but they appear to be new.)

I made that point on the panel, asking the audience how many thought LLMs made good answers, and there was a reasonable response. Then I asked how many thought they made what sounded like good answers. My point was that they’re not the same. So, they don’t necessarily make good design! (Diane Elkins pointed out that they’re trained on the average, so they create average. If you’re below average, they’re good; if you’re above average, they’ll do worse than what you’d do.) I ranted that tech-enabled bad design is still bad design!

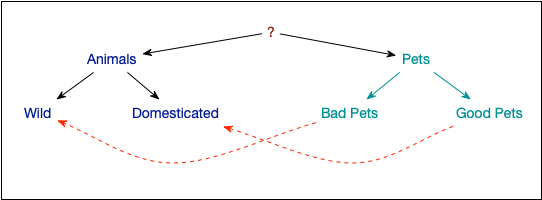

However, I’ve been a fan of predictive coding, as it poses a plausible model of cognition. Then I heard about active inference. And, in a quick search, I found out that together, they’re much closer to actual thinking. In particular, combined, they approach artificial general intelligence (AGI for short, a label wrongly attributed to our current capability). I admit that I don’t go fully into the math, but conceptually, they build a model of the world (as we do). Moreover, they learn, and keep learning. That is, they’re not training on a set of language statements to learn language; they’re building explanations of how the world works.
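For the curious, the "predict, compare, update, and keep learning" idea can be shown in a few lines. This is only a toy sketch under heavy assumptions, not how any real predictive coding or active inference system is built: the "world model" is a single number, and the names `update_belief`, `track_world`, and `learning_rate` are made up for illustration. What it does show is the core loop: make a prediction, measure the prediction error, and nudge the model to shrink that error, continually.

```python
def update_belief(belief, observation, learning_rate=0.1):
    """Nudge the belief toward the observation by a fraction of the prediction error."""
    prediction_error = observation - belief  # how wrong the current model was
    return belief + learning_rate * prediction_error


def track_world(observations, belief=0.0, learning_rate=0.1):
    """Update the belief after every observation; return the belief at each step."""
    history = []
    for observation in observations:
        belief = update_belief(belief, observation, learning_rate)
        history.append(belief)
    return history


if __name__ == "__main__":
    # Hypothetical data: the world sits at 10 for a while, then shifts to 20.
    observations = [10.0] * 100 + [20.0] * 100
    history = track_world(observations)
    print(f"belief before the shift: {history[99]:.2f}")
    print(f"belief after the shift:  {history[-1]:.2f}")
```

Note that there is no separate training phase here: when the world changes, the same loop simply keeps adapting. Real systems work with much richer models (and, in active inference, also choose actions), but the contrast with learning once from a fixed set of language statements is the point.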

I think that when we want really good systems to know about a domain (say business strategy) and provide good guidance, this is the type of architecture we need. What I said there, and will say again here, is that this is where we should be applying our efforts. We’re not there yet, and I’m not sure how far the models have evolved. On the other hand, if we were applying the resources going to LLMs… Look, I’m not saying there aren’t roles for LLMs, but too often they’re being used inappropriately. I think we can do better when we go beyond LLMs. You heard it here first ;).