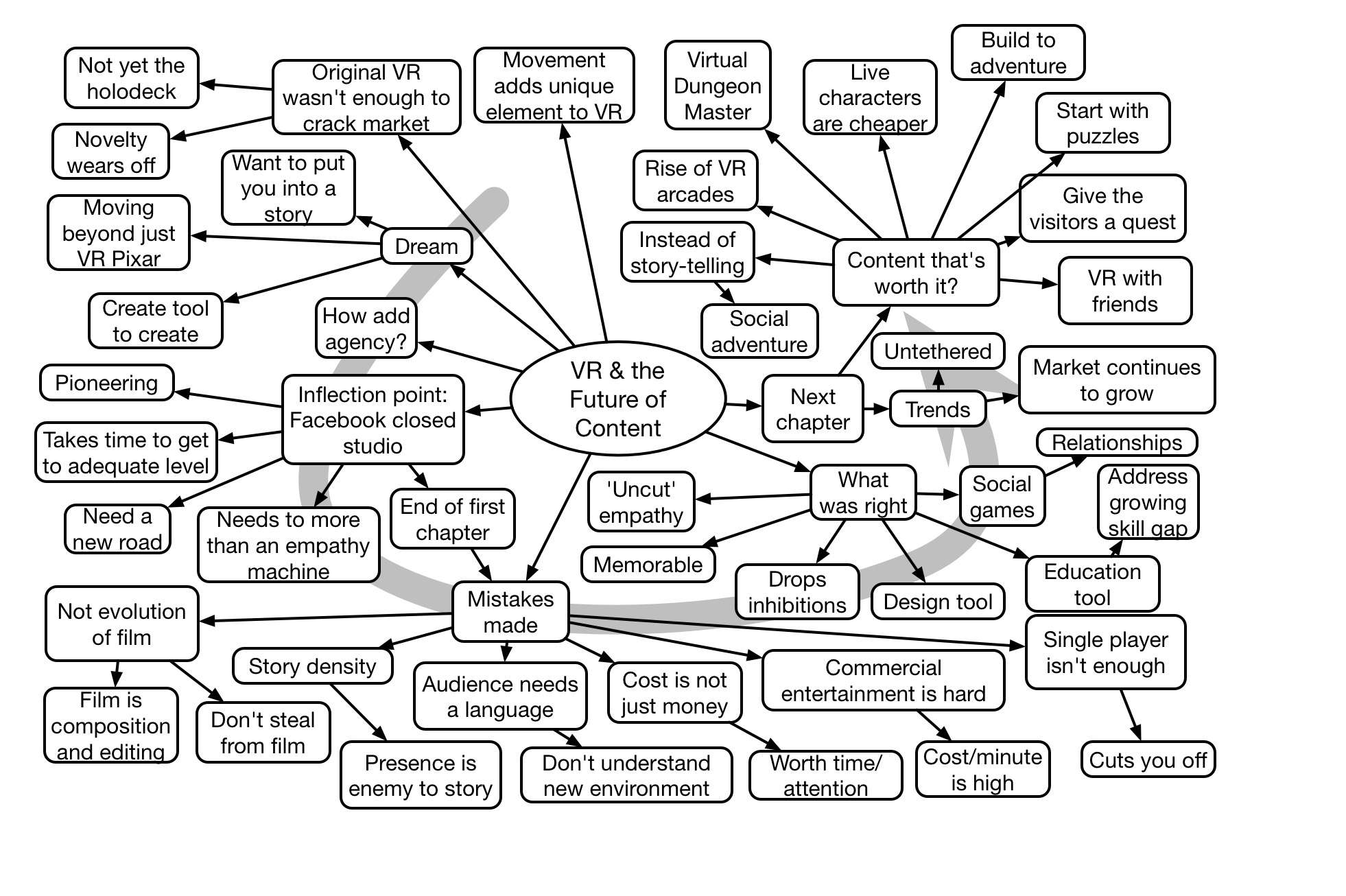

Maxwell Planck opened the eLearning Guild’s Realities 360 conference with a thoughtful and thought-provoking talk on VR. Reflecting on his experience in the industry, he described the transition from storytelling to where he thinks we should go: social adventure (I want to call it “adventure together” :). It was a nice start to the event.

What is the Future of Work?

Just what is the Future of Work about? Is it about new technology, or is it about how we work with people? We’re seeing amazing new technologies: collaboration platforms, analytics, and deep learning. We’re also hearing about new work practices such as teams, working (or reflecting) out loud, and more. Which is it? And/or how do they relate?

It’s very clear technology is changing the way we work. We now work digitally, communicating and collaborating. But there are more fundamental transitions happening. We’re integrating data across silos, and mining that data for new insights. We can consolidate platforms into single digital environments, facilitating the work. And we’re getting smart systems that do things our brains quite literally can’t, whether it’s complex calculations or reliable rote execution at scale. Plus we have technology-augmented design and prototyping tools that are shortening the time to develop and test ideas. It’s a whole new world.

Similarly, we’re seeing a growing understanding of work practices that lead to new outcomes. We’re finding out that people work better when we create environments that are psychologically safe, when we tap into diversity, when we are open to new ideas, and when we have time for reflection. We find that working in teams, sharing and annotating our work, and developing learning and personal knowledge mastery skills all contribute. And we even have new practices such as agile and design thinking that bring us closer to the actual problem. In short, we’re aligning practices more closely with how we think, work, and learn.

Thus, either could be seen as ‘the Future of Work’. Which is it? Is there a reconciliation? There’s a useful way to think about it that answers the question. What if we do either without the other?

If we use the new technologies in old ways, we’ll get incremental improvements. Command and control, silos, and transaction-based management can be supported, and even improved, but will still limit the possibilities. We can track more closely. But it won’t be fundamentally transformative.

On the other hand, if we change the work practices, creating an environment where trust allows both safety and accountability, we can get improvements whether we use technology or not. People have the capability to work together using old technology. You won’t get the benefits of some of the improvements, but you’ll get a fundamentally different level of engagement and outcomes than with an old approach.

Together, of course, is where we really want to be. Technology can amplify those practices transformatively. Together, as they say, the whole is greater than the sum of the parts.

I’ve argued that using new technologies like virtual reality and adaptive learning only makes sense after you first implement good design (otherwise you’re putting lipstick on a pig, as the saying goes). The same is true here. Implementing radical new technologies on top of old practices that don’t reflect what we know about people is a recipe for stagnation. Thus, to me, the Future of Work starts with practices that align with how we think, work, and learn, and are augmented with technology, not the other way around. Does that make sense to you?

Augmented Reality Lives!

Augmented Reality (AR) is on the upswing, and I think this is a good thing. I think AR makes sense, and it’s nice to see both solid tool support and real use cases emerging. Here’s the news, but first, a brief overview of why I like AR.

As I’ve noted before, our brains are powerful, but flawed. As with any architecture, any one choice ends up with tradeoffs, and we’ve traded off detail for pattern-matching. Technology is the opposite: it’s hard to get technology to do pattern matching, but it’s really good at rote. Together, they’re even more powerful. The goal is to augment our intellect with technology as appropriately as possible, creating a symbiosis where the whole is greater than the sum of the parts.

Which is why I like AR: it’s about annotating the world with information, augmenting it to our benefit. It’s contextual; that is, it does things based on when and where we are. AR augments our senses, whether auditory, visual, or kinesthetic (e.g. vibration). Auditory and kinesthetic annotation is relatively easy: devices generate sounds or vibrations (think GPS: “turn left here”). Non-coordinated visual information, information that’s not overlaid on our view, is presented as either graphics or text (think Yelp: maps and distances to nearby options). Tools already exist to do this, e.g. ARIS. However, arguably the most compelling and interesting form is aligned visuals.

Google Glass was a really interesting experiment, and it’s back. The devices – glasses with a camera and a projector that can present information on the glass – were available, but didn’t do much based on where you were looking. There was a generic heads-up display and a camera, but little alignment between what the wearer saw and the additional information presented. That’s changed. Google Glass has a new Enterprise Edition, and it’s being used to meet real needs and generate real outcomes. In manufacturing, the glasses are supporting tasks that require careful placement: the necessary components and steps are highlighted on the display, reducing errors and speeding up outcomes.

And Apple has released its Augmented Reality software toolkit, ARKit, with features to make AR easy. One interesting aspect is built-in machine learning, which could make aligning with objects in the world easy! Incompatible platforms and standards impede progress, but with Google and Apple each creating tools for their own platforms, development can accelerate. (I hope to find out more at the eLearning Guild’s Realities 360 conference.)

While I think Virtual Reality (VR) has an important role to play for deep learning, I think contextual support can be great for extending learning (particularly personalization), as well as for performance support. That’s why I’m excited about AR. My vision has been that we’ll have a personal coaching system that knows where and when we are and what our goals are, and can facilitate our learning and success. Tools like these will make that easier than ever.

Accountability and Safety

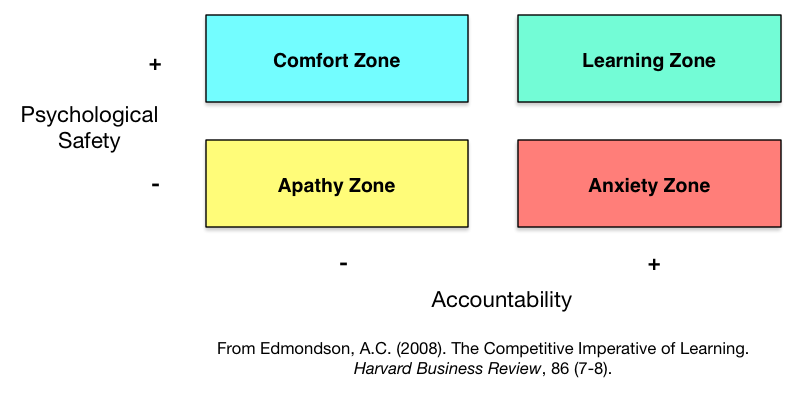

In much of the discussion about tapping into the power of people via networks and communities, we hear about the learning culture we need. Items like psychological safety, valuing diversity, openness, and time for reflection are up front. And I’m as guilty of this as anyone! However, one other element that appears in the more rigorous discussions (including Edmondson’s Teaming and Sutton & Rao’s Scaling Up Excellence) is accountability. (Which isn’t to say that it doesn’t appear in other pictures, but it’s certainly not in the foreground.) And it’s time to address this.

So, the model for becoming agile is creating an environment where people learn, alone and together. But it’s informal learning. When you research, problem-solve, design, etc., you don’t know the answer when you start! Yet it’s not as if anyone else has the answer, either. And we know that the output is better when we search more broadly through the possible solution space. (Which is what we’re doing, really.) This means we need diverse inputs to keep us from converging prematurely or searching too narrow a space. We also need those different voices to contribute, or we won’t get there. And we have to be open to new ideas, or we could inadvertently cut off part of the solution space. We also need time and tools for reflection (hence reflecting out loud).

Typically, the process is iterative (real innovations percolate/ferment/incubate; they aren’t ‘driven’): going away, doing tasks, and returning. Here, we need to ensure people are contributing and doing the work. We don’t want to micromanage it, but we do want to assist people, because we shouldn’t assume that they’re effective self and social learners. In short, we can’t squelch the sense of autonomy that accompanies purpose (à la Dan Pink’s Drive), yet the job must get done!

When there’s purpose, and community, accountability is natural. When we comprehend how what we’re doing contributes, when we have reciprocal trust with our colleagues that we’ll each do our part, and when there’s transparency about what’s happening, it comes naturally. A transactional model, where it’s a network and not a community, doesn’t feel safe, and doesn’t work as well. As Edmondson documents it, you want to be in the learning zone where you have both accountability and safety. And that’s not an easy balance.

So one of the steps to get there is to ensure accountability is part of the picture. And it’s not just calling someone on the carpet. Done right, it’s ongoing tracking and regular support to succeed, so accountability becomes a continuing relationship that says we want you to succeed, and we’ll help you do that. Accountability shouldn’t be a surprise! (Transparency helps.)

When we talk about the high-minded principles of making it safe and helping people feel welcome, some can view this as all touchy-feely and worry that it won’t get things done. Which isn’t true, but I think it helps if we keep accountability in the picture to assuage those concerns. Ultimately, we want outcomes, but new and improved ones, not just the same old things. The status quo isn’t really acceptable today. In this increasingly dynamic environment, the ability to adapt is key. And that’s the Learning Zone. Are you ready to go there?

Reflection ‘out loud’

I am a fan of Show Your Work and Work Out Loud, but I’m wondering whether they could mislead. Not that that’s the intent, of course, but they don’t necessarily include reflection, a critical component. I believe their proponents care about it, but the phrases don’t inherently imply annotating your thoughts. And I think it’s important.

The original phrase that resonated for me was ‘narrate your work’, which to me was more than just showing it. When teachers told you to show your work, they just wanted the intermediate steps. But Alan Schoenfeld’s research has documented that it’s valuable to show the thinking behind the steps: what’s your rationale for taking this step?

Teachers could identify where you went wrong, but that doesn’t necessarily tell them why you went wrong. On something as simple as multi-column subtraction, the answer itself would reveal whether a student borrowed incorrectly, reversed the numbers, or held some other specific misconception, because each shows up in the result. But on more complex problems, the intermediate steps may not preserve the rationale.

The design rationale approach emerged on complex projects for just this reason. New people could question earlier decisions, and if those decisions weren’t documented, you’d revisit them unnecessarily. It’s important to capture not only the decision, but the criteria used, the alternatives considered, etc. It is this thinking about what drove decisions that helps people understand your thinking and thereby improve it.

I don’t really think “reflection out loud” is the right term. I perhaps prefer ‘narrate your work’ or ‘share your thinking’. And I do believe that those talking about working out loud and sharing your work do intend this; it’s just that too often I’ve seen people take the surface implications of a phrase and skip the real importance (*cough* Kirkpatrick’s levels *cough*). So, worst case, I’ve confused the terminology space, but hopefully I’ve also helped illuminate the valuable underpinning. And practiced what I’m preaching ;). Your thoughts?

A Bad Tart

Good learning requires a basis for intrinsic interest. The topic should be of interest to the learner, a priori or after the introduction. If the learner doesn’t ‘get’ why this learning is relevant to them, it doesn’t stick as well. And this isn’t what gamification does. So tarting up content is counter-productive. It’s a bad (s)tart!

Ok, to be clear, there are two types of gamification. The first, important, and relevant type uses game design techniques to embed the learning topic into a meaningful series of decisions, where the context and the actions taken affect the outcomes in important ways, and the challenge is appropriate. However, that’s not the one getting all the hype.

Instead, the hype is around PBL (which, sadly, isn’t Problem-Based Learning but instead Points, Badges, & Leaderboards). The claim is that if we wrap this stuff around our learning, we’ll make it more engaging. And, at least initially, we’ll see that, at least in enthusiasm. But what about retention and transfer? And will there be a drop-off when the novelty wears off?

Yes, we can tart up drill-and-kill, and should, if that’s what’s called for (e.g. accurate retention of information). But that’s not what works for skills. And the times it’s actually relevant are scarce. For skills, we want appropriate retrieval. And that means something else.

Retention and transfer of new skills requires contextualized retrieval and application to decisions that learners need to be able to make. And that’s scenarios (or, at least, mini-scenarios). We need to put learners into situations requiring applying the knowledge to make decisions. Then the consequences play out.

If you’re putting your energy into finding gratuitous themes to wrap around knowledge recitation instead of making intrinsically meaningful contexts for knowledge application, you’re wasting time and money. You’re not going to develop skills.

I actually don’t mind if you want to tart things up after you’ve done the work of making the skill practice meaningful. But only after! If you skip the important practice design, you’re letting down your learners, as well as the organization. And typically we don’t have time to spend on unnecessary embellishment.

Please, for your learners’ sake, find out about both sorts of gamification, distinguish between them, and then use them appropriately. PBL is ok when rote knowledge has to be drilled, or after you’ve done good practice design.

Writing For Learning: Patti Shank book

While I ordinarily refuse review copies (on principle; otherwise I’d get swamped and become a PR hack, and I never promise a good review), I acquiesced to Patti Shank’s offer of a copy of her new book Write and Organize for Deeper Learning. I’ve been a fan of her crusade for science in learning (along with others like Will Thalheimer, Julie Dirksen, and Michael Allen, to name a few co-conspirators on the Serious eLearning Manifesto), and I can say I’m glad she offered and delivered. This is a contribution to the field, with a focus on writing.

The first of a potential series on evidence-based processes in learning design, this one is focused on content: writing and organization. In four overall strategies with 28 total tactics, she takes you through what you should do and why. Practicing what she preaches, and using the book itself recursively as an example, she uses simple words and trimmed-down prose to focus on what you need to know, along with guidance to generalize it. She also has practice exercises to help make the material stick.

Which isn’t to say there aren’t quibbles: for one, there’s no index! I wanted to look for ‘misconceptions’ (an acid test in my mind); it turns out it’s in there. Still, there’s less on cognitive models to guide performance than I would like, and not enough on misconceptions. But there are lots of valuable tips that I wouldn’t have thought to include.

These are small asides. Some of the advice is generic (writing for the web, for instance, similarly argues for whitespace) and some is specific to learning, but it’s all good advice, and not seen often enough. What’s there will certainly improve the quality of your learning design. If you write prose for elearning, you definitely should read, and heed, this book. I note, by the way, that my readability index for this blog always falls too high according to her standards ;).

She has a list of other potential books, and I hope she’ll deliver the one on designing practice and feedback (what I think is the biggest opportunity to improve elearning: what people do), as well as those on examples, job aids, evaluation, objectives, and more. Based on the initial delivery here, I hope this series continues to develop.

Jay Cross Memorial Award 2017: Marcia Conner

The Internet Time Alliance Jay Cross Memorial Award is presented to a workplace learning professional who has contributed in positive ways to the field of Real Learning and is reflective of Jay’s lifetime of work. Recipients champion workplace and social learning practices inside their organization and/or on the wider stage. They share their work in public and often challenge conventional wisdom. The Jay Cross Memorial Award is given to professionals who continuously welcome challenges at the cutting edge of their expertise and are convincing and effective advocates of a humanistic approach to workplace learning and performance.

We announce the award on 5 July, Jay’s birthday. Following his death in November 2015, the partners of the Internet Time Alliance (Jane Hart, Harold Jarche, Charles Jennings, and myself) resolved to continue Jay’s work. Jay Cross was a deep thinker and a man of many talents, never resting on his past accomplishments, and this award is one way to keep pushing our professional fields and industries to find new and better ways to learn and work.

The Internet Time Alliance Jay Cross Memorial Award for 2017 is presented to Marcia Conner. Marcia was an early leader in the movement for individual and social learning, and an innovator. As a Senior Manager at Microsoft, she developed new training practices and wrote an accessible white paper on the deeper aspects of learning design. She subsequently was the Information Futurist at PeopleSoft. She also served as a co-founder and editor at Learnativity, an early online magazine.

Marcia co-organized and co-hosted the Creating a Learning Culture conference at the Darden School of Business at the University of Virginia, leading to a book of the same title. As an advocate for the power of learning, alone and together, she wrote Learn More Now and co-wrote The New Social Learning (now in its second edition) with Tony Bingham of the Association for Talent Development. She also was the instigator who organized the team for the Twitter chat #lrnchat, which continues to this day.

Marcia’s a recognized leader, writing for Fast Company, and keynoting conferences around the world. She currently helps organizations go beyond their current approaches, changing their culture. She’s also in the process of moving her focus beyond organizations, to society. In her words, “I’m in pursuit of meaningful progress, with good faith and honesty, girded by what I know we are capable of doing right now. When we assemble all that is going on at the edges of culture, technology, and (dare I say) business, we find a wildly hopeful view of the future. People doing extraordinary things, on a human scale, that has the potential to change everything for the better.”

Marcia was a friend of Jay’s for many years (including organizing the creation of his Wikipedia page), and we’re proud to recognize her contributions.

Helen Blunden was the inaugural award winner in 2016.

An objective request

So, I’d like to ask a favor of you. I would like to improve my thinking about elearning design, and that starts with objectives. Or outcomes. Now, they can be easy, or challenging. I’d like to see some of the latter.

When the goal is fairly obvious, it’s simple to address. They need to be better at doing X? Well then, practice lots of X! But there are more challenging situations. You know the ones: weak verbs that aren’t about doing, where the performance is vague, where it’s about knowledge.

I’d like to get concrete. So…

I’d greatly appreciate it if you would share your challenging learning objectives (or outcomes you’re supposed to come up with objectives for) with me. The ones you dread. If they’re proprietary, feel free to generify them. I will also keep you anonymous. Feel free to ask for help, and I’ll respond appropriately.

What will I do with them? It depends. These may get written up as a post, if I see themes. They certainly are likely to be included in any workshops about deeper elearning design. I just want to work from real things, not my made up examples (as fabulous as they might be ;).

Feel free to comment here, or if you see this via LinkedIn or some other channel respond in whatever channel makes the most sense. Let me know if you’d like a reply.

And thanks in advance.

FocusOn Learning reflections

If you follow this blog (and you should :), it was pretty obvious that I was at the FocusOn Learning conference in San Diego last week (the previous 2 posts were mindmaps of the keynotes). And it was fun as always. Here I reflect a bit more on what happened, as an exercise in meta-learning.

There were three themes to the conference: mobile, games, and video. I’m pretty active in the first two (two books on the former, one on the latter), and the last is related to things I care and talk about. The focus led to some interesting outcomes: some folks were very interested in just one of the topics, while others were looking a bit more broadly. Whether that’s good or not depends on your perspective, I guess.

Mobile was present, happily, and continues to evolve. People are still talking about courses on a phone, but more folks were talking about extending the learning. Some of it was pretty dumb – just content or flash cards as learning augmentation – but there were interesting applications. Importantly, there was a growing awareness about performance support as a sensible approach. It’s nice to see the field mature.

For games, there were positive and negative signs. The good news is that games are being more fully understood in terms of their role in learning, e.g. deep practice. The bad news is that there’s still a lot of interest in gamification without a concomitant awareness of the important distinctions. Tarting up drill-and-kill with PBL (points, badges, and leaderboards; the new acronym apparently) isn’t worth significant interest! We know how to drill things that must be, but our focus should be on intrinsic interest.

As a side note, the demise of Flash has left us without a good game development environment. Flash is both a development environment and a delivery platform. As a development environment Flash had a low learning threshold, and yet could be used to build complex games. As a delivery platform, however, it’s woefully insecure (so much so that it’s been proscribed in most browsers). The fact that Adobe couldn’t be bothered to generate acceptable HTML5 out of the development environment, and let it languish, leaves the market open for another accessible tool. And Unity or Unreal provide good support (as I understand it), but still require coding. So we’re not at an easily accessible place. Oh, for HyperCard!

Much of the video interest was in technical issues (how to get quality, and/or how to do it on the cheap), but there was also a lot of interest in interactive video. I think branching video is a really powerful learning environment for contextualized decision making. As a consequence, the advent of tools that make it easier is to be lauded. An interesting session with the wise Joe Ganci (@elearningjoe) and a GoAnimate guy talked about when to use video versus animation, which largely seemed to reflect my view (confirmation bias ;) that it’s about whether you want more context (video) or concept (animation). Of course, it was also about the cost of production and the need for fidelity (video more than animation in both cases).

There was a lot of interest in VR, which crossed over between video and games. That’s interesting because VR isn’t inherently tied to games or video! In short, it’s a delivery technology. You can do branching scenarios, full game engine delivery, or just video in VR. The visuals can be generated as video or from digital models. There was some awareness of this; e.g. fun was made of the idea of presenting PowerPoint in VR (just like in Second Life ;).

I did an ecosystem presentation that contextualized all three (video, games, mobile) in the bigger picture, and also drew upon their cognitive and then L&D roles. I also deconstructed the game Fluxx (a really fun game with an interesting ‘twist’). Overall, it was a good conference (and nice to be in San Diego, one of my ‘homes’).