I was in contact with a person about a potential book, and she followed up with an interesting question: what’s the vision I have for publishing? She was looking for what I thought was a good book. Of course, I hadn’t really articulated it! I responded, but thought I should share my thinking with you as well. In particular, to get your thoughts! So, what makes a good book? (I’m talking non-fiction here, of course.)

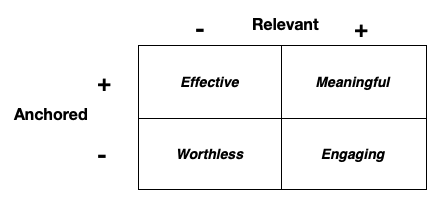

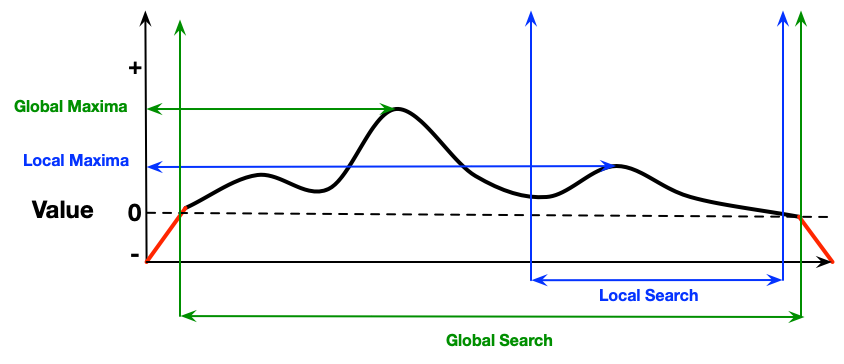

My first response was that I like books that take a sensible approach to a subject. That is, they start where the learner is and get them to realize this is an important topic. Then the book walks them through the thinking with models and examples. Ultimately, a book should leave them equipped to do new things. In a sense, it’s the author leading the reader through a narrative that leaves them with a different and valuable view of the world.

I think these books can take different forms. Some shake up your world view with new perspectives, for example Don Norman’s Design of Everyday Things or Todd Rose’s The End of Average. Another type provides deep coverage of an important topic, such as Patti Shank’s Write Better Multiple-Choice Questions. A third type leads you through a process, such as Cathy Moore’s Map It. These are rough characterizations that may not be mutually exclusive, but each can be done to fit the description above.

To me, the necessary elements are that it’s readable, authoritative, and worthwhile. That is, first there’s a narrative flow that makes it easy to process. For instance, Annie Murphy Paul’s The Extended Mind takes a journalistic approach to important phenomena. Also, a book needs an evidence base, grounded in documented experience and/or science. It can re-spin topics (I’m thinking here about Lisa Feldman Barrett’s How Emotions Are Made), but must offer a viable reinterpretation. Finally, it has to be something that’s worth covering. That may differ by reader, but it has to be applicable to a field. You should leave with a new perspective and potentially new capabilities.

That’s what came off the top of my head. What am I missing in what makes a good book?