As could be expected (in retrospect ;), a recurrent theme in the discussions at our recent Learning Science Conference was how to deal with objections. For instance, objections from folks who believe myths, or who don't understand learning. Of course, there's also the problem that we don't measure, amongst other things. However, we also have mistaken expectations about our endeavors, and that's worth addressing. So, here I'm talking about convincing stakeholders.

To be clear, I'm not talking about myths; I've already addressed that. But is there something to be taken away from that work? I suggested (and practiced in my book on myths in our industry) that we need to treat people with respect. Specifically, I suggest that we need to:

- Acknowledge the appeal

- Also address the potential downsides

- Then, look to the research

- Finally, and importantly, provide an alternative

The open question is whether this also applies to talking about learning.

In general, when talking about trying to convince folks about why we need to shift our expectations about learning, I suggest that we need to be prepared with a suite of stories. I recognize that different approaches will work in different circumstances. So, I’ve suggested we should have to hand:

- The theory

- The data/research

- A personal illustrative anecdote

- One of their own personal anecdotes (solicit it, then use it)

- A case study

- A case study of what competitors are doing

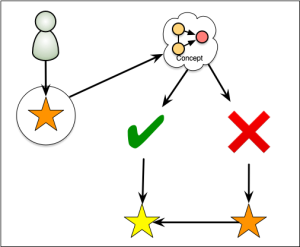

Then, we use the one we think works best with this stakeholder in this situation.

Can we put these together? I think we can, and perhaps should. We can acknowledge the appeal of the current approach. E.g., it's not costing too much, and we have faith it's working. We should also reveal the potential flaws if we don't remedy the situation: we're not actually moving any particular needle. Then we can examine the situation; here we draw upon one of the approaches from the second list. Finally, we offer an alternative: if we do good learning design, we can actually influence the organization in positive ways!

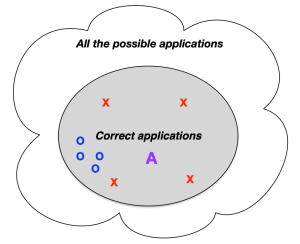

This, I suggest, is how we might approach convincing stakeholders. And, let me strongly urge, we need to! Currently there are far too many who believe that learning is the outcome of an event. That is, if we send people off to a training event, they'll come back with new skills. Yet learning science (and data, when we bother to collect it) tells us this isn't what happens. People may like the event, but there's no persistent change. Instead, learning requires a plan and a journey that develops learners over time. We know how to do good learning design; we just have to do it. Further, we have to have the resources and understanding to do so. We can work on the former, but we should work on the latter, too.