Not meaning this to be a sudden spate of reflectiveness, given my last post on my experience with the web, but Cammy Bean has asked when folks became instructional designers, and it occurs to me to capture my rather twisted path with a hope of clarifying the filters I bring in thinking about design.

It starts when I was a kid; as Cammy relates, I didn’t grow up thinking I wanted to be a learning designer. Besides several serious years of being enchanted with submarines (I still am, in theory, but realized I probably wouldn’t get along with the Navy because of my own flaws), I always wanted to have a big desk covered with cool technology, exploring new ideas. I wasn’t a computer geek back then (the computer club in high school sent programs off to the central office to run and received the printout a day or so later), but rather a science geek, reading Popular Science and spending hours on the floor looking at the explanatory diagrams in the World Book (I’m pretty clearly a visual conceptual learner :). And reading science fiction. I did have a bit of an applied bent, however, with a father who was an engineer and could fix anything, and who helped my brother and me work on our cars and things.

When I got to UCSD (just the right distance from home, and near the beach), my ambition to be a marine biologist was extinguished because the bio courses were both rote memorization and cut-throat pre-med, neither of which inspired me (my mom was an emergency room nurse, and I realized early on that I wasn’t cut out for blood and gore). I took some computer science classes with a buddy and found I could do the thinking (what with, er, distractions, I wasn’t the most diligent student, but I still managed to get pretty good grades). I also got a job tutoring calculus, physics, and chemistry with the campus office for some extra cash, and took some learning classes. I got interested in artificial intelligence, too, and was a bit of a groupie around how we think and really cool applications of technology.

I somehow got the job of computer support for the tutoring office, and that’s when a light went on about the possibilities of computers supporting learning. There wasn’t a degree program in place, but I found out my college allowed you to specify your own major, and I convinced Provost Stewart and two advisors (Mehan & Levin) to let me create my own program. Fortunately, I was able to leverage the education classes I’d taken for tutoring and the computer science classes I’d also taken, and I actually got out faster than I would have in any of the programs I’d already dabbled in! (And I got to do that cool ‘email for classroom discussion’ project with my advisors, in 1979!)

After calling around the country trying to find someone who needed a person interested in computers for learning, I finally got hooked up with Jim Schuyler, who had just started a company doing computer games to go along with textbook publishers’ offerings. I eventually managed to hook DesignWare up with Spinnaker to do a couple of home games for them before Jim had DesignWare start producing its own home games (I got to do two cool ones, FaceMaker and Spellicopter, as well as several others).

However, I had a hankering to go back to graduate school and get an advanced degree. As I wrestled with how to design the interfaces for games, I read an article calling for ‘cognitive engineering’, and contacted the author about where I might study it. Donald Norman ended up letting me study with him.

The group was largely focused on human-computer interaction, but I maintained my passion for learning solutions. I did a relatively mainstream PhD on the general cognitive skill of analogical reasoning, but I also attempted an intervention to improve that reasoning.

Though it was a cognitive group, I was eclectic, and looked at every form of learning. In addition to the cognitive theories that were in abundance, I took, and TA’d for, the behavioral learning courses. David Merrill was visiting nearby, and graciously allowed me to visit him for a discussion (and I read Reigeluth’s edited overview of instructional design theories as well). Michael Cole was a big fan of Vygotsky, and through him I was steeped in social learning theories. David Rumelhart and Jay McClelland were doing the connectionist/PDP work while I was a student, so I got that indoctrination as well. And, as an AI groupie, I even looked at machine learning!

I subsequently did a postdoc at the University of Pittsburgh’s Learning Research & Development Center, where I was further steeped in cognitive learning theory, before heading off to UNSW to teach interaction design and start doing my own research, which ended up being very much applied, essentially an action- or design-research approach. My subsequent activities have also been very much applications of a broad integration of learning theory into practical yet innovative design.

The point being, I never formally considered myself an instructional designer so much as a learning designer. Having worked on non-formal education in many ways, as well as teaching in higher education, my applications have crossed formal instruction and informal learning. Because the interface design field was very much exploring subjective experience while I was a graduate student, and because of my game design experience, I very naturally internalized a focus on engaging learning, believing that learning can, and should, be hard fun.

I’ve synthesized these eclectic frameworks into a coherent learning design model that I can apply across technologies, and I strongly believe that a solid grounding in conceptual frameworks, combined with experiences that span a range of technologies and learning outcomes, is the best preparation for a flexible ability to design experiences that are effective and engaging. Passionate as I am about learning, I do think we could do a better job of providing the education that’s needed to help make that happen, and I still look for ways to help others learn (one of my employees once said that working with me was like going to grad school, and I do try to educate clients, in addition to running workshops and continuing to speak).

And, I’ve ended up, as I dreamed of, with a desk covered with cool technology and I get to explore new ideas: designing solutions that integrate the cutting edge of devices, tools, models, frameworks, all to help people achieve their goals. I continue to think ahead about what new possibilities are out there, and work to improve what’s happening. I love learning experience design (and the associated strategic thinking to make it work), believe there’s at least some evidence that I do it pretty well, and hope to keep doing it myself and helping others do it better. Who’s up for some hard fun?

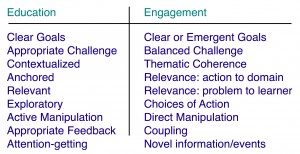

At the core was an alignment between what makes for effective learning practice and what makes for engaging experiences. Looking across educational theories, repeated elements emerge. The same is true of experience design. It turns out that the two perfectly align. If you recognize that, and can execute against it, your learning will be greater than the sum of its parts, and will both seriously engage and truly educate. Learning can, and should, be hard fun!

I’ve argued before that mobile is not really about learning, but about performance support. That said, there are roles for mobile in courses, either as a learning augment or even microcourses (but not putting a whole elearning course on a mobile device). In talking about mobile, I distinguish between convenience and context.